Layer Ensembles

Paper and Code

Oct 10, 2022

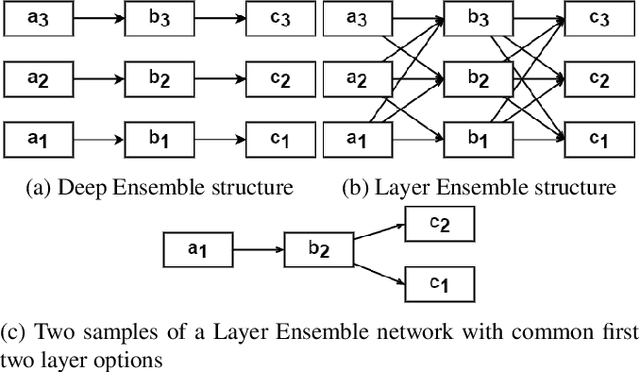

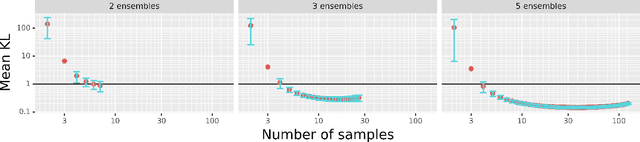

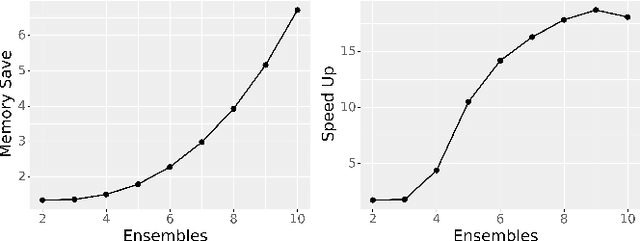

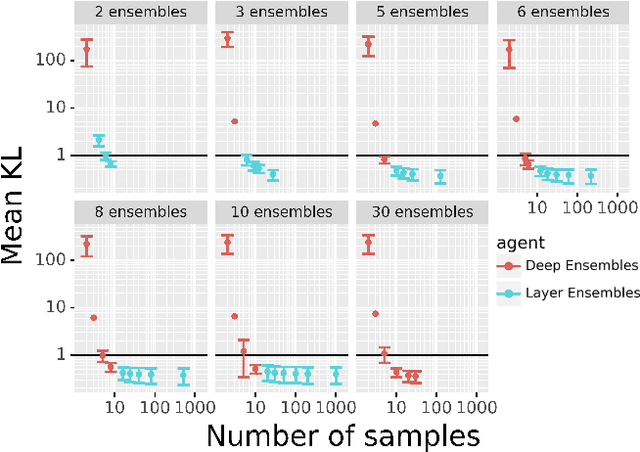

Deep Ensembles, as a type of Bayesian Neural Network, can be used to estimate the uncertainty of a prediction by collecting votes from multiple neural networks and measuring how much their predictions differ. In this paper, we introduce a novel uncertainty-estimation method called Layer Ensembles, which considers a set of independent categorical distributions, one for each layer of the network. Because samples can share (overlap in) layers, this gives many more possible samples than a regular Deep Ensemble. We further introduce Optimized Layer Ensembles, whose inference procedure reuses common layer outputs, achieving up to a 19x speed-up and a quadratic reduction in memory usage. We also show that Layer Ensembles can be further improved by ranking samples, resulting in models that require less memory and time to run while achieving higher uncertainty quality than Deep Ensembles.
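The counting argument and the idea of reusing common layer outputs can be illustrated with a toy sketch. It assumes that each of the L layers has N interchangeable instances and that a sample picks one instance per layer, giving N^L possible paths, whereas a Deep Ensemble of N networks has only the N "diagonal" paths (i, i, ..., i). The "layers" here are hypothetical scalar functions, and the names `make_layers`, `naive_inference`, `shared_prefix_inference` and `uncertainty` are illustrative, not taken from the authors' code. The prefix-sharing function only shows the principle that paths with a common prefix compute it once. It is not the paper's Optimized Layer Ensembles procedure and does not reproduce its reported speed-up or memory savings.

```python
import itertools
import math
from statistics import mean, pvariance


def make_layers(weights):
    """Hypothetical toy layers: weights[l][i] = (w, b) for instance i of layer l.

    Each instance is the scalar map x -> tanh(w * x + b); a real model would
    use neural network layers with learned parameters instead.
    """
    return [[(lambda x, w=w, b=b: math.tanh(w * x + b)) for (w, b) in layer]
            for layer in weights]


def naive_inference(layers, x):
    """Evaluate every path (i_1, ..., i_L) from scratch, counting layer calls."""
    calls = 0
    outputs = {}
    for path in itertools.product(*(range(len(layer)) for layer in layers)):
        h = x
        for l, i in enumerate(path):
            h = layers[l][i](h)
            calls += 1
        outputs[path] = h
    return outputs, calls


def shared_prefix_inference(layers, x):
    """Evaluate layer by layer, computing each shared prefix only once.

    This breadth-first version keeps every prefix activation in memory, so it
    illustrates computation reuse only, not any memory optimization.
    """
    calls = 0
    frontier = {(): x}  # prefix of instance indices -> activation after it
    for layer in layers:
        next_frontier = {}
        for prefix, h in frontier.items():
            for i, f in enumerate(layer):
                next_frontier[prefix + (i,)] = f(h)
                calls += 1
        frontier = next_frontier
    return frontier, calls


def uncertainty(outputs):
    """Mean prediction and spread (population variance) across samples."""
    values = list(outputs.values())
    return mean(values), pvariance(values)


# Usage: N = 2 instances per layer, L = 3 layers (made-up weights).
weights = [
    [(1.0, 0.0), (0.5, 0.1)],
    [(2.0, -0.3), (1.5, 0.2)],
    [(0.8, 0.05), (1.2, -0.1)],
]
layers = make_layers(weights)
naive_out, naive_calls = naive_inference(layers, 0.7)
shared_out, shared_calls = shared_prefix_inference(layers, 0.7)
print(len(naive_out), naive_calls, shared_calls)  # 8 24 14

# The Deep Ensemble counterpart uses only the diagonal paths (i, i, i).
deep_out = {p: v for p, v in shared_out.items() if len(set(p)) == 1}
print(uncertainty(shared_out), uncertainty(deep_out))
```

With N instances and L layers, the naive loop makes L * N^L layer calls, while the prefix-sharing loop makes N + N^2 + ... + N^L, because each partial path is computed once and then extended.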
