KTBoost: Combined Kernel and Tree Boosting

Paper and Code

Feb 11, 2019

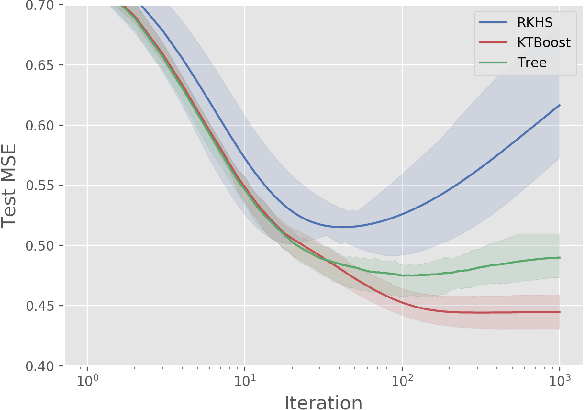

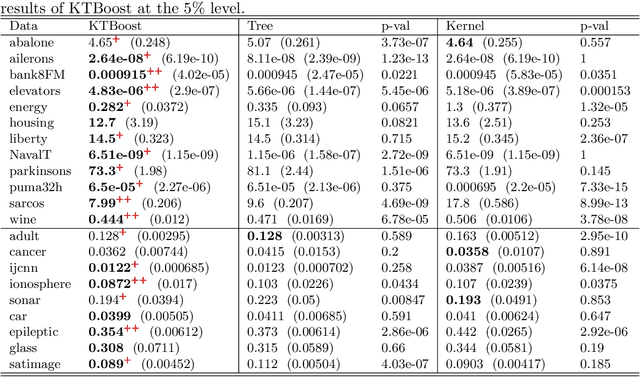

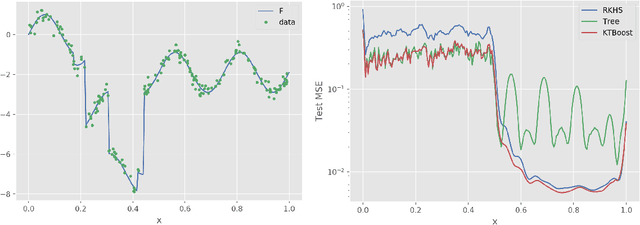

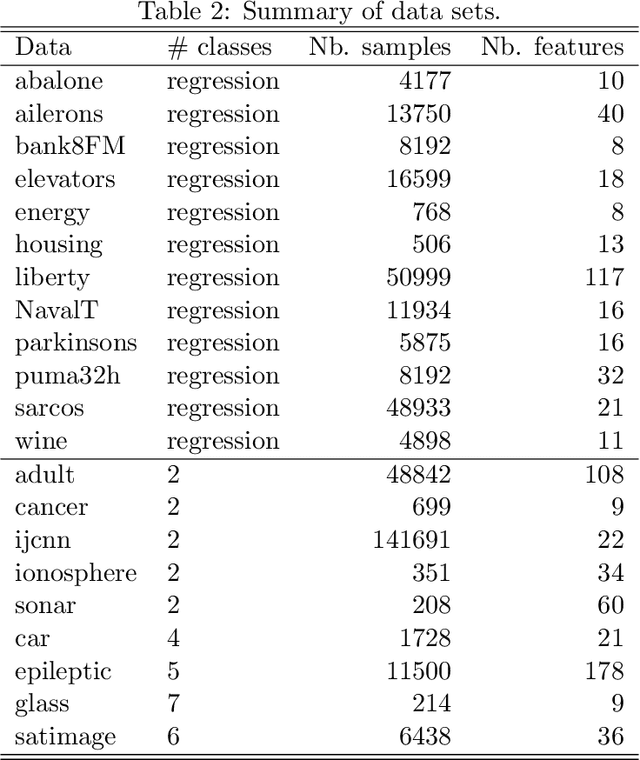

In this article, we introduce a novel boosting algorithm called "KTBoost", which combines kernel boosting and tree boosting. In each boosting iteration, the algorithm adds either a regression tree or a reproducing kernel Hilbert space (RKHS) regression function to the ensemble of base learners. Intuitively, discontinuous trees and continuous RKHS regression functions complement each other, and their combination allows for better learning of both continuous and discontinuous functions, as well as functions whose parts differ in their degree of regularity. We empirically show that KTBoost outperforms both tree boosting and kernel boosting in predictive accuracy on a wide array of data sets.
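To make the per-iteration choice between a tree and an RKHS function concrete, here is a minimal pure-Python sketch for one-dimensional inputs and squared-error loss. It is an illustration under stated assumptions, not the authors' implementation: the helper names (`fit_stump`, `fit_kernel_ridge`, `combined_boost`), the depth-1 tree, the Gaussian kernel with ridge penalty `lam`, and the rule of keeping whichever candidate has the lower training squared error on the current residuals are all choices made here for illustration.

```python
import math

def fit_stump(x, r):
    """Least-squares regression stump (depth-1 tree) on 1-D inputs."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        s = (lo + hi) / 2.0  # candidate split between neighbouring values
        left = [ri for xi, ri in zip(x, r) if xi <= s]
        right = [ri for xi, ri in zip(x, r) if xi > s]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        sse = sum((ri - ml) ** 2 for ri in left) + sum((ri - mr) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, s, ml, mr)
    if best is None:  # all inputs identical: fall back to a constant
        m = sum(r) / len(r)
        return lambda t: m
    _, s, ml, mr = best
    return lambda t: ml if t <= s else mr

def solve(a, b):
    """Solve a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(m[i][c]))
        m[c], m[p] = m[p], m[c]
        for i in range(c + 1, n):
            f = m[i][c] / m[c][c]
            for j in range(c, n + 1):
                m[i][j] -= f * m[c][j]
    z = [0.0] * n
    for i in reversed(range(n)):
        z[i] = (m[i][n] - sum(m[i][j] * z[j] for j in range(i + 1, n))) / m[i][i]
    return z

def fit_kernel_ridge(x, r, lam=1.0, scale=1.0):
    """Kernel ridge regression with a Gaussian kernel (assumed kernel choice)."""
    def k(a, b):
        return math.exp(-((a - b) ** 2) / (2.0 * scale ** 2))
    gram = [[k(a, b) + (lam if i == j else 0.0) for j, b in enumerate(x)]
            for i, a in enumerate(x)]
    alpha = solve(gram, r)
    return lambda t: sum(ai * k(t, xi) for ai, xi in zip(alpha, x))

def combined_boost(x, y, n_iter=50, lr=0.1):
    """Boosting that adds either a stump or a kernel ridge fit per iteration."""
    f0 = sum(y) / len(y)  # initial constant prediction
    pred = [f0] * len(y)
    ensemble = []
    for _ in range(n_iter):
        # For squared-error loss, the negative gradient is the residual.
        resid = [yi - pi for yi, pi in zip(y, pred)]
        candidates = {"tree": fit_stump(x, resid),
                      "kernel": fit_kernel_ridge(x, resid)}

        def sse(h):
            return sum((ri - h(xi)) ** 2 for xi, ri in zip(x, resid))

        # Assumed selection rule: keep the candidate with lower training error.
        kind = min(candidates, key=lambda name: sse(candidates[name]))
        h = candidates[kind]
        ensemble.append((kind, h))
        pred = [pi + lr * h(xi) for xi, pi in zip(x, pred)]

    def predict(t):
        return f0 + lr * sum(h(t) for _, h in ensemble)

    return predict, [kind for kind, _ in ensemble]
```

Calling `combined_boost(xs, ys)` on a target that mixes a smooth component with a jump (for example, a sine curve plus a step) returns a prediction function and the sequence of learner types chosen in each iteration, which shows how the two families alternate. The Gram matrix is rebuilt in every iteration for brevity; a practical version would factor it once and reuse it.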
