Knowledge Enhanced Neural Networks for relational domains

Paper and Code

May 31, 2022

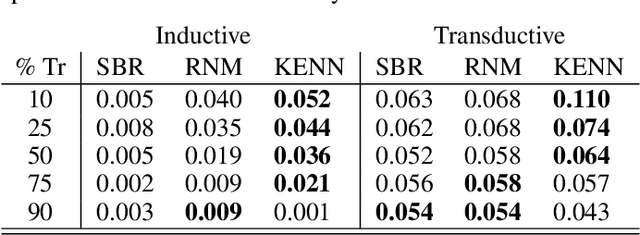

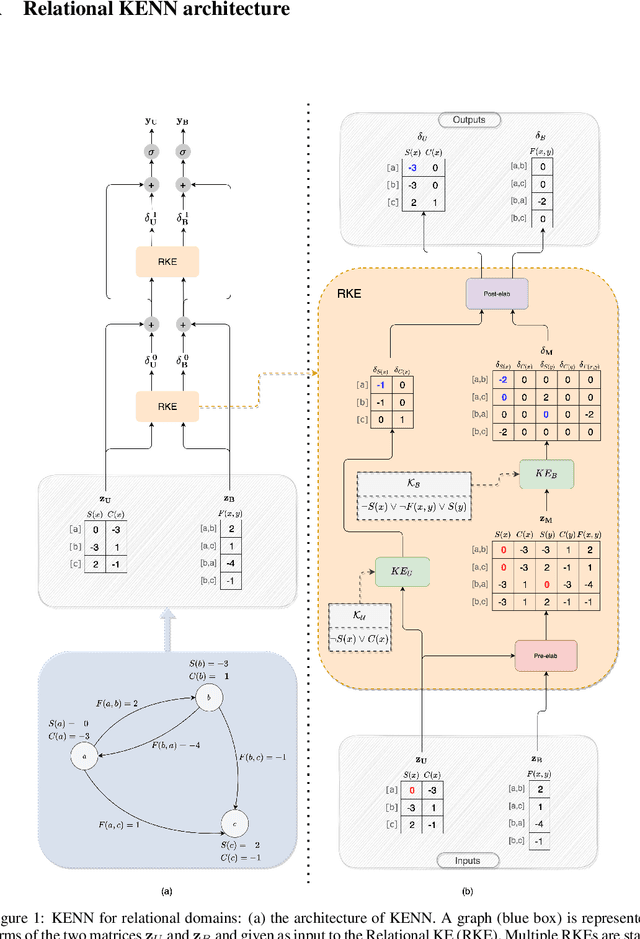

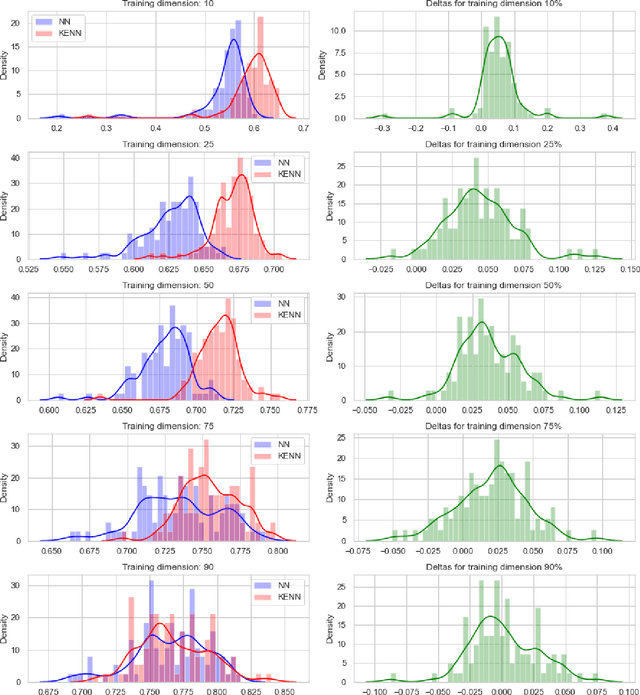

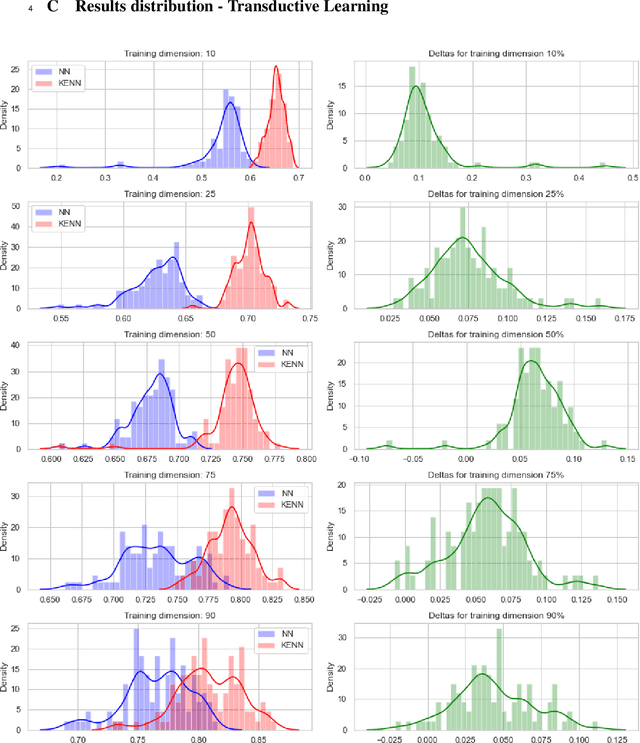

In the recent past, there has been a growing interest in Neural-Symbolic Integration frameworks, i.e., hybrid systems that integrate connectionist and symbolic approaches to obtain the best of both worlds. In this work we focus on a specific method, KENN (Knowledge Enhanced Neural Networks), a Neural-Symbolic architecture that injects prior logical knowledge into a neural network by adding on its top a residual layer that modifies the initial predictions accordingly to the knowledge. Among the advantages of this strategy, there is the inclusion of clause weights, learnable parameters that represent the strength of the clauses, meaning that the model can learn the impact of each rule on the final predictions. As a special case, if the training data contradicts a constraint, KENN learns to ignore it, making the system robust to the presence of wrong knowledge. In this paper, we propose an extension of KENN for relational data. One of the main advantages of KENN resides in its scalability, thanks to a flexible treatment of dependencies between the rules obtained by stacking multiple logical layers. We show experimentally the efficacy of this strategy. The results show that KENN is capable of increasing the performances of the underlying neural network, obtaining better or comparable accuracies in respect to other two related methods that combine learning with logic, requiring significantly less time for learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge