Knowledge Distillation $\approx$ Label Smoothing: Fact or Fallacy?


Feb 06, 2023

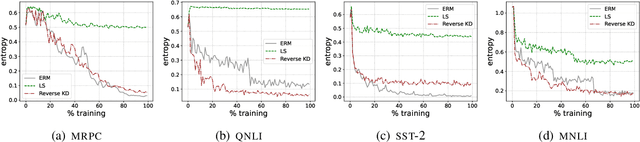

Contrary to its original interpretation as a facilitator of knowledge transfer from one model to another, some recent studies have suggested that knowledge distillation (KD) is instead a form of regularization. Perhaps the strongest support for this claim comes from KD's apparent similarity to label smoothing (LS). This paper investigates the stated equivalence of the two methods by examining the predictive uncertainties of the models they train. Experiments on four text classification tasks involving teachers and students of different capacities show that: (a) in most settings, KD and LS drive model uncertainty (entropy) in completely opposite directions, and (b) in KD, the student's predictive uncertainty is a direct function of that of its teacher, reinforcing the knowledge-transfer view.
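To make the claimed similarity concrete, here is a minimal sketch of the training targets each method produces. It assumes common textbook formulations, not the paper's exact setup. LS mixes the one-hot label with a uniform distribution (weight `eps`). KD is simplified here to a single mixed target that blends the one-hot label with the teacher's temperature-softened distribution (weight `alpha`). The widely used KD loss instead adds a separate KL term with the temperature applied to both teacher and student, so treat this as an approximation. All function names and numeric values are hypothetical. Predictive uncertainty is measured as Shannon entropy, the quantity the abstract refers to.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracts the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: List[float]) -> float:
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def label_smoothing_target(label: int, num_classes: int, eps: float) -> List[float]:
    """LS (assumed standard form): (1 - eps) * one-hot + eps * uniform."""
    return [(1.0 - eps) * (1.0 if k == label else 0.0) + eps / num_classes
            for k in range(num_classes)]


def kd_target(label: int, teacher_logits: List[float],
              alpha: float, temperature: float) -> List[float]:
    """Simplified KD (hypothetical mixed-target form):
    (1 - alpha) * one-hot + alpha * softmax(teacher_logits / temperature)."""
    teacher = softmax(teacher_logits, temperature)
    return [(1.0 - alpha) * (1.0 if k == label else 0.0) + alpha * p
            for k, p in enumerate(teacher)]


if __name__ == "__main__":
    label, num_classes = 0, 4
    ls = label_smoothing_target(label, num_classes, eps=0.1)
    # A teacher with identical logits yields a uniform distribution,
    # so the KD target collapses to the LS target.
    kd_uniform = kd_target(label, [0.0] * num_classes, alpha=0.1, temperature=2.0)
    # A non-uniform (hypothetical) teacher spreads mass unevenly over wrong classes.
    kd_real = kd_target(label, [3.0, 1.0, 0.5, -1.0], alpha=0.1, temperature=2.0)
    print("LS target:       ", [round(p, 4) for p in ls])
    print("KD (uniform):    ", [round(p, 4) for p in kd_uniform])
    print("KD (real teacher):", [round(p, 4) for p in kd_real])
    print("entropy LS vs KD:", round(entropy(ls), 4), round(entropy(kd_real), 4))
```

With a uniform teacher the two targets coincide, which is the surface resemblance behind the equivalence claim. A real teacher assigns instance-specific, non-uniform mass to incorrect classes, which is where the methods can diverge. This sketch only constructs targets; it does not reproduce the paper's entropy measurements on trained models.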
