Knowledge-based Reasoning and Learning under Partial Observability in Ad Hoc Teamwork

Paper and Code

Jun 01, 2023

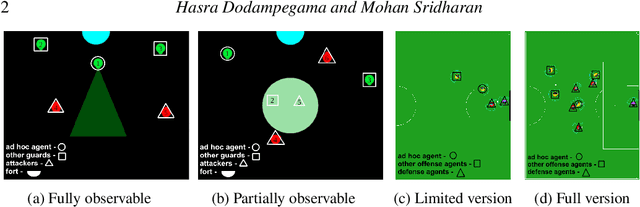

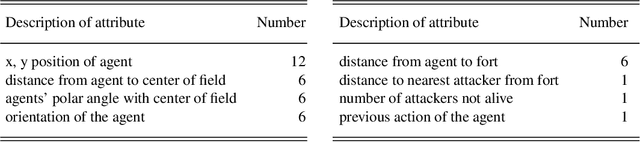

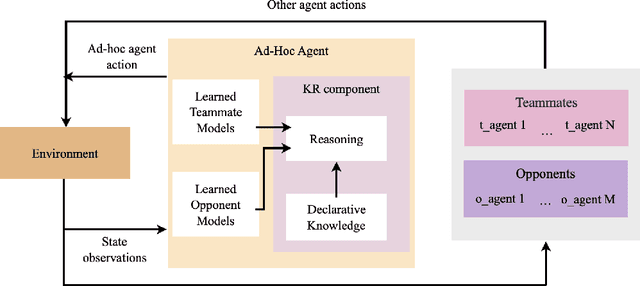

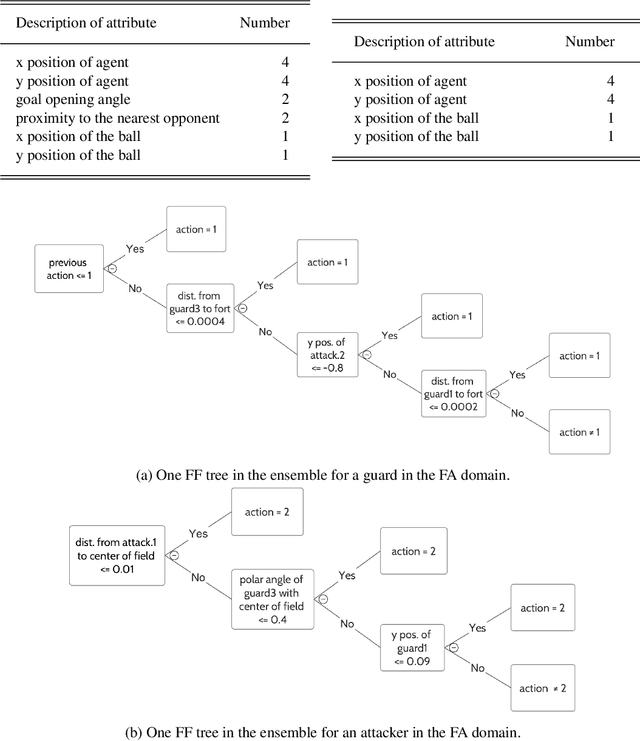

Ad hoc teamwork refers to the problem of enabling an agent to collaborate with teammates without prior coordination. Data-driven methods represent the state of the art in ad hoc teamwork. They use a large labeled dataset of prior observations to model the behavior of other agent types and to determine the ad hoc agent's behavior. These methods are computationally expensive, lack transparency, and make it difficult to adapt to previously unseen changes, e.g., in team composition. Our recent work introduced an architecture that determined an ad hoc agent's behavior based on non-monotonic logical reasoning with prior commonsense domain knowledge and predictive models of other agents' behavior that were learned from limited examples. In this paper, we substantially expand the architecture's capabilities to support: (a) online selection, adaptation, and learning of the models that predict the other agents' behavior; and (b) collaboration with teammates in the presence of partial observability and limited communication. We illustrate and experimentally evaluate the capabilities of our architecture in two simulated multiagent benchmark domains for ad hoc teamwork: Fort Attack and Half Field Offense. We show that the performance of our architecture is comparable or better than state of the art data-driven baselines in both simple and complex scenarios, particularly in the presence of limited training data, partial observability, and changes in team composition.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge