KITE: A Kernel-based Improved Transferability Estimation Method


May 01, 2024

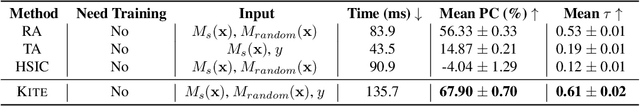

Transferability estimation has emerged as an important problem in transfer learning. A transferability estimation method takes a set of pre-trained models as input and decides which one can deliver the best transfer learning performance. Existing methods tackle this problem by analyzing the output of the pre-trained model or by comparing the pre-trained model with a probe model trained on the target dataset. However, neither approach is sufficient to provide reliable and efficient transferability estimates. In this paper, we present a novel perspective and introduce Kite, a Kernel-based Improved Transferability Estimation method. Kite is based on the key observation that the separability of the pre-trained features and the similarity of the pre-trained features to random features are two important factors for estimating transferability. Inspired by kernel methods, Kite adopts centered kernel alignment as an effective way to assess feature separability and feature similarity. Kite is easy to interpret, fast to compute, and robust to the target dataset size. We evaluate the performance of Kite on a recently introduced large-scale model selection benchmark. The benchmark contains 8 source datasets, 6 target datasets, and 4 architectures, for a total of 32 pre-trained models. Extensive results show that Kite outperforms existing methods by a large margin for transferability estimation.
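To make the two quantities named in the abstract concrete, the following is a minimal sketch of centered kernel alignment (CKA) with linear kernels, applied once to features versus labels (separability) and once to features versus random features (similarity). It is not the authors' implementation: the helper names `center_gram`, `linear_kernel`, and `cka`, the choice of a linear kernel, and the synthetic data are all assumptions made for illustration, and the abstract does not say how Kite combines the two values into a final score, so none is computed here.

```python
import numpy as np


def center_gram(K):
    """Double-center a Gram matrix: H K H with H = I - (1/n) 11^T."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return H @ K @ H


def linear_kernel(X):
    """Linear Gram matrix for samples stored as rows of X (an assumed kernel choice)."""
    return X @ X.T


def cka(K, L):
    """Centered kernel alignment between two Gram matrices of the same size."""
    Kc = center_gram(K)
    Lc = center_gram(L)
    # Frobenius inner product normalized by the Frobenius norms.
    return np.sum(Kc * Lc) / (np.linalg.norm(Kc) * np.linalg.norm(Lc))


# Synthetic stand-ins (hypothetical data, not from the paper).
rng = np.random.default_rng(0)
n, d, num_classes = 60, 16, 3
labels = rng.integers(0, num_classes, size=n)
one_hot = np.eye(num_classes)[labels]

# "Pre-trained" features: a class-dependent mean plus unit noise.
class_means = 3.0 * rng.normal(size=(num_classes, d))
features = one_hot @ class_means + rng.normal(size=(n, d))

# Random features standing in for the output of a randomly initialized model.
random_features = rng.normal(size=(n, d))

# Separability: alignment between the feature kernel and the label kernel.
separability = cka(linear_kernel(features), linear_kernel(one_hot))

# Similarity: alignment between the feature kernel and the random-feature kernel.
similarity_to_random = cka(linear_kernel(features), linear_kernel(random_features))

print(f"separability (features vs. labels): {separability:.3f}")
print(f"similarity (features vs. random):   {similarity_to_random:.3f}")
```

Both values lie in [0, 1] for positive semidefinite kernels, and the computation needs only Gram matrices over the target samples, which is consistent with the abstract's claim that the estimate is fast to compute.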
