Kernel Two-Sample Tests in High Dimension: Interplay Between Moment Discrepancy and Dimension-and-Sample Orders

Dec 31, 2021

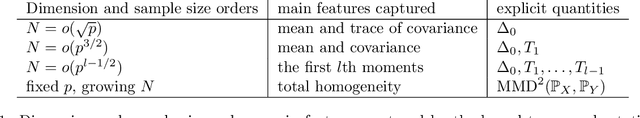

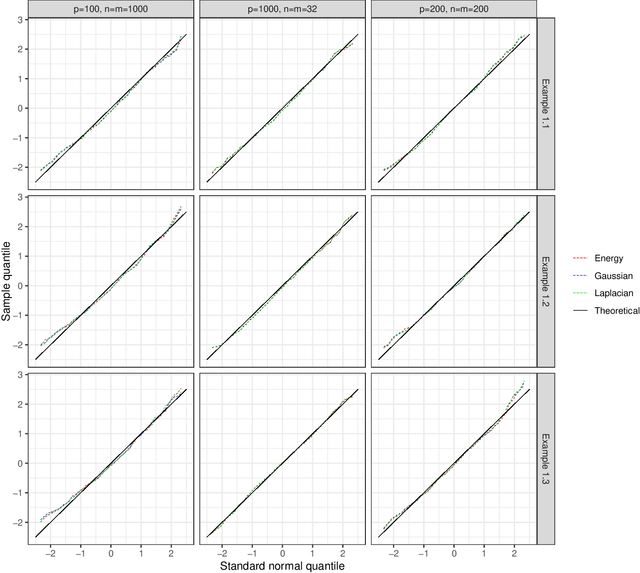

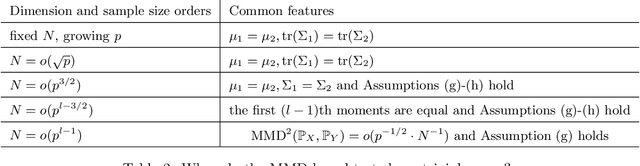

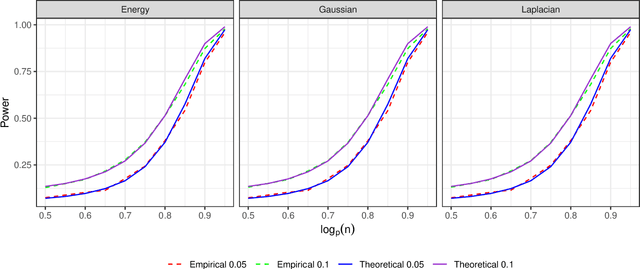

Motivated by the increasing use of kernel-based metrics for high-dimensional and large-scale data, we study the asymptotic behavior of kernel two-sample tests when the dimension and the sample sizes both diverge to infinity. We focus on the maximum mean discrepancy (MMD) with kernels of the form $k(x,y)=f(\|x-y\|_{2}^{2}/\gamma)$, which includes MMD with the Gaussian kernel, MMD with the Laplacian kernel, and the energy distance as special cases. We derive asymptotic expansions of the kernel two-sample statistics, based on which we establish the central limit theorem (CLT) under the null hypothesis as well as under local and fixed alternatives. The new non-null CLT results allow us to perform an asymptotically exact power analysis, which reveals a delicate interplay between the moment discrepancy that can be detected by the kernel two-sample tests and the dimension-and-sample orders. The asymptotic theory is further corroborated through numerical studies. Our findings complement those in the recent literature and shed new light on the use of kernel two-sample tests for high-dimensional and large-scale data.
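
To make the kernel family $k(x,y)=f(\|x-y\|_{2}^{2}/\gamma)$ concrete, the following is a minimal sketch of the standard unbiased (U-statistic) estimator of the squared MMD. It is not the authors' code and does not reproduce the studentized statistic or the CLT-based test from the paper. The function names `sq_dists` and `mmd2_unbiased`, the sample sizes, and the bandwidth choice `gamma = 2 * p` in the usage lines are assumptions made for illustration only. Choosing $f(s)=e^{-s}$ gives a Gaussian-type kernel, $f(s)=e^{-\sqrt{s}}$ a Laplacian-type kernel, and $f(s)=-\sqrt{s}$ with $\gamma=1$ recovers the energy distance.

```python
import numpy as np


def sq_dists(a, b):
    """Pairwise squared Euclidean distances ||a_i - b_j||_2^2."""
    aa = np.sum(a * a, axis=1)[:, None]
    bb = np.sum(b * b, axis=1)[None, :]
    d = aa + bb - 2.0 * (a @ b.T)
    # Clip tiny negative values caused by floating-point cancellation.
    return np.maximum(d, 0.0)


def mmd2_unbiased(x, y, gamma, f=lambda s: np.exp(-s)):
    """Unbiased estimate of MMD^2 for the kernel k(x, y) = f(||x - y||^2 / gamma).

    x has shape (n, p), y has shape (m, p); n and m must be at least 2.
    The default f is an illustrative Gaussian-type choice, not the paper's setting.
    """
    n, m = x.shape[0], y.shape[0]
    kxx = f(sq_dists(x, x) / gamma)
    kyy = f(sq_dists(y, y) / gamma)
    kxy = f(sq_dists(x, y) / gamma)
    # Within-sample averages exclude the diagonal (i == j) terms.
    term_xx = (kxx.sum() - np.trace(kxx)) / (n * (n - 1))
    term_yy = (kyy.sum() - np.trace(kyy)) / (m * (m - 1))
    # The cross-sample average uses all n * m pairs.
    return term_xx + term_yy - 2.0 * kxy.mean()


# Hypothetical usage: two Gaussian samples in dimension p with a mean shift.
rng = np.random.default_rng(0)
p = 50
x = rng.standard_normal((200, p))
y = rng.standard_normal((200, p)) + 0.5
gaussian_mmd2 = mmd2_unbiased(x, y, gamma=2 * p)
energy = mmd2_unbiased(x, y, gamma=1.0, f=lambda s: -np.sqrt(s))
```

Because the diagonal terms are excluded, the estimate can be slightly negative when the two samples come from the same distribution; that is expected for an unbiased estimator of a quantity that is zero under the null.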
