Judge a Sentence by Its Content to Generate Grammatical Errors

Aug 20, 2022

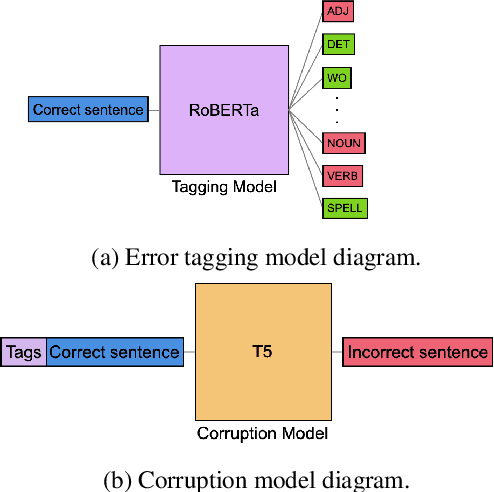

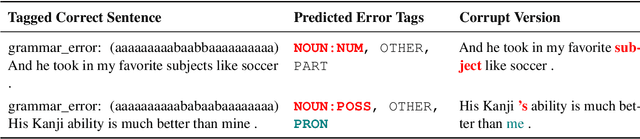

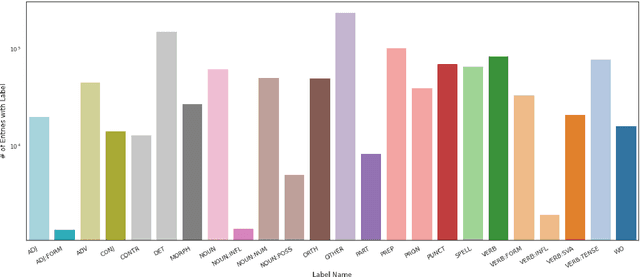

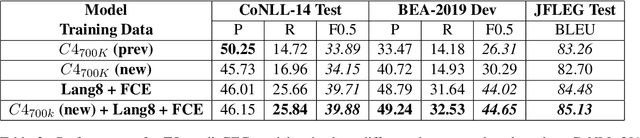

Data sparsity is a well-known problem for grammatical error correction (GEC). Generating synthetic training data is a widely proposed solution, and it has allowed models to achieve state-of-the-art (SOTA) performance in recent years. However, existing generation methods often produce unrealistic errors or aim to generate sentences with only one error. We propose a learning-based, two-stage method for synthetic data generation for GEC that relaxes the constraint that each sentence contain only one error. Errors are generated in accordance with sentence merit. We show that a GEC model trained on our synthetically generated corpus outperforms models trained on synthetic data from prior work.
