Joint Learning-Based Stabilization of Multiple Unknown Linear Systems

Paper and Code

Jan 01, 2022

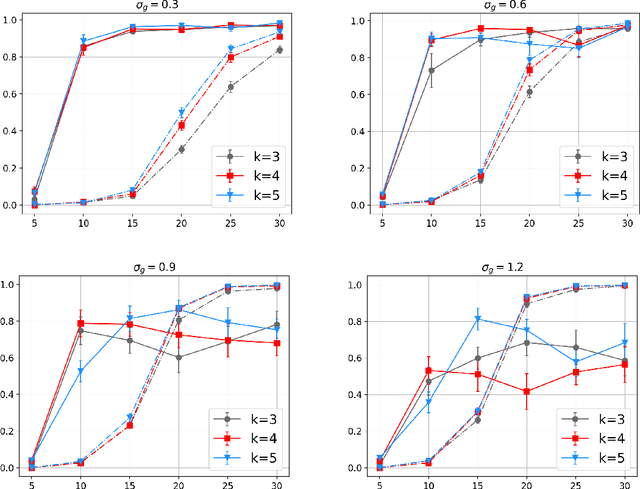

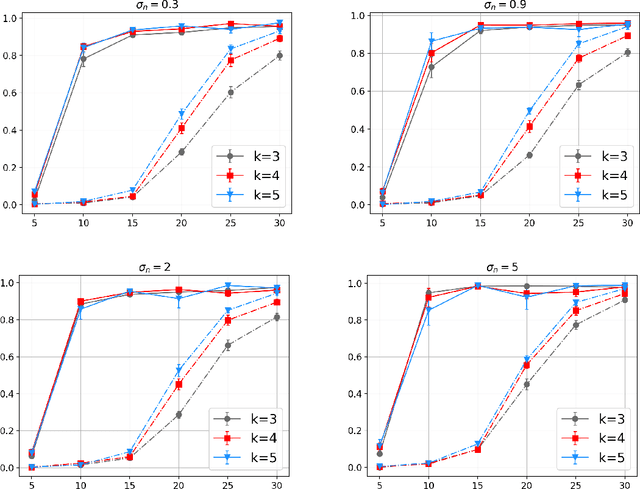

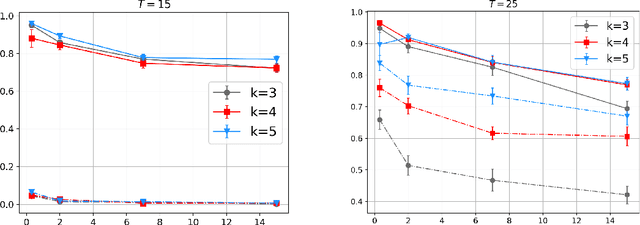

Learning-based control of linear systems has received a lot of attention recently. In popular settings, the true dynamical models are unknown to the decision-maker and must be learned interactively by applying control inputs to the systems. Unlike the mature literature on efficient reinforcement learning policies for adaptive control of a single system, results on joint learning of multiple systems are not currently available. In particular, the important problem of fast and reliable joint stabilization remains unaddressed and is therefore the focus of this work. We propose a novel joint learning-based stabilization algorithm that quickly learns stabilizing policies for all systems under study from the data of unstable state trajectories. The presented procedure is shown to be notably effective, stabilizing the family of dynamical systems in an extremely short time period.
