Investigating the influence of noise and distractors on the interpretation of neural networks


Nov 22, 2016

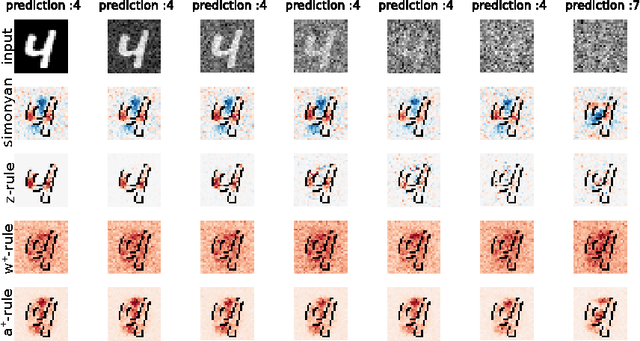

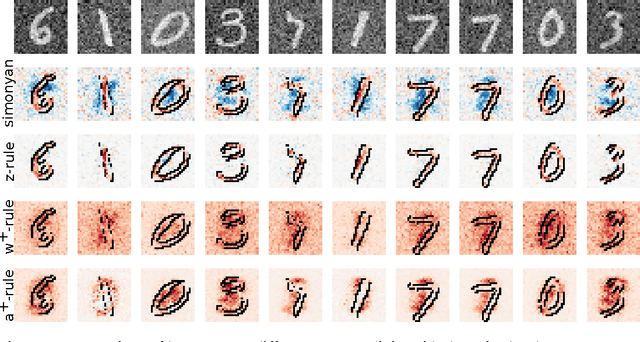

Understanding neural networks is becoming increasingly important. Over the last few years, different types of visualisation and explanation methods have been proposed. However, none of them explicitly considered their behaviour in the presence of noise and distracting elements. In this work, we show how noise and distracting dimensions can influence the result of an explanation model. This gives new theoretical insights to aid selection of the most appropriate explanation model within the deep-Taylor decomposition framework.

* Presented at NIPS 2016 Workshop on Interpretable Machine Learning in Complex Systems
