Intersectionality: Multiple Group Fairness in Expectation Constraints

Nov 25, 2018

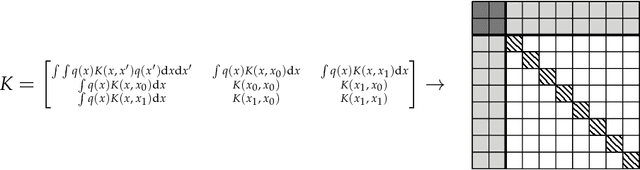

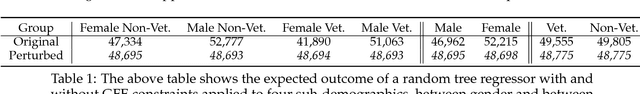

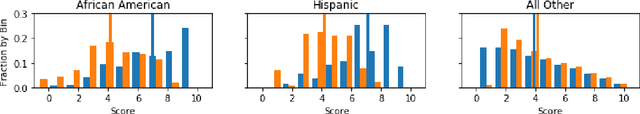

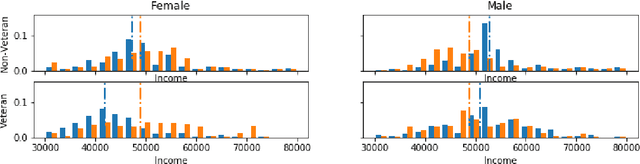

Group fairness is an important concern for machine learning researchers, developers, and regulators. However, how strictly a model must be constrained before it is considered fair is still under debate. This work focuses on constraining the expected outcome of subpopulations in kernel regression and, in particular, in decision tree regression, with application to random forests, boosted trees, and other ensemble models. Individual constraints have been addressed previously; this work addresses how to incorporate multiple constraints simultaneously. The proposed solution does not change the order of the computational or memory complexity of the decision trees and is easily integrated into models after training.

* NeurIPS (previously NIPS) 2018, Workshop on Ethical, Social and Governance Issues in AI
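
To make the abstract's idea concrete, here is a minimal sketch of enforcing several group-expectation constraints on an already fitted regression tree. It is an illustration under stated assumptions, not the paper's algorithm: it assumes the tree is summarized by its leaf values and the leaf index of each sample, that "fair in expectation" means each group's mean prediction equals the overall mean prediction, and that the correction is a Euclidean projection of the leaf values. The function name `constrain_leaf_values` and all variable names are hypothetical.

```python
import numpy as np

def constrain_leaf_values(leaf_values, leaf_ids, group_masks):
    """Adjust leaf values so each group's mean prediction equals the overall mean.

    Assumed setup (not from the paper): only the leaf values change,
    so the tree structure and prediction cost stay the same.
    """
    leaf_values = np.asarray(leaf_values, dtype=float)
    n_leaves = leaf_values.shape[0]
    # Fraction of all samples that fall in each leaf.
    p_all = np.bincount(leaf_ids, minlength=n_leaves) / len(leaf_ids)
    rows = []
    for mask in group_masks:
        # Fraction of this group's samples that fall in each leaf.
        p_group = np.bincount(leaf_ids[mask], minlength=n_leaves) / mask.sum()
        # (p_group - p_all) @ values == 0 means group mean == overall mean.
        rows.append(p_group - p_all)
    A = np.vstack(rows)  # one linear constraint per group
    # Smallest Euclidean change to the leaf values that satisfies A @ values == 0.
    return leaf_values - np.linalg.pinv(A) @ (A @ leaf_values)

# Toy data: 4 leaves, 200 samples, two overlapping group memberships.
rng = np.random.default_rng(0)
n = 200
leaf_ids = rng.integers(0, 4, size=n)
leaf_values = np.array([1.0, 2.0, 4.0, 8.0])
group_a = rng.random(n) < 0.3
group_b = rng.random(n) < 0.5

new_values = constrain_leaf_values(leaf_values, leaf_ids, [group_a, group_b])
pred = new_values[leaf_ids]  # predictions of the adjusted tree
```

Because all constraints are stacked into one matrix, they are satisfied simultaneously rather than one at a time, and samples that belong to several groups need no special handling. For an ensemble, the same adjustment could in principle be applied per tree, though that is also an assumption of this sketch.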
