Interpreting Medical Image Classifiers by Optimization Based Counterfactual Impact Analysis


Apr 03, 2020

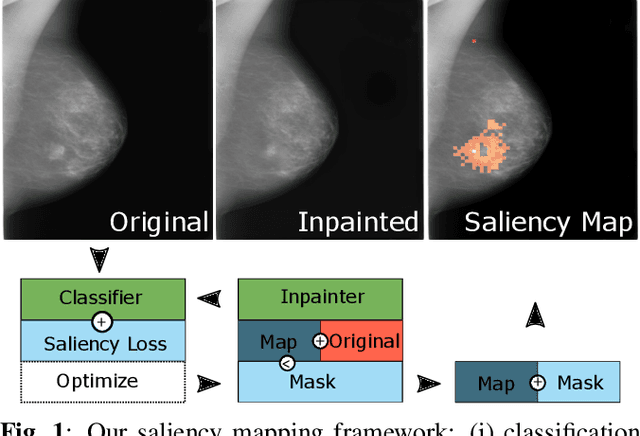

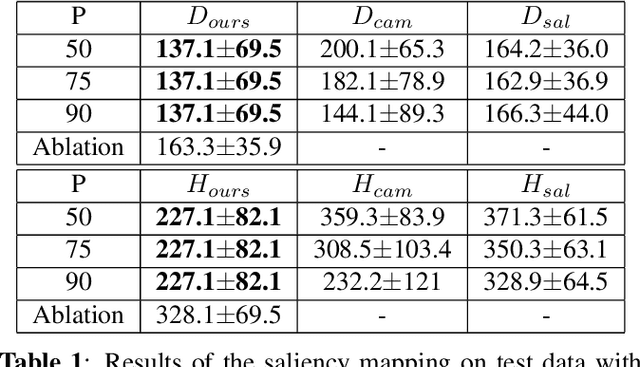

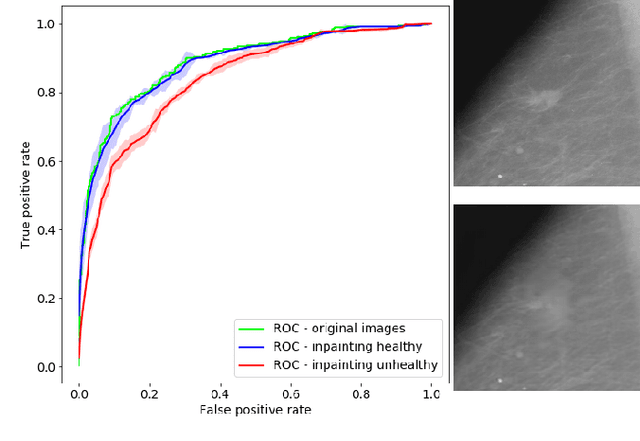

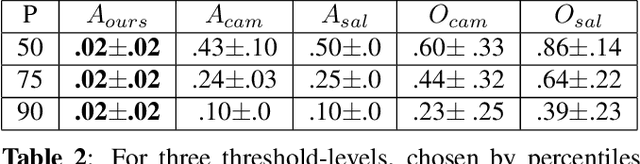

Clinical applicability of automated decision support systems depends on a robust, well-understood interpretation of their classifications. Artificial neural networks, while achieving class-leading scores, fall short in this regard. Numerous approaches have therefore been proposed that map a salient region of an image to a diagnostic classification. Because they rely on heuristics such as blurring and noise, they tend to produce diffuse, sometimes misleading results, which hinders their general adoption. In this work, we overcome these issues by presenting a model-agnostic saliency mapping framework tailored to medical imaging. We replace heuristic techniques with a strong neighborhood-conditioned inpainting approach, which avoids anatomically implausible artefacts. We formulate saliency attribution as a map-quality optimization task, enforcing constrained and focused attributions. Experiments on public mammography data show, both quantitatively and qualitatively, more precise localization and clearer results than existing state-of-the-art methods.
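
The idea of scoring a region by how much the classification changes once that region is inpainted can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the paper: `toy_classifier` is a hypothetical stand-in for a trained network, `inpaint_patch` swaps the learned inpainting model for a crude fill with the mean of the surrounding ring of pixels, and `best_counterfactual_patch` replaces the optimization procedure with an exhaustive search over square patches, using a hypothetical `area_weight` penalty to favor small, focused regions.

```python
import numpy as np

def toy_classifier(image):
    # Hypothetical stand-in for a trained model:
    # the score is the mean of the 16 brightest pixels.
    return float(np.sort(image.ravel())[-16:].mean())

def inpaint_patch(image, top, left, size):
    # Crude neighborhood-conditioned fill (an assumption, not the paper's
    # inpainting network): replace the patch with the mean of the
    # one-pixel ring that surrounds it.
    h, w = image.shape
    r0, r1 = max(top - 1, 0), min(top + size + 1, h)
    c0, c1 = max(left - 1, 0), min(left + size + 1, w)
    window = image[r0:r1, c0:c1]
    patch = image[top:top + size, left:left + size]
    ring_mean = (window.sum() - patch.sum()) / (window.size - patch.size)
    out = image.copy()
    out[top:top + size, left:left + size] = ring_mean
    return out

def best_counterfactual_patch(image, classifier, sizes=(4, 8), area_weight=1.0):
    # Exhaustive search over non-overlapping square patches. The objective
    # rewards a large drop in classifier score and penalizes patch area.
    h, w = image.shape
    base = classifier(image)
    best = None
    for size in sizes:
        for top in range(0, h - size + 1, size):
            for left in range(0, w - size + 1, size):
                counterfactual = inpaint_patch(image, top, left, size)
                drop = base - classifier(counterfactual)
                objective = drop - area_weight * (size * size) / (h * w)
                if best is None or objective > best[0]:
                    best = (objective, top, left, size, drop)
    return best

# Synthetic 16x16 "scan": uniform background with one bright 4x4 region.
image = np.full((16, 16), 0.2)
image[4:8, 8:12] = 1.0

objective, top, left, size, drop = best_counterfactual_patch(image, toy_classifier)
print(f"salient patch: top={top}, left={left}, size={size}, score drop={drop:.2f}")
```

In this toy setting the 8x8 patch that contains the bright region lowers the score just as much as the 4x4 patch that matches it exactly, so the area penalty is what makes the search prefer the tighter region.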
