Interpreting Active Learning Methods Through Information Losses


Feb 25, 2019

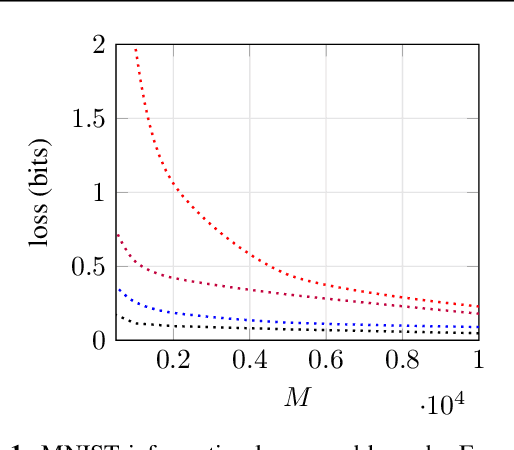

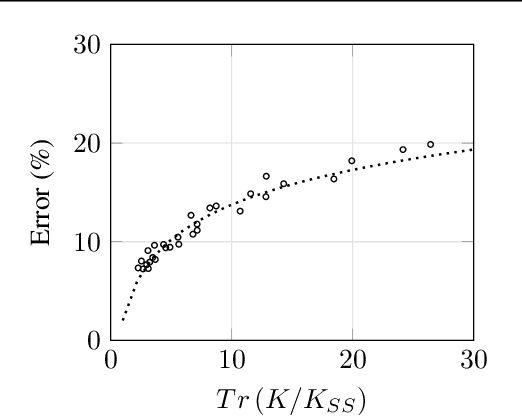

We propose a new way of interpreting active learning methods by analyzing the information 'lost' upon sampling a random variable. Using recent analytical results on these losses, we formally prove that facility location methods reduce them under mild assumptions, and we derive a new data-dependent bound on information losses that can be used to evaluate other active learning methods. We show that this bound is extremely tight in experiments, and further that it has reasonable predictive power for classification accuracy.
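
For readers unfamiliar with facility location methods, the following is a minimal sketch of greedy facility-location selection for picking a subset of points to label. It is not taken from the paper: the function name `greedy_facility_location`, the similarity (a shifted negative squared Euclidean distance), and the greedy maximization of the sum over points of the maximum similarity to the selected set are all assumptions chosen for illustration.

```python
import numpy as np

def greedy_facility_location(X, k):
    """Greedily pick k row indices of X that maximize
    sum_i max_{j in S} sim(i, j) (hypothetical helper, illustrative only)."""
    # Assumed similarity: squared Euclidean distance flipped to be non-negative.
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    sim = sq_dists.max() - sq_dists

    n = X.shape[0]
    best_cover = np.zeros(n)            # max similarity of each point to the selected set
    chosen = np.zeros(n, dtype=bool)    # marks points that are already selected
    selected = []
    for _ in range(k):
        # Marginal gain in the objective from adding each candidate column j.
        gains = np.maximum(sim, best_cover[:, None]).sum(axis=0) - best_cover.sum()
        gains[chosen] = -np.inf         # never pick the same point twice
        j = int(np.argmax(gains))
        selected.append(j)
        chosen[j] = True
        best_cover = np.maximum(best_cover, sim[:, j])
    return selected
```

On data with two well-separated clusters and `k=2`, this sketch selects one representative from each cluster, which is the intuition behind using such methods to choose points for labeling.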
