Interpretable Discovery in Large Image Data Sets

Jun 21, 2018

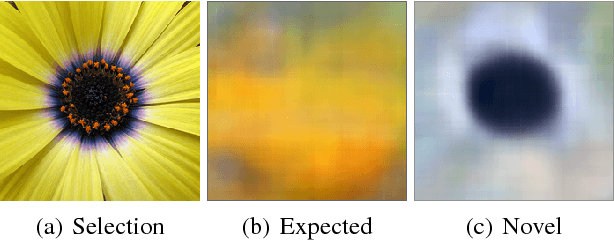

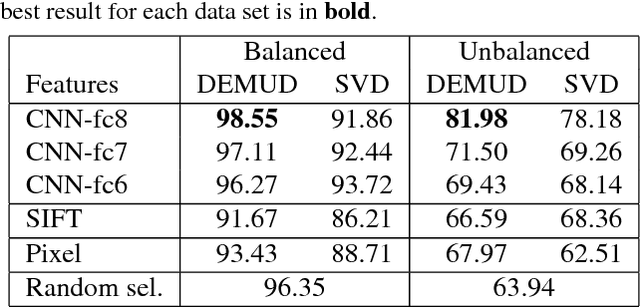

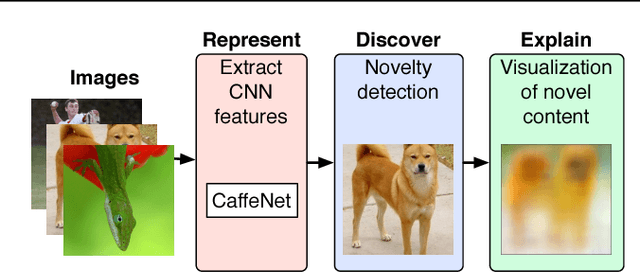

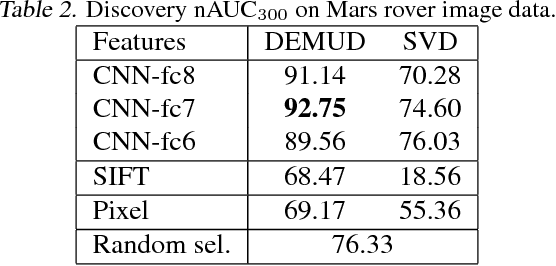

Automated detection of new, interesting, unusual, or anomalous images within large data sets has great value for applications ranging from surveillance (e.g., airport security) to science (where observations that don't fit a given theory can lead to new discoveries). Many image data analysis systems are turning to convolutional neural networks (CNNs) to represent image content because of their success in achieving high classification accuracy. However, CNN representations are notoriously difficult for humans to interpret. We describe a new strategy that combines novelty detection with CNN image features to achieve rapid discovery with interpretable explanations of novel image content. We applied this technique to familiar images from ImageNet as well as to a scientific image collection from planetary science.
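
To make the idea of novelty detection over image features concrete, here is a minimal sketch. It is not the method described in the paper, whose details the abstract does not give. It assumes a generic approach: model the images already seen with a low-rank subspace, score each candidate by its reconstruction error, and read the residual vector as a rough explanation of which features are new. The function names (`fit_subspace`, `novelty_scores`) are hypothetical, and random vectors stand in for real CNN features.

```python
import numpy as np

def fit_subspace(seen, k):
    """Return the mean and top-k principal directions of the seen features."""
    mean = seen.mean(axis=0)
    # Rows of vt are the principal directions of the centered data.
    _, _, vt = np.linalg.svd(seen - mean, full_matrices=False)
    return mean, vt[:k]

def novelty_scores(candidates, mean, basis):
    """Return each candidate's reconstruction error and its residual vector."""
    centered = candidates - mean
    reconstructed = centered @ basis.T @ basis
    residuals = centered - reconstructed
    return np.linalg.norm(residuals, axis=1), residuals

rng = np.random.default_rng(0)

# Assumption: synthetic stand-in for CNN features. 200 "seen" images with
# 16 features each, lying close to a 3-dimensional subspace.
mixing = rng.normal(size=(3, 16))
mixing[:, 7] = 0.0  # feature 7 is essentially inactive in the seen images
seen = rng.normal(size=(200, 3)) @ mixing + 0.01 * rng.normal(size=(200, 16))

# Six candidates drawn the same way; candidate 4 gets a strong response
# in feature 7, which the seen images never exhibit.
candidates = rng.normal(size=(6, 3)) @ mixing + 0.01 * rng.normal(size=(6, 16))
candidates[4, 7] += 5.0

mean, basis = fit_subspace(seen, k=3)
scores, residuals = novelty_scores(candidates, mean, basis)

most_novel = int(np.argmax(scores))
# The largest residual entry points at the feature that the model of
# seen images could not account for.
top_feature = int(np.argmax(np.abs(residuals[most_novel])))
print("most novel candidate:", most_novel, "driven by feature:", top_feature)
```

In this toy setting the residual is the explanation: it isolates the part of the candidate that the seen images cannot reconstruct. With real CNN features, individual dimensions are not directly meaningful to a human, so the residual would still need to be mapped back to something viewable, which is the interpretability problem the abstract raises.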
