Interleaving Learning, with Application to Neural Architecture Search


Mar 12, 2021

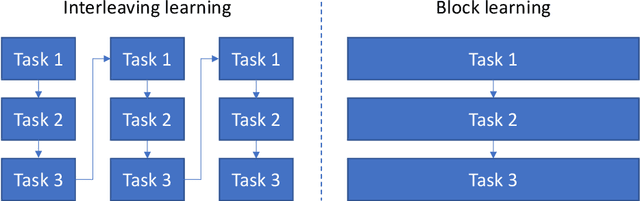

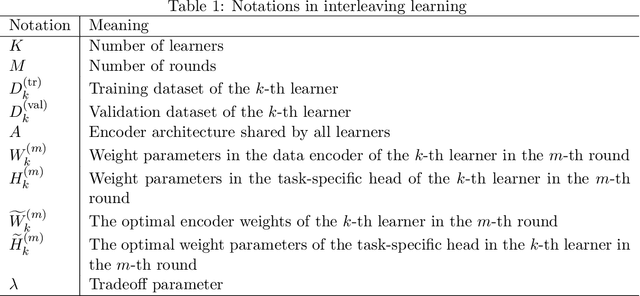

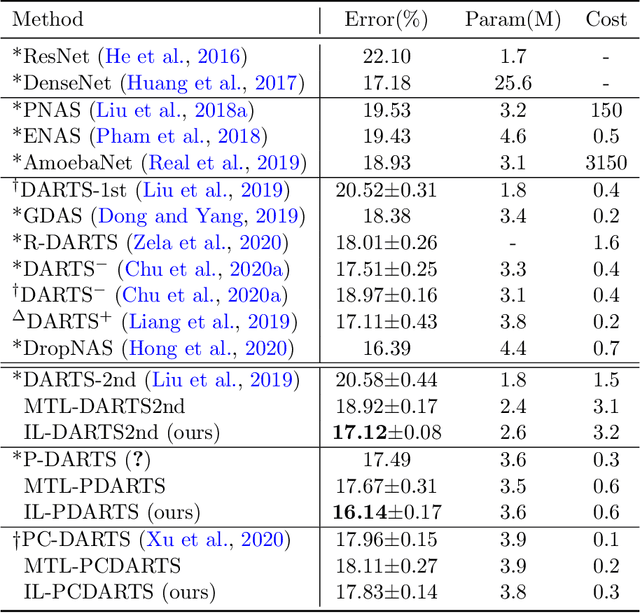

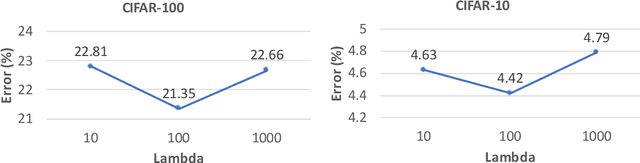

Interleaving learning is a human learning technique in which a learner interleaves the study of multiple topics, which increases long-term retention and improves the ability to transfer learned knowledge. Inspired by this technique, we explore whether it can also improve the performance of machine learning models. We propose a novel machine learning framework referred to as interleaving learning (IL). In our framework, a set of models collaboratively learn a data encoder in an interleaving fashion: the encoder is trained by model 1 for a while, then passed to model 2 for further training, then to model 3, and so on; after being trained by all models, the encoder returns to model 1 and is trained again, then moves on to models 2, 3, etc. This process repeats for multiple rounds. Our framework is based on multi-level optimization consisting of multiple inter-connected learning stages. We develop an efficient gradient-based algorithm to solve the multi-level optimization problem. We apply interleaving learning to search neural architectures for image classification on CIFAR-10, CIFAR-100, and ImageNet. Experimental results demonstrate the effectiveness of our method.
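The round-robin schedule described in the abstract can be illustrated with a minimal sketch. This is not the paper's multi-level optimization algorithm: it only shows the order in which a shared encoder is handed from model to model over several rounds. The names `make_quadratic_task` and `interleaving_schedule`, the toy quadratic objective, and the learning rate are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical type: one training step performed by a single model on the
# shared encoder parameters, returning the updated parameters.
TrainStep = Callable[[List[float]], List[float]]


def make_quadratic_task(target: List[float], lr: float = 0.1) -> TrainStep:
    """Toy stand-in for 'model k trains the encoder'.

    Performs one gradient step on 0.5 * ||w - target||^2. This objective is
    an assumption for illustration, not the loss used in the paper.
    """
    def step(w: List[float]) -> List[float]:
        return [wi - lr * (wi - ti) for wi, ti in zip(w, target)]
    return step


def interleaving_schedule(
    encoder: List[float],
    tasks: List[TrainStep],
    rounds: int,
    steps_per_task: int,
) -> Tuple[List[float], List[int]]:
    """Pass the encoder through every model in turn, for several rounds.

    Returns the final encoder parameters and the order in which the
    models were visited.
    """
    visited: List[int] = []
    for _ in range(rounds):
        for k, step in enumerate(tasks):
            # Model k trains the shared encoder "for a while".
            for _ in range(steps_per_task):
                encoder = step(encoder)
            visited.append(k)
    return encoder, visited


# Three toy models sharing a two-parameter encoder, trained for two rounds.
tasks = [
    make_quadratic_task([1.0, 0.0]),
    make_quadratic_task([0.0, 1.0]),
    make_quadratic_task([1.0, 1.0]),
]
final_encoder, order = interleaving_schedule(
    [0.0, 0.0], tasks, rounds=2, steps_per_task=5
)
print(order)  # [0, 1, 2, 0, 1, 2]
print(final_encoder)
```

In this simplified view each model only applies plain gradient steps to the encoder; the framework in the abstract instead couples the stages through multi-level optimization, which this sketch does not attempt to reproduce.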
