Intelligent Meta-Imagers: From Compressed to Learned Sensing


Oct 28, 2021

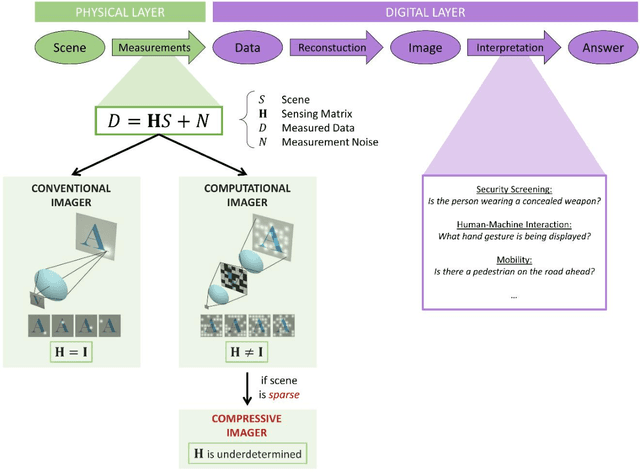

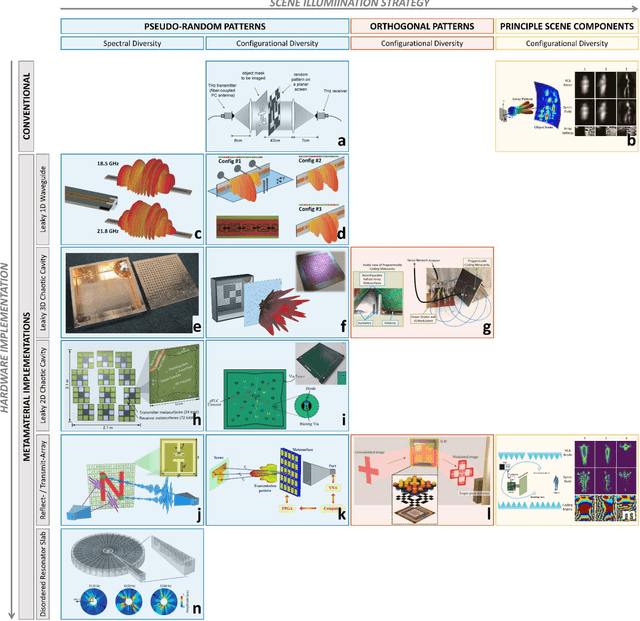

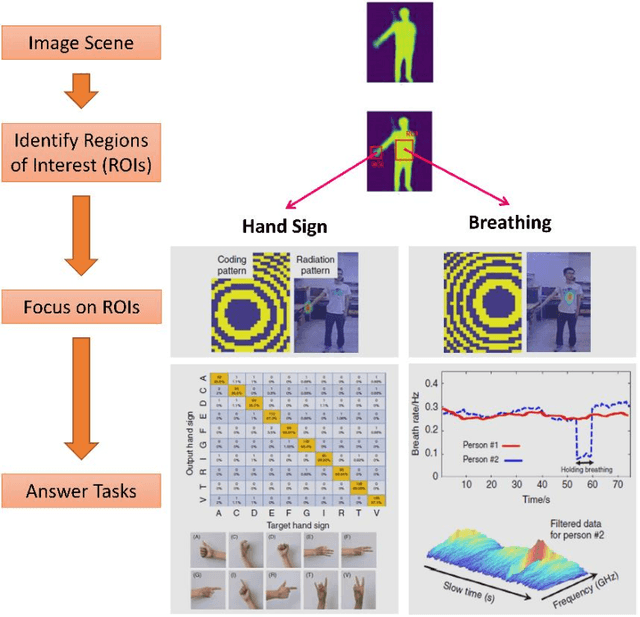

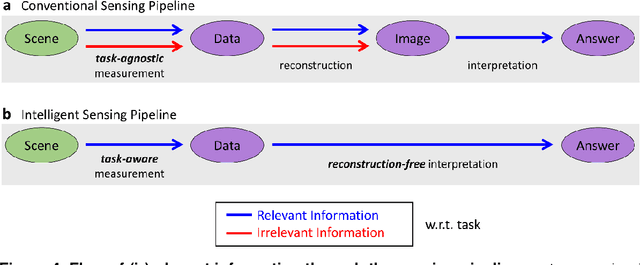

Computational meta-imagers synergize metamaterial hardware with advanced signal processing approaches such as compressed sensing. Recent advances in artificial intelligence (AI) are gradually reshaping the landscape of meta-imaging. Most recent works use AI for data analysis, but some also use it to program the physical meta-hardware. The role of "intelligence" in the measurement process and its implications for critical metrics like latency are often not immediately clear. Here, we comprehensively review the evolution of computational meta-imaging from the earliest frequency-diverse compressive systems to modern programmable intelligent meta-imagers. We introduce a clear taxonomy in terms of the flow of task-relevant information that has direct links to information theory: compressive meta-imagers indiscriminately acquire all scene information in a task-agnostic measurement process that aims at a near-isometric embedding; intelligent meta-imagers highlight task-relevant information in a task-aware measurement process that is purposefully non-isometric. We provide explicit design tutorials for the integration of programmable meta-atoms as trainable physical weights into an intelligent end-to-end sensing pipeline. This merging of the physical world of metamaterial engineering and the digital world of AI enables the remarkable latency gains of intelligent meta-imagers. We further outline emerging opportunities for cognitive meta-imagers with reverberation-enhanced resolution and we point out how the meta-imaging community can reap recent advances in the vibrant field of metamaterial wave processors to reach the holy grail of low-energy ultra-fast all-analog intelligent meta-sensors.
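The distinction between a near-isometric, task-agnostic measurement and a purposefully non-isometric, task-aware one can be illustrated with a toy linear model. The sketch below is not taken from the paper: it assumes a purely linear measurement, a scene dimension of 64, 32 random Gaussian measurement rows for the compressive case, and a hypothetical task whose relevant information lies entirely in the first scene coordinate. All names (`compressive`, `task_aware`, `distance_ratio`) are illustrative.

```python
import math
import random


def measure(rows, x):
    """Apply a linear measurement: one inner product per measurement row."""
    return [sum(r * v for r, v in zip(row, x)) for row in rows]


def norm(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(c * c for c in v))


def distance_ratio(rows, x, y):
    """Measured distance divided by scene distance for one pair of scenes."""
    diff = [a - b for a, b in zip(x, y)]
    return norm(measure(rows, diff)) / norm(diff)


rng = random.Random(0)
n, m = 64, 32  # toy scene dimension and number of compressive measurements

# Task-agnostic (compressive): i.i.d. Gaussian rows scaled by 1/sqrt(m), so
# squared distances are preserved in expectation for every pair of scenes.
compressive = [[rng.gauss(0, 1) / math.sqrt(m) for _ in range(n)] for _ in range(m)]

# Task-aware (assumed task): a single measurement aligned with the one
# coordinate that carries task-relevant information.
task_aware = [[1.0] + [0.0] * (n - 1)]

# Reference scene, a scene differing only in the task-relevant coordinate,
# and a scene differing only in the task-irrelevant (nuisance) coordinates.
base = [rng.gauss(0, 1) for _ in range(n)]
task_shift = [base[0] + 1.0] + base[1:]
nuisance_shift = [base[0]] + [b + rng.gauss(0, 1) for b in base[1:]]

ratios = {
    "compressive/task": distance_ratio(compressive, base, task_shift),
    "compressive/nuisance": distance_ratio(compressive, base, nuisance_shift),
    "task_aware/task": distance_ratio(task_aware, base, task_shift),
    "task_aware/nuisance": distance_ratio(task_aware, base, nuisance_shift),
}

for name, value in ratios.items():
    print(f"{name}: {value:.3f}")
```

In this toy setting the random measurement keeps both distance ratios roughly near one, using 32 measurements, whereas the single task-aware measurement keeps the task-relevant distance and collapses the nuisance distance to zero. A real intelligent meta-imager would learn its measurement patterns jointly with the digital processing rather than fix them by hand as done here.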
