Integrative CAM: Adaptive Layer Fusion for Comprehensive Interpretation of CNNs


Dec 02, 2024

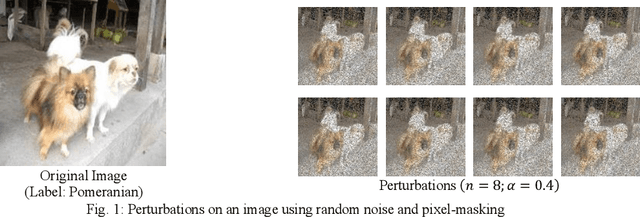

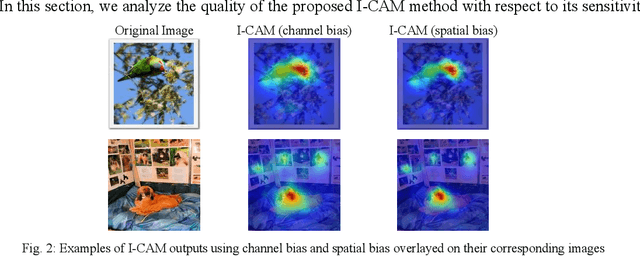

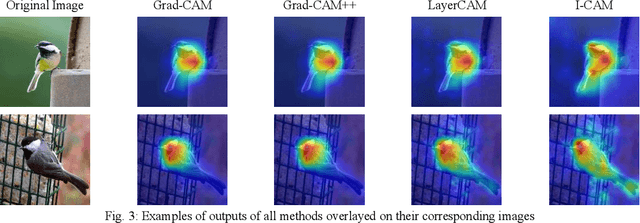

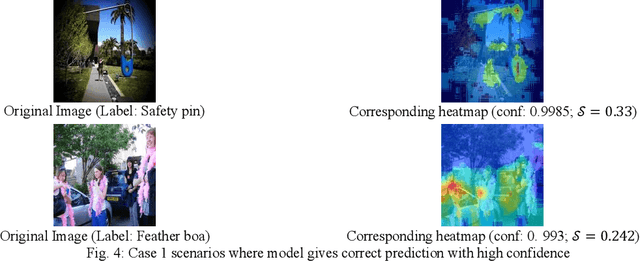

With growing demand for interpretable deep learning models, this paper introduces Integrative CAM, a Class Activation Mapping (CAM) technique that aims to give a holistic view of feature importance across Convolutional Neural Networks (CNNs). Traditional gradient-based CAM methods, such as Grad-CAM and Grad-CAM++, primarily use final-layer activations to highlight regions of interest and often neglect critical features derived from intermediate layers. Integrative CAM addresses this limitation by fusing insights across all network layers, using both gradient and activation scores to adaptively weight each layer's contribution, and so yields a more comprehensive interpretation of the model's internal representation. Our approach adds a novel bias term to the saliency map calculation. Existing CAM techniques frequently omit this factor, yet it is essential for capturing a more complete picture of feature importance, because modern CNNs rely on both weighted activations and biases to make predictions. Additionally, we generalize the alpha term from Grad-CAM++ to apply to any smooth function, which expands CAM applicability to a wider range of models. In extensive experiments on diverse and complex datasets, Integrative CAM demonstrates superior fidelity in feature importance mapping, enhancing interpretability for intricate fusion scenarios and complex decision-making tasks. By capturing multi-layered model insights, Integrative CAM provides a valuable tool for fusion-driven applications and promotes the trustworthy and insightful deployment of deep learning models.
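The abstract gives no formulas, so the following is only a rough sketch of the general idea of fusing per-layer CAMs, not the paper's method. It assumes standard Grad-CAM for each layer's map and a hypothetical layer weight equal to the mean of |gradient × activation|. The function names (`grad_cam_map`, `upsample_nearest`, `fuse_layers`) and the random inputs are illustrative, and the bias term and generalized alpha term described above are deliberately left out because their definitions are not stated here.

```python
import numpy as np

def grad_cam_map(activations, gradients):
    """Standard Grad-CAM for one layer; both inputs have shape (C, H, W)."""
    channel_weights = gradients.mean(axis=(1, 2))             # pool gradients per channel
    cam = np.tensordot(channel_weights, activations, axes=1)  # weighted sum over channels
    return np.maximum(cam, 0.0)                               # ReLU keeps positive evidence

def upsample_nearest(cam, size):
    """Nearest-neighbour resize of a 2-D map to (size, size)."""
    rows = np.arange(size) * cam.shape[0] // size
    cols = np.arange(size) * cam.shape[1] // size
    return cam[np.ix_(rows, cols)]

def fuse_layers(layer_acts, layer_grads, size):
    """Fuse per-layer CAMs with an ASSUMED layer score: mean |grad * act|."""
    maps, scores = [], []
    for acts, grads in zip(layer_acts, layer_grads):
        cam = upsample_nearest(grad_cam_map(acts, grads), size)
        peak = cam.max()
        maps.append(cam / peak if peak > 0 else cam)  # scale each map to [0, 1]
        scores.append(np.abs(acts * grads).mean())    # hypothetical layer importance
    scores = np.asarray(scores)
    total = scores.sum()
    if total > 0:
        weights = scores / total                      # layer weights sum to 1
    else:
        weights = np.full(len(scores), 1.0 / len(scores))
    fused = sum(w * m for w, m in zip(weights, maps))
    return fused, weights

# Synthetic stand-ins for two layers' activations and gradients.
rng = np.random.default_rng(0)
acts = [rng.random((4, 8, 8)), rng.random((8, 4, 4))]
grads = [rng.standard_normal((4, 8, 8)), rng.standard_normal((8, 4, 4))]
fused, weights = fuse_layers(acts, grads, size=16)
print(fused.shape, weights)
```

In practice the activations and gradients would come from hooks on a real network rather than random arrays, and nearest-neighbour resizing would likely be replaced by bilinear interpolation.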
