In Search of Probeable Generalization Measures


Oct 23, 2021

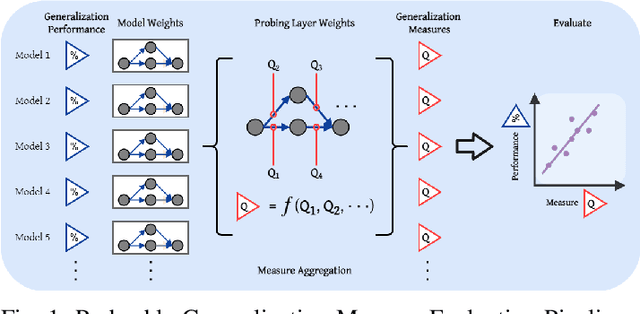

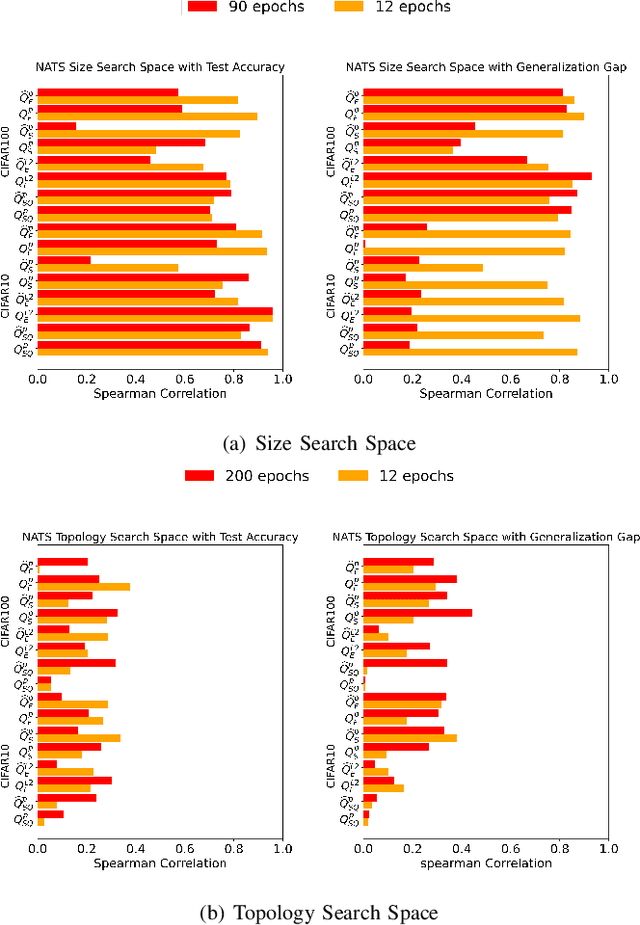

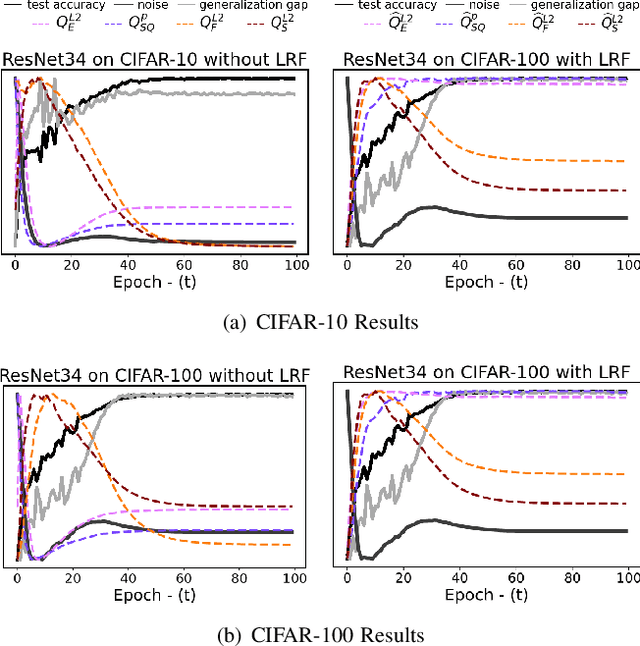

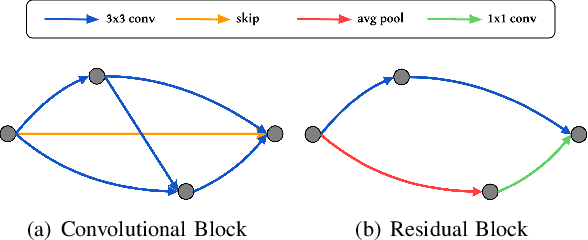

Understanding the generalization behaviour of deep neural networks is a topic of recent interest that has driven many studies, notably the development and evaluation of generalization "explainability" measures that quantify a model's ability to generalize. Generalization measures have also proven useful in developing powerful layer-wise model tuning and optimization algorithms, though these algorithms require a specific kind of generalization measure: one that can probe individual layers. The purpose of this paper is to explore the neglected subtopic of probeable generalization measures, to establish firm ground for further investigation, and to inspire and guide the development of novel model tuning and optimization algorithms. We evaluate and compare measures, demonstrating their effectiveness and robustness across model variations, dataset complexities, training hyperparameters, and training stages. We also introduce GenProb, a new dataset of trained models and performance metrics for testing generalization measures, model tuning algorithms, and optimization algorithms.
