In Defense of Product Quantization

Paper and Code

Nov 23, 2017

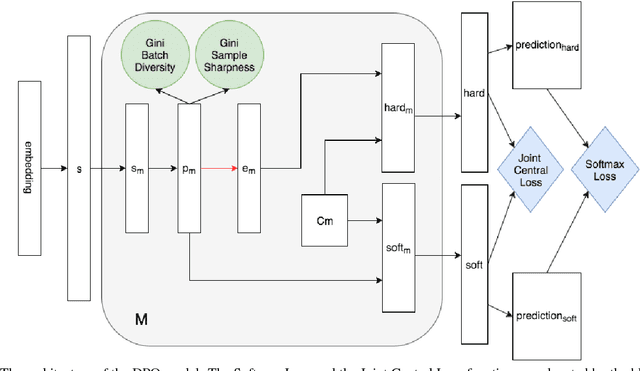

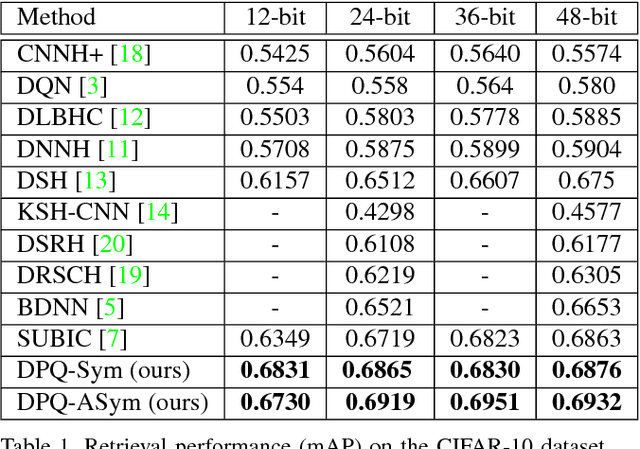

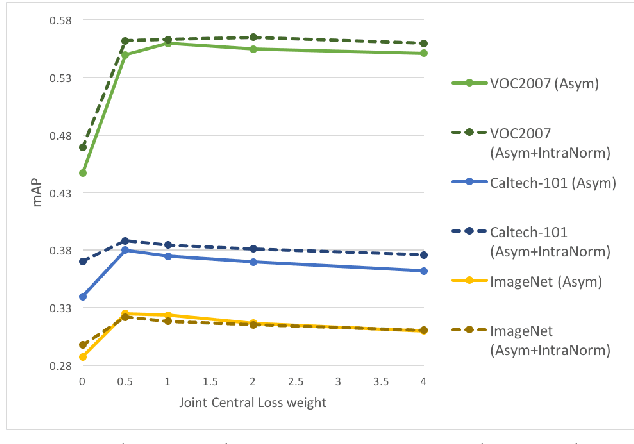

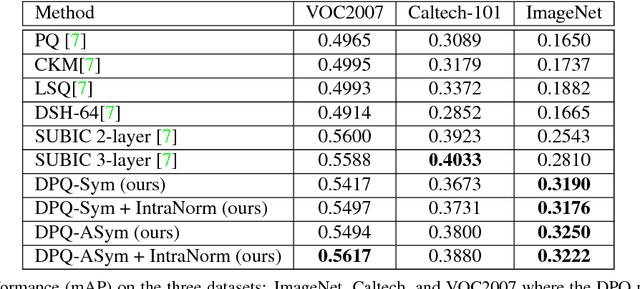

Despite their widespread adoption, Product Quantization techniques were recently shown to be inferior to other hashing techniques. In this work, we present an improved Deep Product Quantization (DPQ) technique that leads to more accurate retrieval and classification than the latest state of the art methods, while having similar computational complexity and memory footprint as the Product Quantization method. To our knowledge, this is the first work to introduce a representation that is inspired by Product Quantization and which is learned end-to-end, and thus benefits from the supervised signal. DPQ explicitly learns soft and hard representations to enable an efficient and accurate asymmetric search, by using a straight-through estimator. A novel loss function, Joint Central Loss, is introduced, which both improves the retrieval performance, and decreases the discrepancy between the soft and the hard representations. Finally, by using a normalization technique, we improve the results for cross-domain category retrieval.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge