Improving \textit{Tug-of-War} sketch using Control-Variates method

Mar 04, 2022

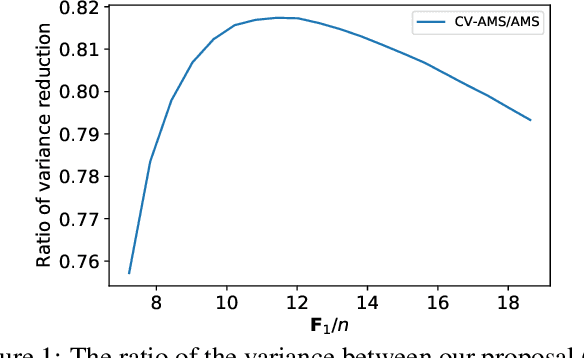

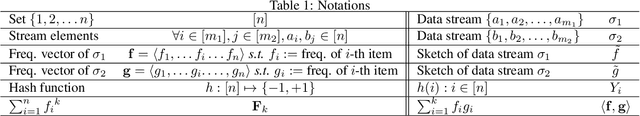

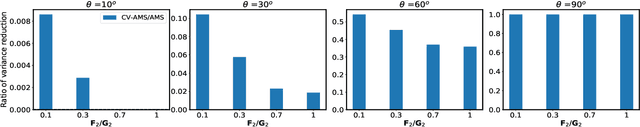

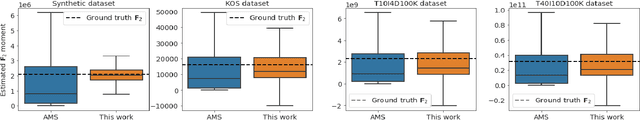

Computing space-efficient summaries of large data, \textit{a.k.a. sketches}, is a central problem in streaming algorithms. Such sketches are used to answer \textit{post-hoc} queries in several data analytics tasks. An algorithm for computing sketches is typically required to be fast, accurate, and space-efficient. A fundamental problem in the streaming framework is that of computing the frequency moments of a data stream. The frequency moments of a sequence containing $f_i$ elements of type $i$, for $i\in [n]$, are the numbers $\mathbf{F}_k=\sum_{i=1}^n f_i^k$. Equivalently, $\mathbf{F}_k$ is the $k$-th power of the $\ell_k$ norm of the frequency vector $(f_1, f_2, \ldots, f_n)$. Another important problem is to compute the similarity between two data streams via the inner product of the corresponding frequency vectors. The seminal \textit{Tug-of-war} (or AMS) sketch of Alon, Matias, and Szegedy~\cite{AMS} gives a randomized sublinear-space (and linear-time) algorithm for estimating the frequency moments and the inner product between the frequency vectors of two data streams. However, the variance of these estimates tends to be large. In this work, we focus on minimizing the variance of these estimates. We use techniques from the classical Control-Variate method~\cite{Lavenberg}, which is primarily known for variance reduction in Monte-Carlo simulations. As a result, we obtain significant variance reduction at the cost of a small computational overhead. We present a theoretical analysis of our proposal and complement it with supporting experiments on synthetic as well as real-world datasets.
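To make the two ingredients named in the abstract concrete, the following is a minimal Python sketch, not the paper's implementation. It assumes item ids in `[0, n)`, stores fully independent random signs explicitly (the AMS sketch only needs 4-wise independent hash functions, which is what makes it space-efficient), and shows the textbook control-variate correction on generic Monte-Carlo samples. How the paper builds its control variate for the sketch itself is not stated in the abstract, so that step is not reproduced here. All function names are hypothetical.

```python
import numpy as np


def tug_of_war_sketch(stream, n, num_counters, seed=0):
    """Return counters Z_j = sum_i s_j(i) * f_i for a stream of item ids in [0, n)."""
    rng = np.random.default_rng(seed)
    # Assumption: explicit, fully independent +/-1 signs for simplicity.
    # Two streams must use the same seed so that they share the same signs.
    signs = rng.choice(np.array([-1, 1]), size=(num_counters, n))
    counters = np.zeros(num_counters, dtype=np.int64)
    for item in stream:
        counters += signs[:, item]  # one update per arriving element
    return counters


def estimate_f2(counters):
    """Average of Z_j^2, an unbiased estimate of F_2 = sum_i f_i^2."""
    z = counters.astype(np.float64)
    return float(np.mean(z * z))


def estimate_inner_product(counters_a, counters_b):
    """Average of Z_j(a) * Z_j(b), an unbiased estimate of <f_a, f_b>."""
    za = counters_a.astype(np.float64)
    zb = counters_b.astype(np.float64)
    return float(np.mean(za * zb))


def control_variate_mean(y, x, mean_x):
    """Textbook control-variate estimate of E[Y] using X with known mean.

    Returns (estimate, c), where c = Cov(Y, X) / Var(X) is estimated
    from the samples and the estimate is mean(y) - c * (mean(x) - mean_x).
    """
    y = np.asarray(y, dtype=np.float64)
    x = np.asarray(x, dtype=np.float64)
    c = np.cov(y, x)[0, 1] / np.var(x, ddof=1)
    return float(np.mean(y) - c * (np.mean(x) - mean_x)), float(c)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    stream = rng.integers(0, 100, size=5000)
    freq = np.bincount(stream, minlength=100)
    sketch = tug_of_war_sketch(stream, n=100, num_counters=500)
    print("exact F_2:    ", int(np.sum(freq.astype(np.int64) ** 2)))
    print("estimated F_2:", estimate_f2(sketch))

    # Generic Monte-Carlo illustration: estimate E[exp(U)], U ~ Uniform(0, 1),
    # using U itself (known mean 0.5) as the control variate.
    u = rng.random(10_000)
    estimate, c = control_variate_mean(np.exp(u), u, mean_x=0.5)
    print("control-variate estimate of e - 1:", estimate)
```

The sketch is linear in the frequency vector, which is why counters built with the same signs can be added across streams and multiplied to estimate inner products. The control-variate step subtracts a correlated quantity whose mean is known exactly, which lowers the variance whenever the sample covariance is nonzero.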