Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss


Nov 05, 2020

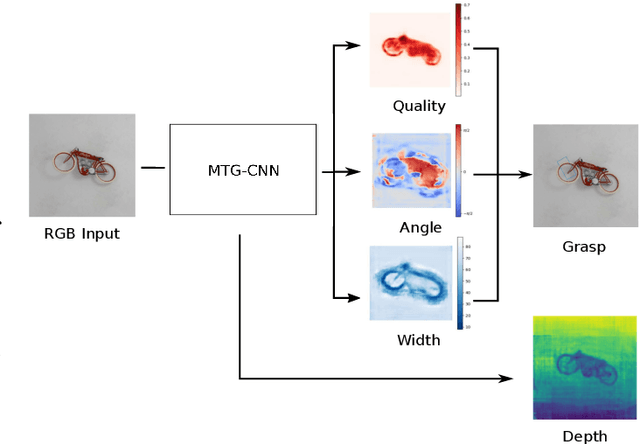

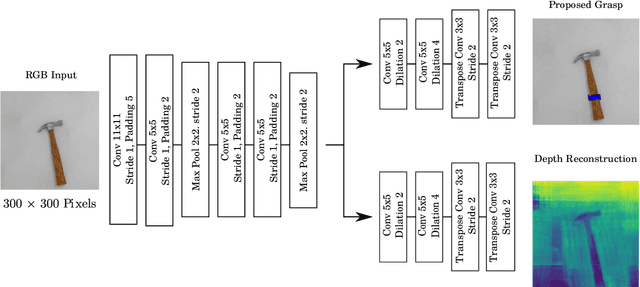

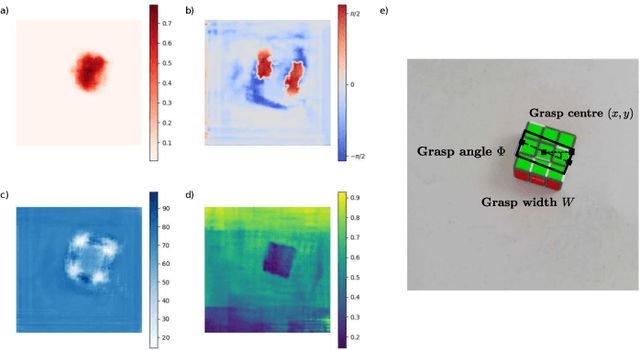

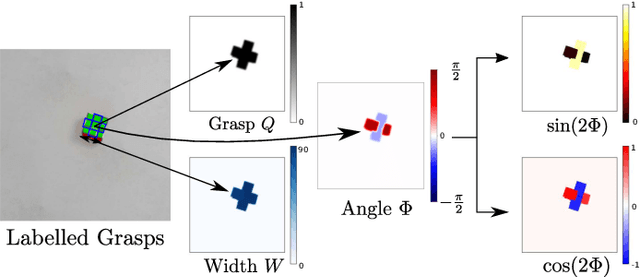

In this paper, we introduce two methods of improving real-time object grasping performance from monocular colour images in an end-to-end CNN architecture. The first is the addition of an auxiliary task during model training (multi-task learning). When trained with a supplementary depth reconstruction task, our multi-task CNN model improves grasping performance from a baseline average of 72.04% to 78.14% on the large Jacquard grasping dataset. The second is a positional loss function that emphasises the per-pixel loss for secondary parameters (gripper angle and width) only at points on an object where a successful grasp can take place. This increases performance from a baseline average of 72.04% to 78.92% and reduces the number of training epochs required. The two methods can also be applied in tandem, resulting in a further performance increase to 79.12% while maintaining sufficient inference speed for real-time grasp processing.
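To make the two ideas concrete, the following is a minimal sketch of how a positional loss and an auxiliary depth term might be combined. The abstract does not give the exact formulation, so everything here is an assumption for illustration: the use of mean squared error, the definition of the mask as "ground-truth grasp quality greater than zero", the dictionary keys, and the hypothetical `depth_weight` parameter.

```python
import numpy as np

def positional_loss(pred, target, grasp_mask):
    """Mean squared error restricted to pixels where a grasp is possible.

    Assumption: grasp_mask > 0 marks graspable pixels; the paper's actual
    masking rule and error measure may differ.
    """
    mask = (grasp_mask > 0).astype(float)
    n = mask.sum()
    if n == 0:
        return 0.0  # no graspable pixels, so no positional penalty
    sq_err = (pred - target) ** 2
    return float((sq_err * mask).sum() / n)

def total_loss(pred, target, depth_weight=1.0):
    """Grasp losses plus an auxiliary depth reconstruction term.

    pred and target are dicts of 2D arrays with the (hypothetical) keys
    "quality", "angle", "width" and "depth".
    """
    mask = target["quality"]
    # Grasp quality is penalised over the whole image.
    quality = np.mean((pred["quality"] - target["quality"]) ** 2)
    # Secondary parameters are penalised only on graspable pixels.
    angle = positional_loss(pred["angle"], target["angle"], mask)
    width = positional_loss(pred["width"], target["width"], mask)
    # Auxiliary task: depth reconstruction from the colour input.
    depth = np.mean((pred["depth"] - target["depth"]) ** 2)
    return float(quality + angle + width + depth_weight * depth)
```

In this sketch, angle and width errors on background pixels contribute nothing to the loss, which is one plausible reading of "only at points on an object where a successful grasp can take place".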
