Improved Detection of Adversarial Images Using Deep Neural Networks


Jul 10, 2020

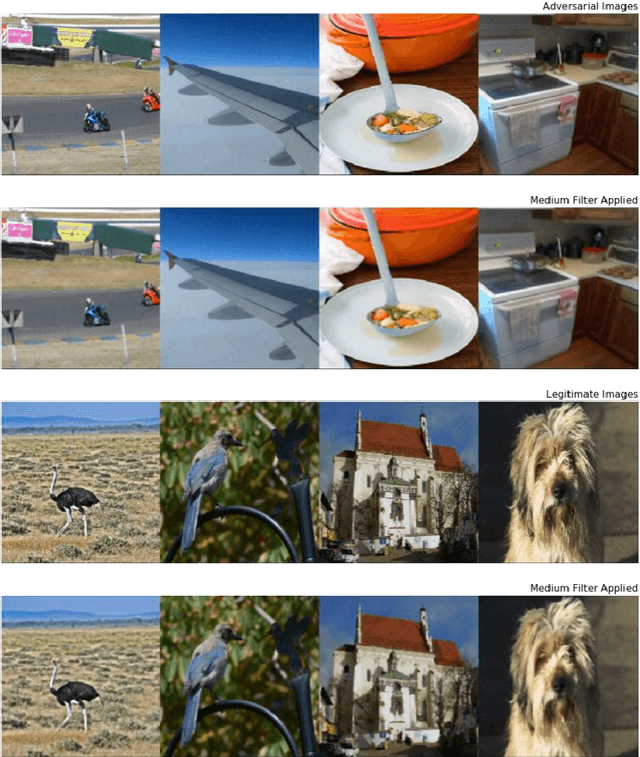

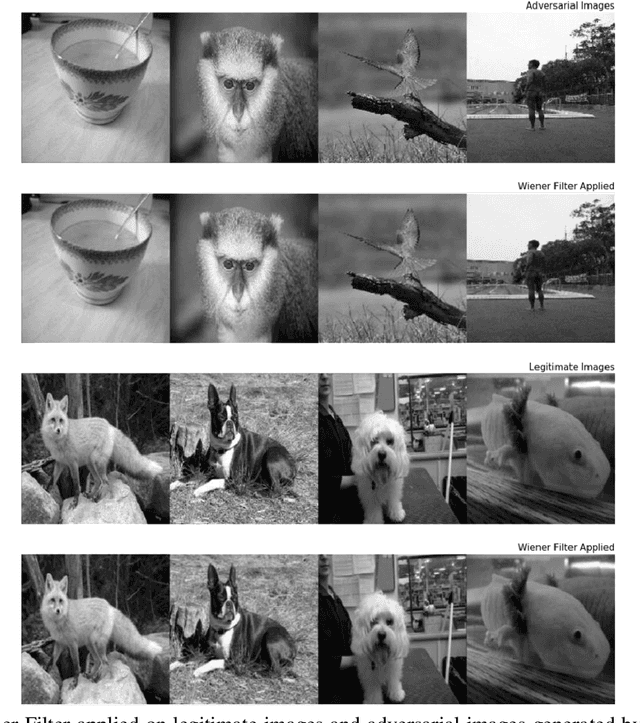

Machine learning techniques are widely deployed in both industry and academia. Recent studies indicate that machine learning models used for classification tasks are vulnerable to adversarial examples, which limits their use in fields with high precision requirements. We propose a new approach called Feature Map Denoising to detect adversarial inputs, and we evaluate its detection performance on a mixed dataset of adversarial examples generated by different attack algorithms. The approach can be attached to any pre-trained DNN at low cost. A Wiener filter is also introduced as the denoising algorithm in the defense model, which further improves performance. Experimental results indicate that our Feature Map Denoising algorithm detects adversarial examples with good accuracy.
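To make the idea concrete, the following is a minimal sketch of how Wiener-filter denoising of a feature map could yield a detection signal. It is not the paper's implementation: the abstract does not specify the filter settings, the layer used, or the decision rule, so the local adaptive Wiener filter, the 3x3 window, the function names `wiener_denoise` and `detection_score`, and the mean-absolute-difference score are all assumptions made for illustration. The synthetic arrays stand in for feature maps that would normally come from a pre-trained DNN.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def wiener_denoise(fmap, size=3):
    """Local adaptive Wiener filter on a 2-D feature map (assumed variant)."""
    pad = size // 2
    padded = np.pad(fmap, pad, mode="reflect")
    windows = sliding_window_view(padded, (size, size))
    local_mean = windows.mean(axis=(-1, -2))
    local_var = windows.var(axis=(-1, -2))
    # Noise power is estimated as the average local variance.
    noise = local_var.mean()
    # Gain is 0 where local variance is below the noise estimate,
    # and approaches 1 where the local signal dominates.
    gain = np.maximum(local_var - noise, 0.0) / np.maximum(local_var, 1e-12)
    return local_mean + gain * (fmap - local_mean)


def detection_score(fmap, size=3):
    """Hypothetical score: how much the map changes under denoising."""
    denoised = wiener_denoise(fmap, size)
    return float(np.mean(np.abs(fmap - denoised)))


# Synthetic stand-ins for feature maps (assumption: real maps would be
# taken from an intermediate layer of a pre-trained network).
rng = np.random.default_rng(0)
axis = np.sin(np.linspace(0.0, np.pi, 32))
clean = np.outer(axis, axis)
perturbed = clean + 0.3 * rng.standard_normal(clean.shape)

print("clean score:    ", detection_score(clean))
print("perturbed score:", detection_score(perturbed))
```

In this sketch, a map carrying high-frequency perturbations changes more under denoising than a smooth one, so a threshold on the score (or a small classifier trained on such scores) could serve as the detector. How the score is actually computed and thresholded in the paper is not stated in the abstract.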
