Image-to-Height Domain Translation for Synthetic Aperture Sonar

Dec 12, 2021

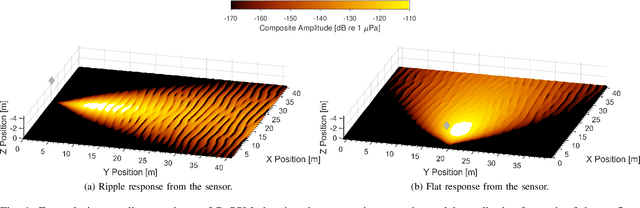

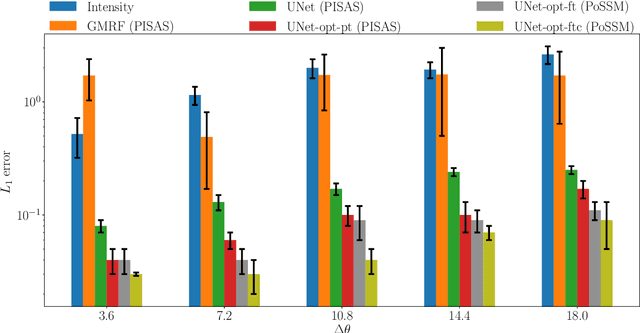

Synthetic aperture sonar (SAS) observations of seabed texture depend on several factors. In this work, we focus on collection geometry with respect to isotropic and anisotropic textures. The low grazing angle of the collection geometry, combined with the orientation of the sonar path relative to anisotropic texture, poses a significant challenge for image alignment and other multi-view scene understanding frameworks. We previously proposed using features captured from estimated seabed relief to improve scene understanding. While several methods have been developed to estimate seabed relief from intensity, no large-scale study exists in the literature. Furthermore, no dataset of coregistered seabed relief maps and sonar imagery exists from which to learn this domain translation. We address these problems by producing a large simulated dataset containing coregistered pairs of seabed relief and intensity maps from two distinct sonar data simulation techniques. We apply three types of models of varying complexity to translate intensity imagery to seabed relief: a Gaussian Markov Random Field (GMRF) approach, a conditional Generative Adversarial Network (cGAN), and UNet architectures. The methods are compared against the coregistered simulated datasets using L1 error. Additionally, predictions on simulated and real SAS imagery are shown. Finally, the models are compared on two datasets of hand-aligned SAS imagery and evaluated in terms of L1 error across multiple aspects, in comparison with using intensity. Our comprehensive experiments show that the proposed UNet architectures outperform the GMRF and pix2pix cGAN models on seabed relief estimation for simulated and real SAS imagery.
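The L1 comparison mentioned in the abstract can be illustrated with a minimal sketch. It assumes the error is the per-pixel mean absolute difference between a predicted relief map and its coregistered reference; the abstract does not specify the normalization, and the function name `mean_l1_error` and the toy arrays below are hypothetical, not taken from the paper's code.

```python
import numpy as np


def mean_l1_error(predicted, reference):
    """Mean absolute difference between two coregistered height maps.

    Assumption: both maps share the same shape and units, and the
    error is averaged over all pixels.
    """
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if predicted.shape != reference.shape:
        raise ValueError("height maps must be coregistered (same shape)")
    return float(np.mean(np.abs(predicted - reference)))


# Toy 2x2 relief maps (illustrative values only, not real data).
reference = np.array([[0.0, 0.1], [0.2, 0.3]])
predicted = np.array([[0.0, 0.2], [0.1, 0.3]])
error = mean_l1_error(predicted, reference)
```

The same function could be applied to each model's output on a held-out simulated pair, giving one scalar per model for comparison.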
