Image-based Virtual Fitting Room

Paper and Code

Apr 08, 2021

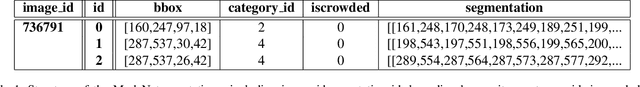

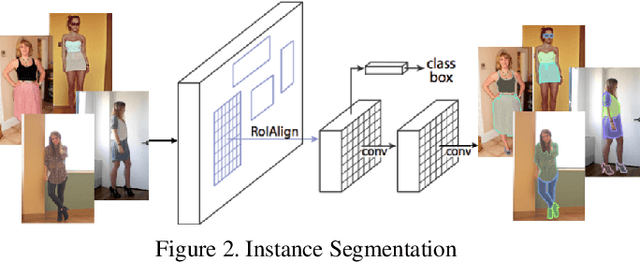

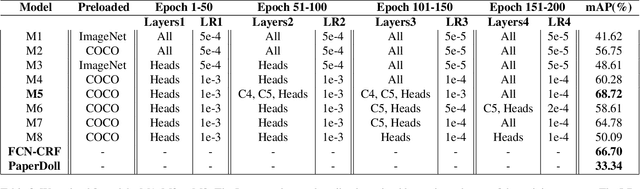

A virtual fitting room is a challenging task but a useful feature for e-commerce platforms and fashion designers. Existing works can detect only a few types of fashion items, and they perform poorly at changing the texture and style of the selected items. In this project, we propose a novel approach to address this problem. We first use Mask R-CNN to find the regions of different fashion items, and then use Neural Style Transfer to change the style of the selected items. Our dataset combines images from the PaperDoll dataset with annotations provided by eBay's ModaNet. We trained 8 models; our best model substantially outperformed the baseline models both quantitatively and qualitatively, reaching 68.72% mAP and 0.2% ASDR.
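The two-stage idea (segment a garment, then restyle only that garment) can be illustrated with a minimal NumPy sketch. It assumes the segmentation stage yields a per-item mask of shape (H, W) and the style-transfer stage yields a stylized image the same size as the input. The function names are hypothetical, not taken from the project's code. The style loss shown is the Gram-matrix loss from Gatys et al.'s neural style transfer; the abstract does not say which style-transfer variant the project used.

```python
import numpy as np


def composite_stylized_region(image: np.ndarray, stylized: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Replace only the masked fashion-item pixels with their stylized version.

    image, stylized: float arrays of shape (H, W, 3) with values in [0, 1].
    mask: array of shape (H, W), boolean or soft in [0, 1]; assumed to be a
    thresholded instance mask such as Mask R-CNN produces (hypothetical input format).
    """
    if image.shape != stylized.shape:
        raise ValueError("image and stylized must have the same shape")
    if mask.shape != image.shape[:2]:
        raise ValueError("mask must match the image height and width")
    # (H, W, 1) so the mask broadcasts over the RGB channels.
    alpha = mask.astype(np.float64)[..., None]
    # Alpha blend: stylized pixels inside the garment, original pixels elsewhere.
    return alpha * stylized + (1.0 - alpha) * image


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (C, H, W) feature map, normalized by its size."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)


def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between Gram matrices (Gatys-style style loss)."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((4, 4, 3))
    stylized = np.ones((4, 4, 3))
    mask = np.zeros((4, 4), dtype=bool)
    mask[1:3, 1:3] = True  # stand-in for one garment's segmentation mask
    out = composite_stylized_region(image, stylized, mask)
    print(out[1, 1], out[0, 0])
```

Blending with the mask rather than stylizing the whole frame keeps the person, background, and unselected garments unchanged, which is the point of locating items before applying style transfer.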
