IF-GAN: A Novel Generator Architecture with Information Feedback


Oct 18, 2022

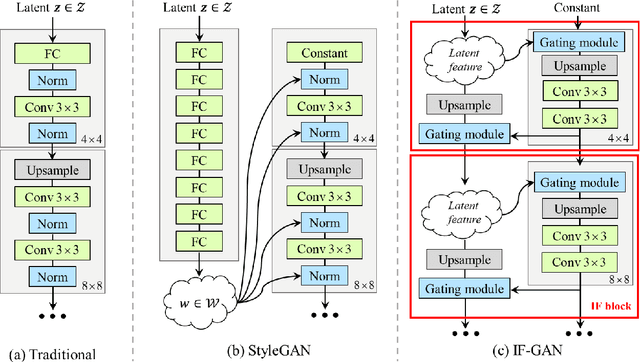

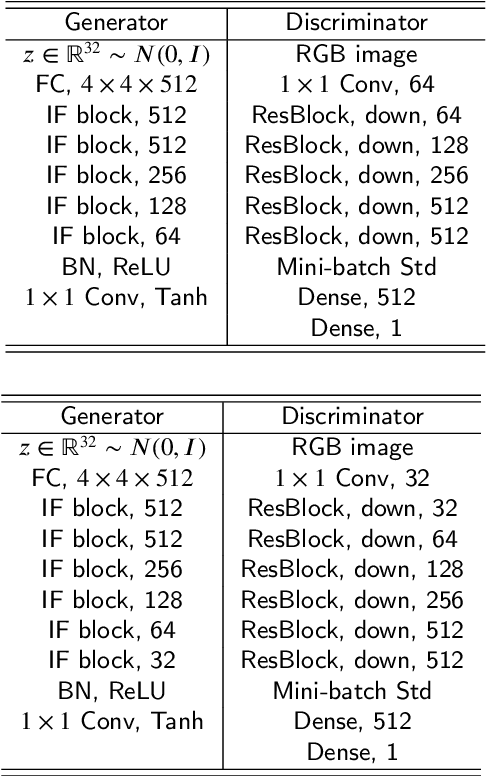

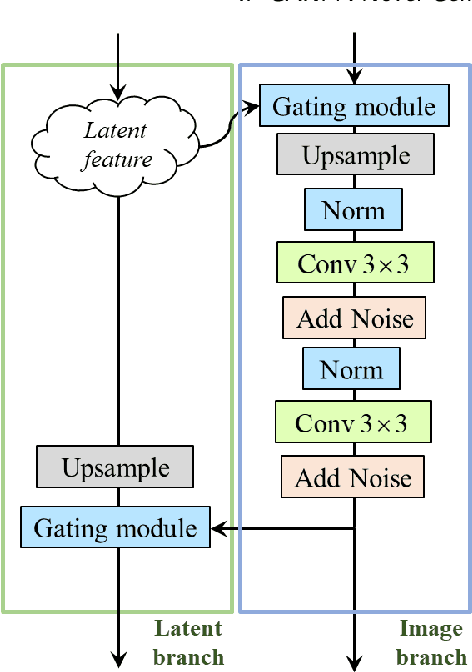

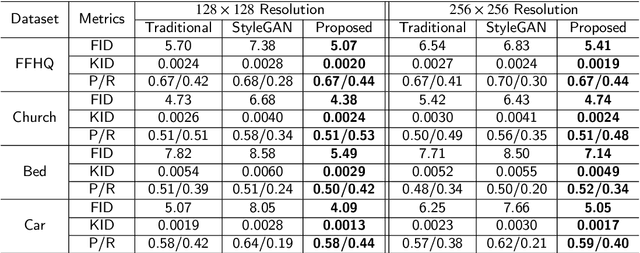

This paper presents an alternative generator architecture for image generation that incorporates a novel information feedback system. Unlike conventional methods, in which the latent space unilaterally affects the feature space in the generator, the proposed method trains both the feature space and the latent space by exchanging information between them. To this end, we introduce a novel module, called the information feedback (IF) block, which jointly updates the latent and feature spaces. To demonstrate the effectiveness of the proposed method, we present extensive experiments on various datasets, including subsets of LSUN and FFHQ. Experimental results show that the proposed method can substantially improve image generation performance in terms of Fréchet inception distance (FID), kernel inception distance (KID), and Precision and Recall (P & R).
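The abstract does not specify the internals of the IF block. The sketch below is a rough illustration of a two-way exchange between latent and feature spaces, and it assumes one plausible design. The latent vector modulates each feature channel (latent → feature), and globally pooled features are projected back into a residual update of the latent (feature → latent). The class `InfoFeedbackBlock`, the projection matrices `to_scale` and `to_latent`, and the average-pooling choice are all hypothetical and are not taken from the paper. The weights are random rather than learned, and plain Python lists stand in for tensors.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    """Small Gaussian-initialised matrix (untrained, for illustration only)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class InfoFeedbackBlock:
    """Hypothetical two-way block: latent -> features, then features -> latent.

    features: list of C channels, each a flat list of H*W activations.
    latent:   vector of length D.
    This is an assumed design, not the IF block from the paper.
    """

    def __init__(self, latent_dim: int, channels: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Hypothetical projection from latent to per-channel scales (C x D).
        self.to_scale = random_matrix(channels, latent_dim, rng)
        # Hypothetical projection from pooled features back to latent (D x C).
        self.to_latent = random_matrix(latent_dim, channels, rng)

    def __call__(self, latent: Vector, features: List[Vector]) -> Tuple[Vector, List[Vector]]:
        # 1) Latent -> feature: scale every activation in channel c by (1 + s_c).
        scales = matvec(self.to_scale, latent)
        new_features = [[x * (1.0 + s) for x in ch] for ch, s in zip(features, scales)]
        # 2) Feature -> latent: global average pool each channel ...
        pooled = [sum(ch) / len(ch) for ch in new_features]
        # ... then project back to the latent space and add as a residual update.
        delta = matvec(self.to_latent, pooled)
        new_latent = [w + d for w, d in zip(latent, delta)]
        return new_latent, new_features


if __name__ == "__main__":
    block = InfoFeedbackBlock(latent_dim=4, channels=3)
    w = [0.5, -0.2, 0.1, 0.3]
    feats = [[1.0, 2.0, 3.0, 4.0] for _ in range(3)]  # 3 channels, 2x2 spatial, flattened
    w_next, feats_next = block(w, feats)
    print(len(w_next), [len(ch) for ch in feats_next])
```

In this sketch, both outputs are returned, so chaining several blocks lets the latent vector change from layer to layer together with the feature maps, rather than staying fixed while only the features are updated.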
