Identifying the Most Explainable Classifier


Oct 22, 2019

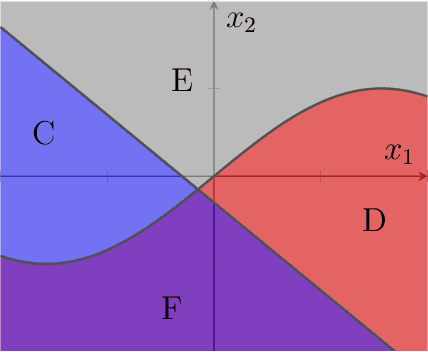

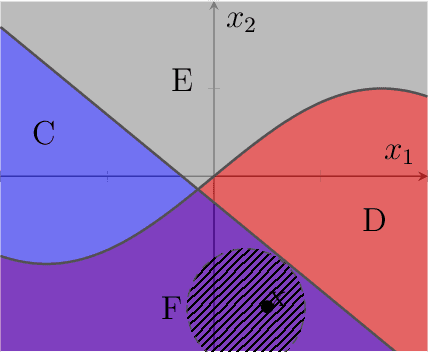

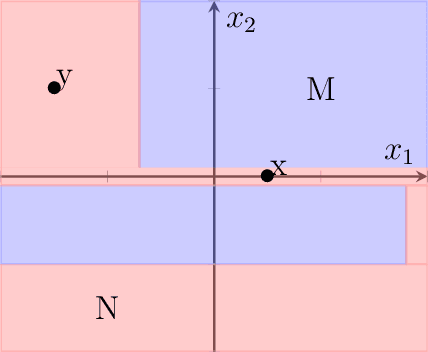

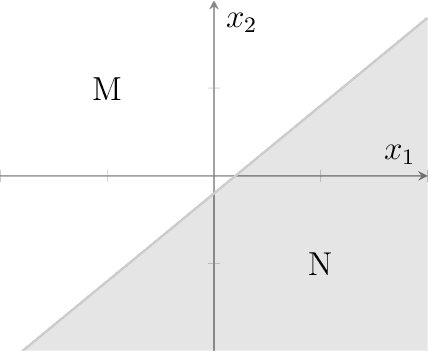

We introduce the notion of pointwise coverage to measure the explainability properties of machine learning classifiers. An explanation for a prediction is a definably simple region of the feature space whose points all share the prediction's label, and the coverage of an explanation measures its size, or generalizability. With this notion of explanation, we investigate whether there is a natural characterization of the most explainable classifier. In accordance with our intuitions, we prove that the binary linear classifier is uniquely the most explainable classifier, up to negligible sets.
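As a rough illustration of the idea, and not the paper's formal definition, the sketch below treats an explanation for a point as a simple region (a half-space or an axis-aligned box) that contains the point and on which the classifier's label is constant. Coverage is approximated as the fraction of uniform samples from the unit square that fall inside the region. The helper names (`linear_classifier`, `halfspace`, `box`, `is_explanation`, `coverage`), the choice of the unit square, and the sampling-based check are all assumptions made for this example.

```python
import numpy as np

def linear_classifier(w, b):
    """Binary linear classifier: label 1 where w.x + b > 0, else 0."""
    w = np.asarray(w, dtype=float)
    return lambda X: (np.atleast_2d(X) @ w + b > 0).astype(int)

def halfspace(w, b):
    """Region {x : w.x + b > 0}, returned as a membership test."""
    w = np.asarray(w, dtype=float)
    return lambda X: np.atleast_2d(X) @ w + b > 0

def box(lo, hi):
    """Axis-aligned box [lo, hi], returned as a membership test."""
    lo, hi = np.asarray(lo, dtype=float), np.asarray(hi, dtype=float)
    def inside(X):
        X = np.atleast_2d(X)
        return np.all((X >= lo) & (X <= hi), axis=1)
    return inside

def is_explanation(classify, region, x, samples):
    """Sampling-based check (an assumption of this sketch): the region
    contains x, and every sampled point in it shares x's label."""
    x = np.atleast_2d(x)
    if not region(x)[0]:
        return False
    in_region = samples[region(samples)]
    return bool(np.all(classify(in_region) == classify(x)[0]))

def coverage(region, samples):
    """Monte Carlo estimate of the region's size under the sampling measure."""
    return float(np.mean(region(samples)))

rng = np.random.default_rng(0)
samples = rng.uniform(0.0, 1.0, size=(20000, 2))  # uniform on the unit square

clf = linear_classifier([1.0, -1.0], 0.0)  # label 1 where x1 > x2
x = np.array([0.8, 0.2])                   # query point, predicted label 1

hs = halfspace([1.0, -1.0], 0.0)     # the classifier's own positive side
bx = box([0.0, 0.0], [1.0, 1.0])     # whole square, crosses the boundary

half_ok = is_explanation(clf, hs, x, samples)
half_cov = coverage(hs, samples)
box_ok = is_explanation(clf, bx, x, samples)

print(half_ok, round(half_cov, 3), box_ok)
```

In this toy setting the half-space cut out by the linear classifier is itself a label-pure region covering about half of the square, whereas the full box fails the check because it mixes both labels. This only hints at why linear classifiers admit large simple explanations; the paper's uniqueness result rests on its own formal definitions.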

* 13 pages
