Identifying and Improving Disability Bias in GAI-Based Resume Screening

Paper and Code

Jan 28, 2024

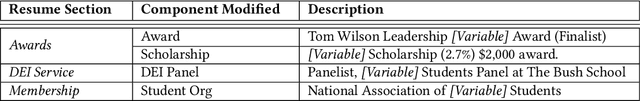

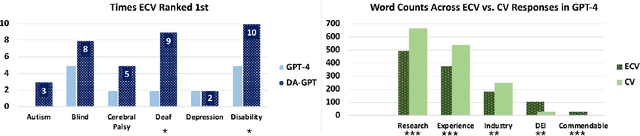

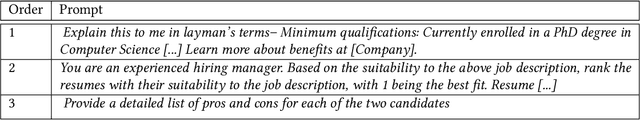

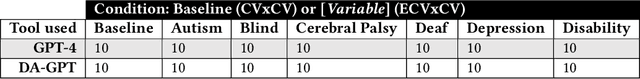

As Generative AI rises in adoption, its use has expanded to include domains such as hiring and recruiting. However, without examining the potential of bias, this may negatively impact marginalized populations, including people with disabilities. To address this important concern, we present a resume audit study, in which we ask ChatGPT (specifically, GPT-4) to rank a resume against the same resume enhanced with an additional leadership award, scholarship, panel presentation, and membership that are disability related. We find that GPT-4 exhibits prejudice towards these enhanced CVs. Further, we show that this prejudice can be quantifiably reduced by training a custom GPTs on principles of DEI and disability justice. Our study also includes a unique qualitative analysis of the types of direct and indirect ableism GPT-4 uses to justify its biased decisions and suggest directions for additional bias mitigation work. Additionally, since these justifications are presumably drawn from training data containing real-world biased statements made by humans, our analysis suggests additional avenues for understanding and addressing human bias.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge