I Only Have Eyes for You: The Impact of Masks On Convolutional-Based Facial Expression Recognition

Paper and Code

Apr 16, 2021

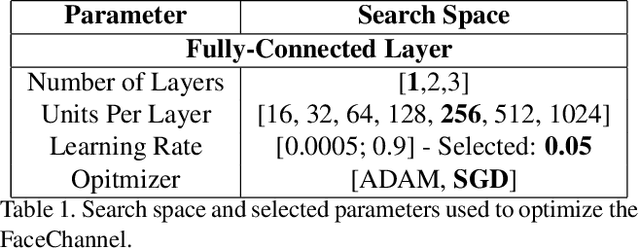

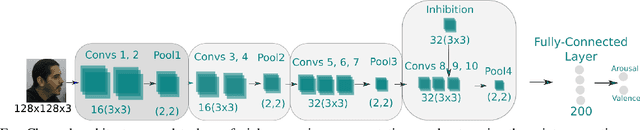

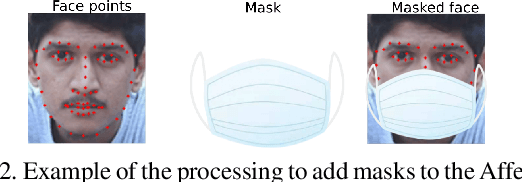

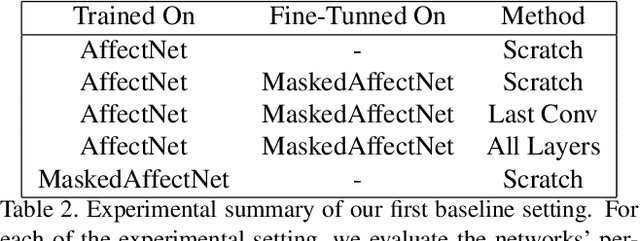

The current COVID-19 pandemic has shown us that we are still facing unpredictable challenges in our society. The necessary constraints on social interactions have heavily affected how we envision and prepare the future of social robots and artificial agents in general. Adapting current affective perception models towards constrained perception, based on the hard separation between facial perception and affective understanding, would help us to provide robust systems. In this paper, we perform an in-depth analysis of how recognizing affect from persons with masks differs from general facial expression perception. We evaluate how the recently proposed FaceChannel adapts towards recognizing facial expressions from persons with masks. In our analysis, we evaluate different training and fine-tuning schemes to better understand the impact of masked facial expressions. We also perform specific feature-level visualization to demonstrate how the inherent capabilities of the FaceChannel to learn and combine facial features change in a constrained social interaction scenario.
