"I Don't Think So": Disagreement-Based Policy Summaries for Comparing Agents

Paper and Code

Feb 05, 2021

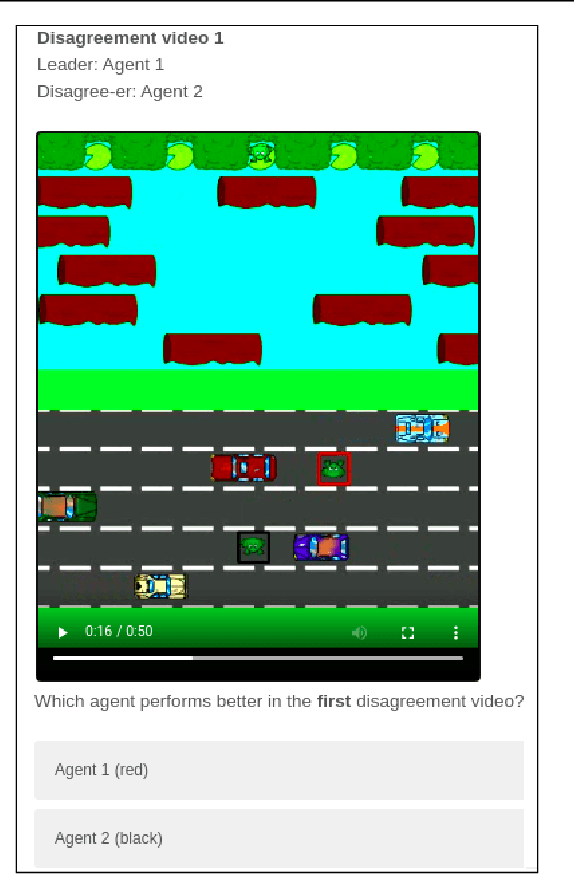

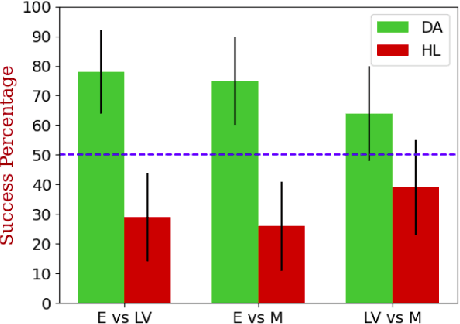

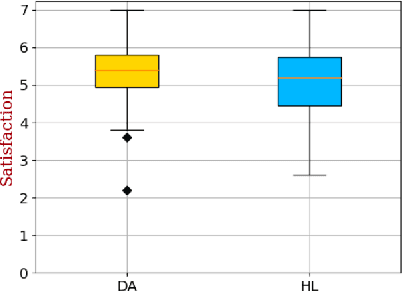

As artificial intelligence becomes more widespread, people interact with autonomous agents more frequently. Effective human-agent collaboration requires that the human understand the agent's behavior; failing to do so may lead to reduced productivity, misuse, frustration, and even danger. Agent strategy summarization methods describe an agent's strategy to its intended user through demonstration. The purpose of a summary is to maximize the user's understanding of the agent's aptitude by showcasing its behavior in a set of world states chosen according to some importance criterion. Although these methods have been shown to be useful, we show that they are limited in supporting the task of comparing agent behavior, because they generate a summary for each agent independently. In this paper, we propose a novel method for generating contrastive summaries that highlight the differences between agents' policies by identifying and ranking states in which the agents disagree on the best course of action. We conduct a user study in which participants face an agent selection task. Our results show that the novel disagreement-based summaries lead to improved user performance compared to summaries generated using HIGHLIGHTS, a previous strategy summarization algorithm.
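To make the idea of "identifying and ranking states in which the agents disagree" concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the paper's implementation: it assumes each agent exposes a tabular Q-function (`q_a`, `q_b`), and the ranking score (how much each agent believes it would lose by taking the other agent's preferred action) is a hypothetical importance measure chosen for this example. The function names `greedy_action` and `rank_disagreements` are likewise invented here.

```python
from typing import Dict, List, Tuple

# Assumption: a tabular Q-function mapping a state name to per-action values.
QTable = Dict[str, List[float]]


def greedy_action(q_values: List[float]) -> int:
    """Index of the highest-valued action (first one wins on ties)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def rank_disagreements(q_a: QTable, q_b: QTable, k: int = 3) -> List[Tuple[str, float]]:
    """Return up to k states where the agents' greedy actions differ,
    ordered from most to least important under a hypothetical score."""
    scored = []
    for state in q_a.keys() & q_b.keys():  # only states both agents know
        act_a = greedy_action(q_a[state])
        act_b = greedy_action(q_b[state])
        if act_a == act_b:
            continue  # the agents agree here, so nothing to contrast
        # Hypothetical importance: each agent's estimated loss from
        # following the other agent's choice, summed over both agents.
        loss_a = q_a[state][act_a] - q_a[state][act_b]
        loss_b = q_b[state][act_b] - q_b[state][act_a]
        scored.append((state, loss_a + loss_b))
    # Highest score first; break ties by state name for determinism.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:k]


# Toy example with three states and two actions (made-up values).
q_a = {"s0": [1.0, 0.0], "s1": [0.2, 0.9], "s2": [0.5, 0.4]}
q_b = {"s0": [0.9, 0.1], "s1": [0.8, 0.1], "s2": [0.0, 3.0]}
top = rank_disagreements(q_a, q_b)
print([state for state, _ in top])
```

In this toy example the agents agree in `s0`, so only `s1` and `s2` are candidates for a contrastive summary, and `s2` ranks first because the second agent values its own choice there far more than the alternative. A full summary would then show both agents acting from the top-ranked states.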