Hypothesis Testing using Causal and Causal Variational Generative Models


Oct 20, 2022

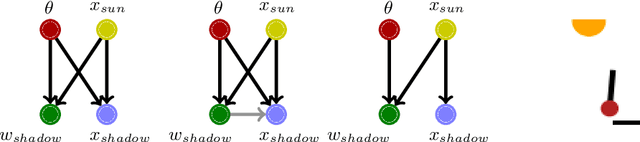

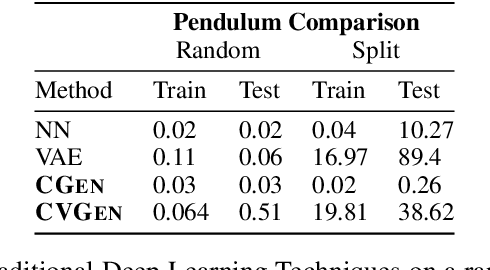

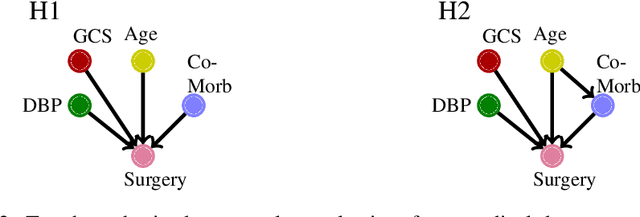

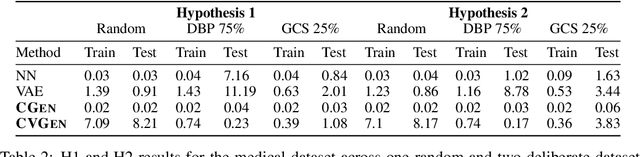

Hypothesis testing and the use of expert knowledge, or causal priors, have not been well explored in the context of generative models. We propose a novel set of generative architectures, Causal Gen and Causal Variational Gen, that combine nonparametric structural causal knowledge with deep learning function approximation. We show that, under a deliberate (non-random) split of training and testing data, these models generalize better to similar but out-of-distribution data points than non-causal generative and prediction models such as variational autoencoders and fully connected neural networks. We explore using this generalization error as a proxy for causal model hypothesis testing. We further show how dropout can be used to learn functional relationships of structural models that are difficult to learn with traditional methods. We validate our methods on a synthetic pendulum dataset as well as a trauma surgery ground-level fall dataset.
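The idea of scoring a causal hypothesis by its error on a deliberately held-out region can be illustrated with a toy sketch. This is not the paper's architecture: ordinary least squares stands in for the deep generative models, the data are synthetic, and the names `deliberate_split` and `ood_error` are hypothetical helpers introduced only for this illustration.

```python
import numpy as np


def deliberate_split(X, split_col, quantile=0.8):
    """Non-random split: hold out rows whose split_col value exceeds the quantile."""
    threshold = np.quantile(X[:, split_col], quantile)
    test_mask = X[:, split_col] > threshold
    return ~test_mask, test_mask


def ood_error(X, y, parents, train_mask, test_mask):
    """Fit y on the hypothesised parent columns; return MSE on the held-out region."""
    A_train = np.column_stack([X[train_mask][:, parents], np.ones(train_mask.sum())])
    coef, *_ = np.linalg.lstsq(A_train, y[train_mask], rcond=None)
    A_test = np.column_stack([X[test_mask][:, parents], np.ones(test_mask.sum())])
    return float(np.mean((A_test @ coef - y[test_mask]) ** 2))


# Synthetic data (assumption): x0 causes y, x1 is unrelated noise.
rng = np.random.default_rng(0)
n = 2000
x0 = rng.uniform(0.0, 1.0, n)
x1 = rng.normal(0.0, 1.0, n)
y = 2.0 * x0 + rng.normal(0.0, 0.05, n)
X = np.column_stack([x0, x1])

# Train on low values of x0, test on the unseen high values.
train_mask, test_mask = deliberate_split(X, split_col=0)

err_true = ood_error(X, y, [0], train_mask, test_mask)   # hypothesis: x0 -> y
err_wrong = ood_error(X, y, [1], train_mask, test_mask)  # hypothesis: x1 -> y

print(f"OOD MSE, hypothesis x0 -> y: {err_true:.4f}")
print(f"OOD MSE, hypothesis x1 -> y: {err_wrong:.4f}")
```

In this toy setting the hypothesis that matches the data-generating structure extrapolates to the held-out region, while the mismatched one predicts roughly the training mean and incurs a much larger error. The gap between the two errors is the kind of signal the abstract proposes as a proxy for comparing causal hypotheses.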
