Human Whole-Body Dynamics Estimation for Enhancing Physical Human-Robot Interaction


Dec 03, 2019

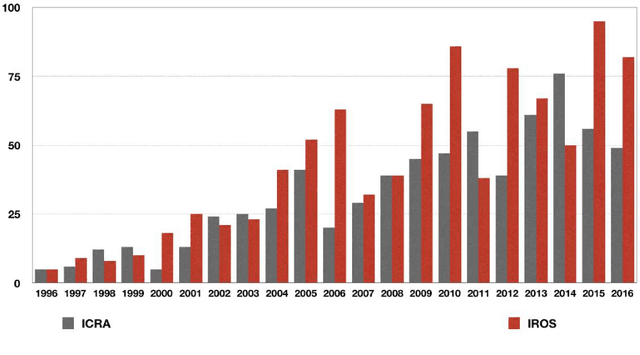

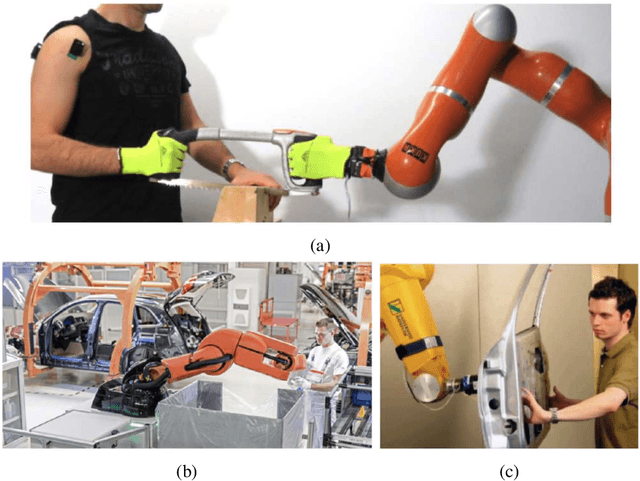

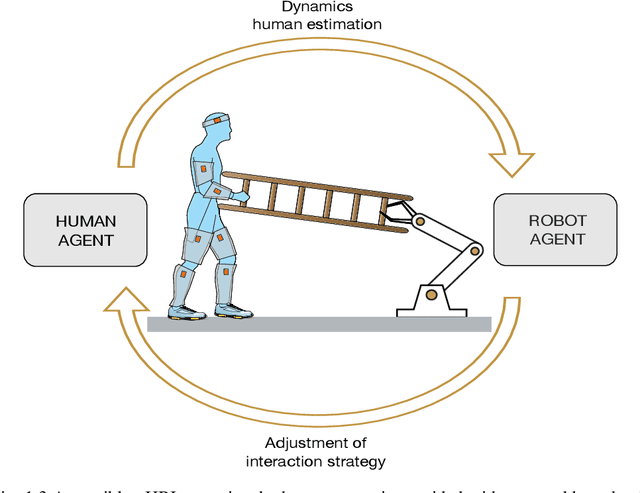

In the last two decades, the scientific community has shown great interest in understanding and shaping the interaction mechanisms between humans and robots. Interaction implies communication between the two agents of a dyad and, when the interaction is physical, this communication takes the form of the forces exchanged between them. Within this context, quantifying these forces becomes pivotal for understanding the interaction mechanisms. At the current stage of research, classical robots are built to act for humans, but research is moving towards robots that will have to collaborate with humans. This will be possible by providing robots with sufficient information about the agent they are interacting with (i.e., a kinematic and dynamic model of the human). In a modern age where humans need the help of robots, this thesis, in an apparently opposite direction, attempts to answer the following questions: Do robots need humans? Should robots know their human partners? A tentative answer is provided here in the form of a novel framework for simultaneous, real-time human whole-body motion tracking and dynamics estimation. The framework combines a set of body-mounted sensors with a probabilistic algorithm capable of estimating physical quantities that cannot be measured directly in humans (i.e., torques and internal forces). This thesis focuses mainly on estimating human dynamics, but it opens the door to the next development step: feeding the human dynamics estimates back to the robot controllers. This step will give the robot the ability to observe and understand its human partner, enabling an enhanced (intentional) interaction.
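The abstract does not specify the probabilistic algorithm. As a minimal sketch, assuming a linear-Gaussian formulation solved by maximum a posteriori (MAP) estimation, the snippet below shows how a quantity that is not directly measurable (here a joint torque) can be inferred by fusing a dynamics model with noisy sensor readings. The single-link pendulum model, the function names `map_estimate` and `estimate_joint_torque`, and all noise levels and numerical values are hypothetical illustrations, not the thesis's actual method or implementation.

```python
import numpy as np


def map_estimate(mu_d, sigma_d, Y, y, sigma_y):
    """Gaussian MAP estimate of d, given the prior d ~ N(mu_d, sigma_d) and
    linear measurements y = Y d + noise, with noise ~ N(0, sigma_y)."""
    sigma_d_inv = np.linalg.inv(sigma_d)
    sigma_y_inv = np.linalg.inv(sigma_y)
    # Posterior covariance: (Sigma_d^-1 + Y^T Sigma_y^-1 Y)^-1
    sigma_post = np.linalg.inv(sigma_d_inv + Y.T @ sigma_y_inv @ Y)
    # Posterior mean; for a Gaussian posterior it coincides with the MAP estimate
    mu_post = sigma_post @ (Y.T @ sigma_y_inv @ y + sigma_d_inv @ mu_d)
    return mu_post, sigma_post


def estimate_joint_torque(q, qdd_measured, inertia, mass, length, g=9.81):
    """Hypothetical 1-DoF example: a single link rotating about a joint.

    Unknowns d = [qdd, tau]; only qdd is (noisily) measured, tau is not.
    """
    # Row 1: model equation inertia*qdd - tau = -mass*g*length*sin(q),
    #        treated as a pseudo-measurement with a very small variance.
    # Row 2: sensor reading of the angular acceleration qdd (noisier).
    Y = np.array([[inertia, -1.0],
                  [1.0, 0.0]])
    y = np.array([-mass * g * length * np.sin(q), qdd_measured])
    sigma_y = np.diag([1e-6, 1e-2])
    # Weak, zero-mean prior on both unknowns
    mu_d = np.zeros(2)
    sigma_d = np.diag([1e4, 1e4])
    return map_estimate(mu_d, sigma_d, Y, y, sigma_y)


if __name__ == "__main__":
    mu, cov = estimate_joint_torque(np.pi / 2, 2.0, inertia=0.5, mass=1.0, length=1.0)
    print("qdd estimate:", mu[0])
    print("torque estimate:", mu[1])
    print("torque variance:", cov[1, 1])
```

With a weak prior, the torque estimate approaches `inertia * qdd_measured + mass * g * length * sin(q)`, while the posterior covariance quantifies how much the estimate can be trusted. Adding further sensors in this sketch amounts to appending rows to `Y`, `y`, and `sigma_y`.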
