How to Defend Against Large-scale Model Poisoning Attacks in Federated Learning: A Vertical Solution


Nov 16, 2024

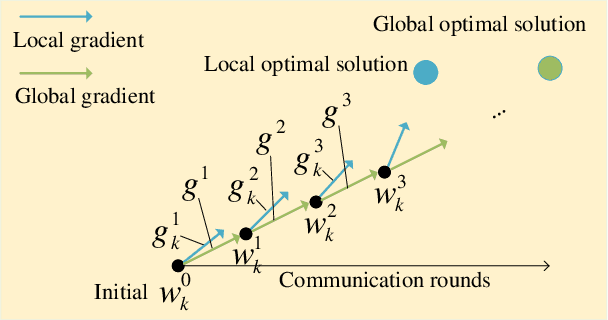

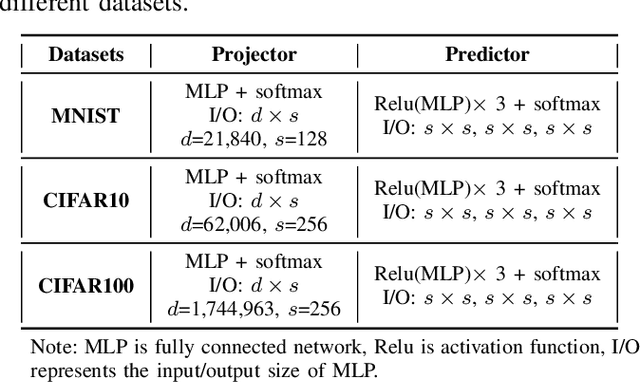

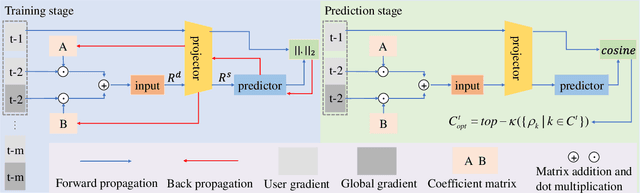

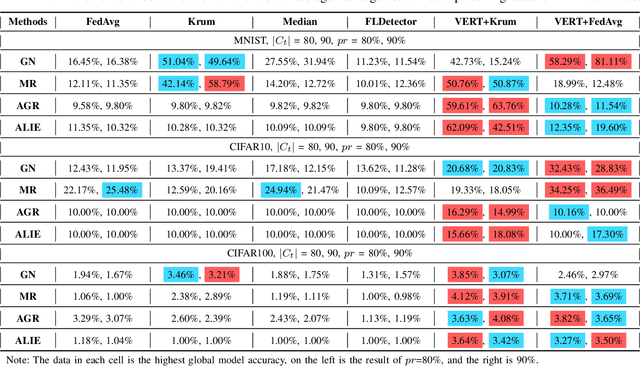

Federated learning (FL) is vulnerable to model poisoning attacks because of its distributed nature. Existing defenses take all user gradients (model updates) within a single communication round and solve for the optimal aggregation gradients; we call this the horizontal solution. The horizontal solution fails completely against large-scale (>50%) model poisoning attacks, where malicious users form the majority. In this work, based on the key insight that model convergence is a highly predictable process, we break away from the horizontal approach and recast the search for the optimal aggregation gradients as a vertical problem. We propose VERT, which treats the global communication rounds as the vertical axis, trains a predictor on historical gradient information to predict user gradients, and compares the predictions with the actual user gradients by similarity to select the optimal aggregation gradients precisely and efficiently. To reduce the computational complexity of VERT, we design a low-dimensional vector projector that maps user gradients to a computationally acceptable length before the predictor is trained and applied. Extensive experiments show that VERT is efficient and scalable, and that it defends effectively against large-scale (>=80%) model poisoning attacks in different FL scenarios. In addition, the projector can be designed with a different structure for each model architecture, so that VERT adapts to aggregation servers with different computing power.
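The vertical idea described in the abstract (project, predict from history, compare, select) can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not specify the projector or predictor, so the sketch assumes a fixed random Gaussian projection in place of the designed projector and a two-round linear extrapolation in place of the trained predictor. All function names (`make_projector`, `predict_next`, `select_and_aggregate`) and the toy attack (malicious users send the negated gradient) are hypothetical.

```python
import numpy as np

def make_projector(dim, low_dim, seed=0):
    # Assumption: a fixed random Gaussian matrix stands in for the
    # low-dimensional vector projector mentioned in the abstract.
    rng = np.random.default_rng(seed)
    return rng.standard_normal((dim, low_dim)) / np.sqrt(low_dim)

def predict_next(history):
    # Assumption: linear extrapolation from the last two rounds stands in
    # for the trained predictor. `history` has shape (rounds, low_dim).
    if len(history) < 2:
        return history[-1]
    return 2 * history[-1] - history[-2]

def cosine(a, b):
    # Cosine similarity with a small constant to avoid division by zero.
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

def select_and_aggregate(user_grads, predicted, projector, keep):
    # Project every user gradient, score it against the prediction,
    # and average the `keep` most similar full-size gradients.
    projected = user_grads @ projector
    scores = np.array([cosine(p, predicted) for p in projected])
    chosen = np.argsort(scores)[-keep:]
    return user_grads[chosen].mean(axis=0), chosen

# Toy round: 10 users, of which 8 (80%) are malicious.
rng = np.random.default_rng(1)
dim, low_dim = 50, 8
projector = make_projector(dim, low_dim)
g = rng.standard_normal(dim)  # hypothetical "true" descent direction

# Projected aggregates from two earlier rounds, shrinking over time.
history = np.stack([1.0 * g @ projector, 0.9 * g @ projector])
predicted = predict_next(history)

benign = 0.8 * g + 0.01 * rng.standard_normal((2, dim))
malicious = -0.8 * g + 0.01 * rng.standard_normal((8, dim))
user_grads = np.vstack([benign, malicious])  # users 0 and 1 are benign

aggregate, chosen = select_and_aggregate(user_grads, predicted, projector, keep=2)
print("selected users:", sorted(chosen.tolist()))
```

Because each update is scored against a prediction built from earlier rounds rather than against the other updates in the same round, the selection in this toy setting does not depend on benign users being the majority. The number of gradients to keep (`keep`) is a free parameter of the sketch, not a value taken from the paper.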
