How Human is Human Evaluation? Improving the Gold Standard for NLG with Utility Theory

Paper and Code

May 24, 2022

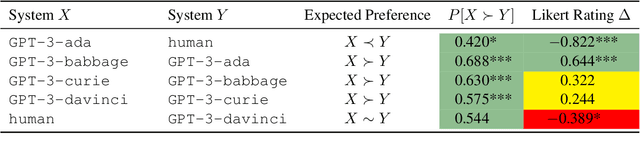

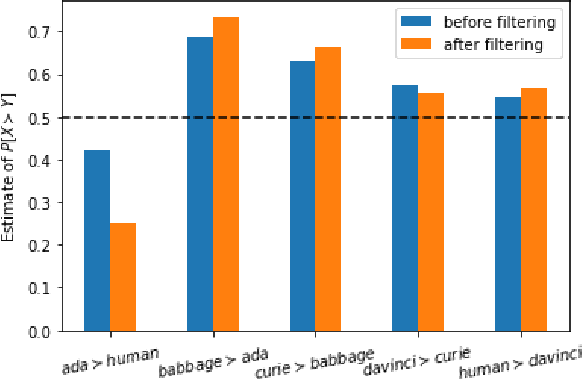

Human ratings are treated as the gold standard in NLG evaluation. The standard protocol is to collect ratings of generated text, average them across annotators, and rank NLG systems by their average scores. However, little consideration has been given to whether this approach faithfully captures human preferences. In this work, we analyze the standard protocol through the lens of utility theory in economics. We first identify the implicit assumptions it makes about annotators and find that these assumptions are often violated in practice, in which case annotator ratings become an unfaithful reflection of their preferences. The most egregious violations come from using Likert scales, which provably reverse the direction of the true preference in certain cases. We suggest improvements to the standard protocol to make it more theoretically sound, but even in its improved form, it cannot be used to evaluate open-ended tasks like story generation. For such tasks, we propose a new evaluation protocol called *system-level probabilistic assessment* (SPA). In our experiments, we find that according to SPA, annotators prefer larger GPT-3 variants to smaller ones, as expected, with all comparisons being statistically significant. In contrast, the standard protocol yields significant results only half the time.
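The claim that averaged Likert ratings can reverse a true preference can be illustrated with a small numeric sketch. This is not the paper's proof or its data: the latent utility values, the equal-width 5-point binning in `to_likert`, and the names `system_a` and `system_b` are all assumptions chosen for illustration. The idea is that a Likert scale coarsens an annotator's underlying utility into a few bins, so the ranking of average ratings need not match the ranking of average utilities.

```python
from statistics import mean

def to_likert(utility: float, points: int = 5) -> int:
    """Map a latent utility in [0, 1] to a Likert rating in 1..points.

    Assumption: equal-width bins. Real annotators may bin differently.
    """
    return min(points, int(utility * points) + 1)

# Hypothetical latent utilities one annotator assigns to three outputs
# from each system (made-up numbers, not taken from the paper).
system_a = [0.39, 0.39, 0.99]
system_b = [0.41, 0.41, 0.81]

# What the annotator actually prefers: average latent utility.
utility_a = mean(system_a)
utility_b = mean(system_b)

# What the standard protocol measures: average Likert rating.
likert_a = mean(to_likert(u) for u in system_a)
likert_b = mean(to_likert(u) for u in system_b)

print(f"mean utility: A={utility_a:.3f}, B={utility_b:.3f}")
print(f"mean Likert:  A={likert_a:.3f}, B={likert_b:.3f}")
print("A preferred by utility:", utility_a > utility_b)
print("A preferred by Likert: ", likert_a > likert_b)
```

In this toy setup, system A has the higher average utility while system B has the higher average Likert rating. The reversal happens because A's weaker outputs fall just below a bin boundary and B's fall just above it, so the scale exaggerates a small difference in B's favor while hiding A's larger advantage on its best output.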
