How Far Can Fairness Constraints Help Recover From Biased Data?


Dec 16, 2023

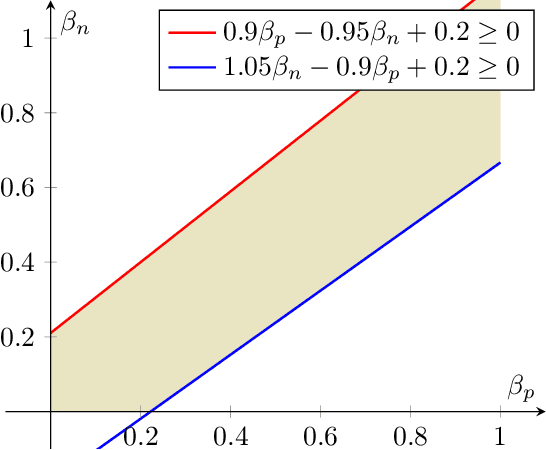

Blum & Stangl (2019) propose a data bias model to simulate under-representation and label bias in an underprivileged population. For a stylized data distribution with i.i.d. label noise, and under certain simple conditions on the bias parameters, they show that fair classification with equal opportunity constraints, even on an extremely biased distribution, can recover an optimally accurate and fair classifier on the original distribution. Although their distribution is stylized, their result is interesting because it demonstrates that fairness constraints can implicitly rectify data bias and simultaneously overcome a perceived fairness-accuracy trade-off. In this paper, we give an alternate proof of their result using a threshold-based characterization of optimal fair classifiers. Moreover, we show that their conditions on the bias parameters are both necessary and sufficient for their recovery result. Our technique is arguably more flexible, as it readily extends to more general distributions, e.g., when the labels in the original distribution have Massart noise instead of i.i.d. noise. Finally, we prove that for any data distribution, if the optimally accurate classifier in a hypothesis class is fair and robust, then it can be recovered through fair classification on the biased distribution, whenever the bias parameters satisfy certain simple conditions.
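
To make the two kinds of bias named in the abstract concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' code: the function names `apply_bias` and `tpr_gap`, the parameters `beta` (retention probability for positives in the underprivileged group) and `nu` (probability of flipping a retained positive label to negative), and the encoding `group == 1` for the underprivileged group are all hypothetical choices, and the exact parametrization in Blum & Stangl (2019) may differ. The second function measures the violation of the equal opportunity constraint as the absolute difference in true positive rates between the two groups.

```python
import numpy as np

def apply_bias(X, y, group, beta, nu, rng):
    """Hypothetical bias injection (an assumption, not the paper's code).

    For the underprivileged group (group == 1):
      1. under-representation: keep each positive example with probability beta;
      2. label bias: flip each retained positive label to 0 with probability nu.
    Examples from group 0 and negatives from group 1 are left untouched.
    """
    X, y, group = np.asarray(X), np.asarray(y), np.asarray(group)
    target = (group == 1) & (y == 1)

    # Step 1: drop some positives of the underprivileged group.
    keep = np.ones(len(y), dtype=bool)
    keep[target] = rng.random(int(target.sum())) < beta
    Xb, yb, gb = X[keep], y[keep].copy(), group[keep]

    # Step 2: flip some of the surviving positives to negative.
    flip = (gb == 1) & (yb == 1) & (rng.random(len(yb)) < nu)
    yb[flip] = 0
    return Xb, yb, gb

def tpr_gap(y_true, y_pred, group):
    """Equal opportunity violation: |TPR(group 0) - TPR(group 1)|."""
    y_true, y_pred, group = map(np.asarray, (y_true, y_pred, group))
    tpr = [y_pred[(group == g) & (y_true == 1)].mean() for g in (0, 1)]
    return abs(tpr[0] - tpr[1])

# Small synthetic example: 1-D features, two groups, balanced labels.
rng = np.random.default_rng(0)
n = 1000
group = rng.integers(0, 2, size=n)
y = rng.integers(0, 2, size=n)
X = rng.normal(loc=y, scale=1.0, size=n).reshape(-1, 1)

Xb, yb, gb = apply_bias(X, y, group, beta=0.5, nu=0.3, rng=rng)
```

A fair learner would then be trained on `(Xb, yb, gb)` subject to `tpr_gap` being zero (or small) and evaluated on the original `(X, y, group)`; the recovery result in the abstract concerns when that procedure returns the classifier that is optimal on the original distribution.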
