How Do Humans Write Code? Large Models Do It the Same Way Too

Paper and Code

Feb 24, 2024

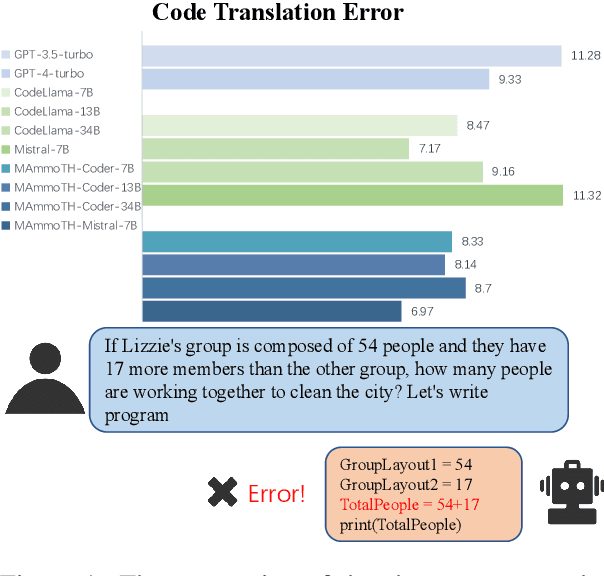

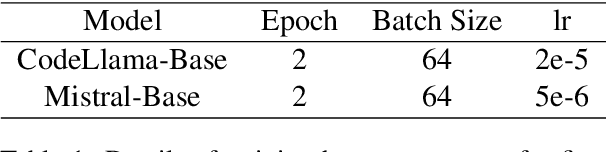

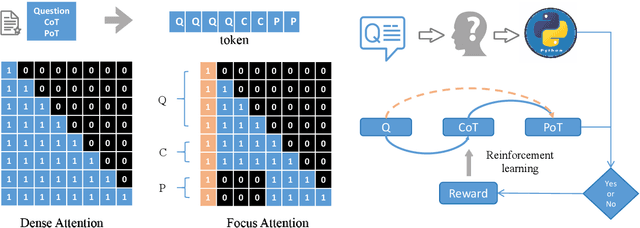

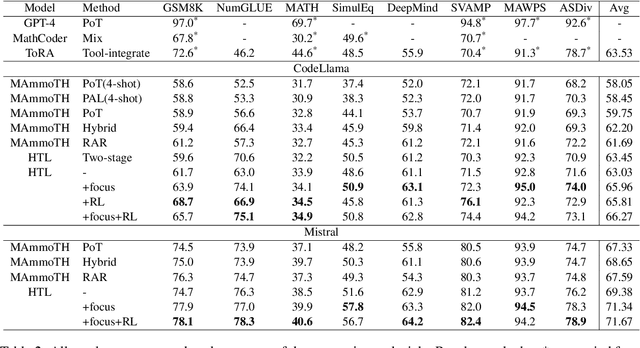

Large Language Models (LLMs) often make errors when performing numerical calculations. In contrast to traditional chain-of-thought reasoning, the program-of-thoughts approach generates executable code to solve a problem and obtains the answer by executing it, which reduces computational errors compared with reasoning in natural language. However, we observe that when LLMs solve mathematical problems using code, they tend to produce more incorrect reasoning than when using natural language. To address this issue, we propose Human-Think Language (HTL), a straightforward yet highly efficient approach inspired by human coding practices. The model first describes its problem-solving method in natural language and then converts that description into code, mirroring how people think through the logic in natural language before writing it as code. Additionally, HTL uses the Proximal Policy Optimization (PPO) algorithm, which lets the model give itself feedback based on the correctness of its mathematical answers, much like humans do. Finally, we introduce a focus-attention mechanism that masks the question segment during code generation, increasing the model's reliance on the natural-language reasoning. We conduct our experiments without introducing any additional information, and results across five mathematical calculation datasets demonstrate the effectiveness of our approach. Notably, on the NumGLUE dataset, the LlaMA-2-7B-based model achieves 75.1%, surpassing the previous best result of 74.4% obtained with the LlaMA-2-70B model.
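To make the focus-attention idea concrete, here is a minimal sketch of what such a mask could look like. It is an illustration and not the paper's implementation. It assumes the sequence is laid out as question tokens, then natural-language reasoning tokens, then code tokens, and that masking means code positions simply cannot attend to question positions. The function name `build_focus_mask` and the segment lengths are hypothetical.

```python
def build_focus_mask(q_len, cot_len, code_len):
    """Return a boolean matrix where mask[i][j] is True if position i
    may attend to position j.

    Assumed layout (hypothetical): [question | reasoning | code].
    """
    total = q_len + cot_len + code_len
    code_start = q_len + cot_len
    mask = []
    for i in range(total):
        row = []
        for j in range(total):
            # Standard causal rule: a token sees itself and earlier tokens.
            allowed = j <= i
            # Assumed focus rule: code tokens are blocked from the question,
            # so they must rely on the natural-language reasoning instead.
            if i >= code_start and j < q_len:
                allowed = False
            row.append(allowed)
        mask.append(row)
    return mask


# Tiny example: 2 question tokens, 2 reasoning tokens, 2 code tokens.
mask = build_focus_mask(q_len=2, cot_len=2, code_len=2)
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
```

In this toy layout the reasoning tokens still see the question, while the code tokens see only the reasoning and earlier code. A real model would apply an equivalent mask inside its attention layers, and the details (for example, which tokens stay visible) may differ from this sketch.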
