Guiding Theorem Proving by Recurrent Neural Networks

Paper and Code

May 20, 2019

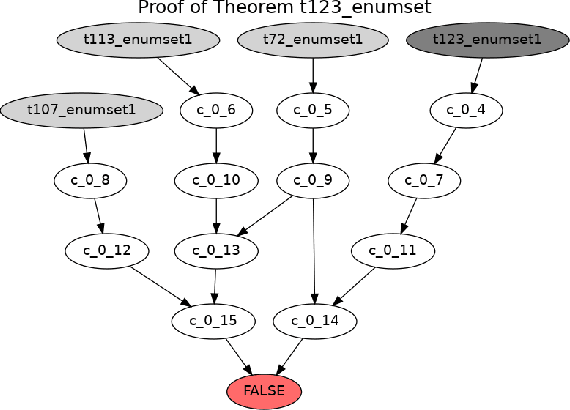

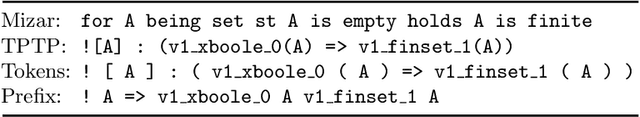

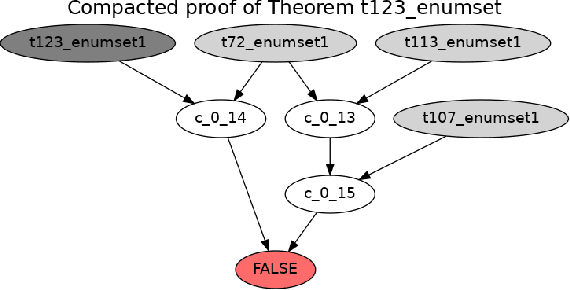

We describe two theorem proving tasks -- premise selection and internal guidance -- for which machine learning has been recently used with some success. We argue that the existing methods however do not correspond to the way how humans approach these tasks. In particular, the existing methods so far lack the notion of a state that is updated each time a choice in the reasoning process is made. To address that, we propose an analogy with tasks such as machine translation, where stateful architectures such as recurrent neural networks have been recently very successful. Then we develop and publish a series of sequence-to-sequence data sets that correspond to the theorem proving tasks using several encodings, and provide the first experimental evaluation of the performance of recurrent neural networks on such tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge