Group Invariant Dictionary Learning

Paper and Code

Jul 15, 2020

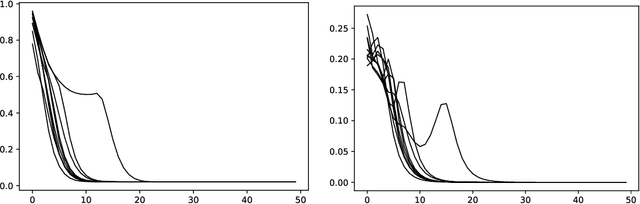

The dictionary learning problem concerns the task of representing data as sparse linear combinations of elements drawn from a smaller collection of basic building blocks. In application domains where such techniques are deployed, we frequently encounter datasets in which some form of symmetry or invariance is present. Based on this observation, it is natural to learn dictionaries that also respect these symmetries. In this paper, we develop a framework for learning dictionaries for data under the constraint that the collection of basic building blocks remains invariant under these symmetries. Our framework specializes to the convolutional dictionary learning problem when we consider translational symmetries. Our procedure for learning such dictionaries relies on representing the symmetry as the action of a matrix group on the data, and subsequently introducing a convex penalty function that induces sparsity with respect to the collection of matrix group elements. Using properties of positive semidefinite Hermitian Toeplitz matrices, we apply our framework to learn dictionaries that are invariant under continuous shifts. Our numerical experiments on synthetic data and ECG data show that incorporating such symmetries as priors is most valuable when the dataset has few data points, or when the full range of symmetries is inadequately expressed in the dataset.
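To make the claim that translational symmetry recovers convolutional dictionary learning concrete, here is a minimal sketch, assuming the discrete cyclic-shift group Z_n acting on length-n signals. It does not model the continuous shifts, the Toeplitz machinery, or the convex penalty described above, and the function names (`shift`, `orbit_dictionary`, `synthesize`, `circular_convolution`, `is_shift_invariant`) are hypothetical illustrations, not the paper's code. Taking every group element applied to a generating atom yields a dictionary that is shift-invariant by construction, and a linear combination of those shifted atoms is exactly a circular convolution of the atom with the coefficient vector.

```python
from typing import List

Vector = List[float]


def shift(v: Vector, k: int) -> Vector:
    """Action of group element k in Z_n (hypothetical helper): cyclic shift by k."""
    n = len(v)
    return [v[(i - k) % n] for i in range(n)]


def orbit_dictionary(generators: List[Vector]) -> List[Vector]:
    """Collect every shifted copy of each generating atom (its orbit under Z_n)."""
    atoms = []
    for a in generators:
        for k in range(len(a)):
            atoms.append(shift(a, k))
    return atoms


def is_shift_invariant(atoms: List[Vector]) -> bool:
    """Check that shifting any atom by any k lands back inside the dictionary."""
    return all(shift(atom, k) in atoms for atom in atoms for k in range(len(atom)))


def synthesize(atoms: List[Vector], coeffs: List[float]) -> Vector:
    """Form the linear combination sum_k coeffs[k] * atoms[k]."""
    n = len(atoms[0])
    x = [0.0] * n
    for atom, c in zip(atoms, coeffs):
        for i in range(n):
            x[i] += c * atom[i]
    return x


def circular_convolution(a: Vector, c: Vector) -> Vector:
    """(a * c)[i] = sum_k a[(i - k) mod n] * c[k]."""
    n = len(a)
    return [sum(a[(i - k) % n] * c[k] for k in range(n)) for i in range(n)]


if __name__ == "__main__":
    a = [1.0, 2.0, 0.0, 0.0]             # one generating atom (hypothetical data)
    atoms = orbit_dictionary([a])         # its 4 cyclic shifts
    coeffs = [0.0, 0.0, 3.0, 0.0]         # sparse code: one active group element
    print(is_shift_invariant(atoms))      # True
    print(synthesize(atoms, coeffs))      # [0.0, 0.0, 3.0, 6.0]
    print(circular_convolution(a, coeffs))  # same vector as above
```

A sparse coefficient vector here selects a few group elements (shifts) of the generating atom, which is the sense in which sparsity is taken "with respect to the collection of matrix group elements"; the paper replaces this finite orbit with a continuous one and a convex penalty.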
