Green Runner: A tool for efficient deep learning component selection


Jan 29, 2024

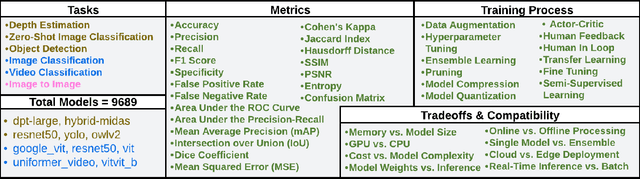

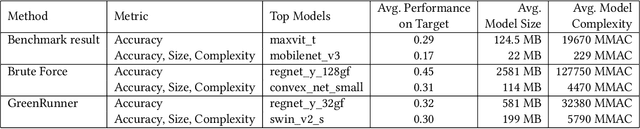

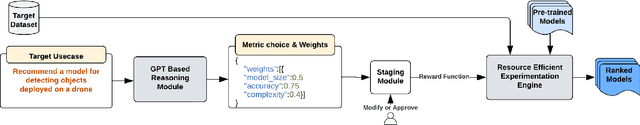

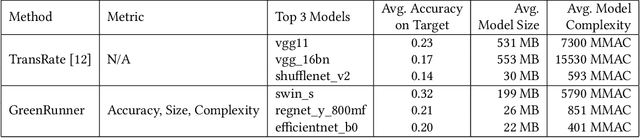

For software that relies on machine-learned functionality, model selection is key to finding the right model for the task with the desired performance characteristics. Evaluating a model requires developers to i) select from many models (e.g. the Hugging Face model repository), ii) select evaluation metrics and a training strategy, and iii) tailor trade-offs based on the problem domain. However, current evaluation approaches are either ad hoc, resulting in sub-optimal model selection, or brute force, leading to wasted compute. In this work, we present Green Runner, a novel tool to automatically select and evaluate models based on an application scenario provided in natural language. We leverage the reasoning capabilities of large language models to propose a training strategy and extract the desired trade-offs from a problem description. Green Runner features a resource-efficient experimentation engine that integrates constraints and trade-offs derived from the problem into the model selection process. Our preliminary evaluation demonstrates that Green Runner is both efficient and accurate compared to ad hoc evaluations and brute force. This work presents an important step toward energy-efficient tools that help reduce the environmental impact caused by the growing demand for software with machine-learned functionality.
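To make the idea of trade-off-aware, resource-efficient selection concrete, here is a minimal Python sketch. It is not the tool's actual engine, which the abstract does not specify: it swaps in a simple successive-halving loop as a stand-in. The model names, metric values, weights, and the `fake_evaluate` function are all hypothetical. In the described tool, the weights would come from a language model reading the problem description.

```python
from typing import Callable, Dict, List, Tuple

Metrics = Dict[str, float]   # metric name -> value in [0, 1], higher is better
Weights = Dict[str, float]   # metric name -> importance (hypothetical values)


def weighted_score(metrics: Metrics, weights: Weights) -> float:
    """Collapse several metrics into one score using the trade-off weights."""
    return sum(weights[name] * metrics[name] for name in weights)


def successive_halving(
    candidates: List[str],
    evaluate: Callable[[str, int], Metrics],
    weights: Weights,
    initial_budget: int = 1,
) -> Tuple[str, int]:
    """Keep the better-scoring half each round; survivors get double the budget.

    Returns the selected candidate and the total budget units spent.
    """
    remaining = list(candidates)
    budget = initial_budget
    total_cost = 0
    while len(remaining) > 1:
        scored = []
        for name in remaining:
            scored.append((weighted_score(evaluate(name, budget), weights), name))
            total_cost += budget
        scored.sort(reverse=True)  # highest weighted score first
        keep = max(1, len(scored) // 2)
        remaining = [name for _, name in scored[:keep]]
        budget *= 2
    return remaining[0], total_cost


# Hypothetical, fixed metrics standing in for real evaluation runs.
FAKE_METRICS: Dict[str, Metrics] = {
    "model_a": {"accuracy": 0.90, "efficiency": 0.20},
    "model_b": {"accuracy": 0.80, "efficiency": 0.70},
    "model_c": {"accuracy": 0.55, "efficiency": 0.90},
    "model_d": {"accuracy": 0.50, "efficiency": 0.50},
}


def fake_evaluate(name: str, budget: int) -> Metrics:
    """Placeholder evaluator: ignores the budget and returns fixed metrics."""
    return FAKE_METRICS[name]


accuracy_first = {"accuracy": 0.7, "efficiency": 0.3}
efficiency_first = {"accuracy": 0.2, "efficiency": 0.8}

best_acc, cost_acc = successive_halving(list(FAKE_METRICS), fake_evaluate, accuracy_first)
best_eff, cost_eff = successive_halving(list(FAKE_METRICS), fake_evaluate, efficiency_first)
print(best_acc, cost_acc)
print(best_eff, cost_eff)
```

Changing only the weights changes which model is selected. This mirrors the abstract's point that trade-offs derived from the problem domain should steer the selection, while weak candidates are dropped early instead of being evaluated at full cost.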
