Gravitational Dimensionality Reduction Using Newtonian Gravity and Einstein's General Relativity

Paper and Code

Oct 30, 2022

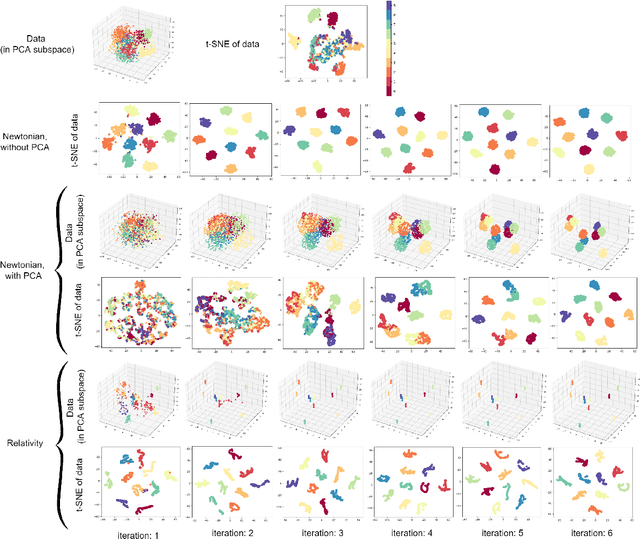

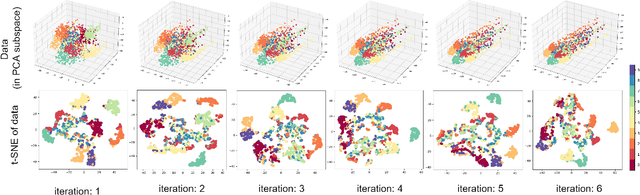

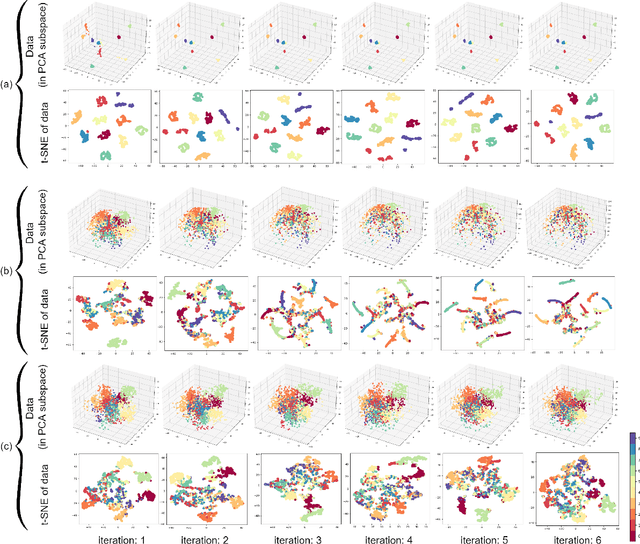

Because machine learning has proven effective in physics, its use there has received increasing attention in the literature. The reverse direction, applying physics in machine learning, has received far less attention. This work is a hybrid of physics and machine learning in which concepts from physics are used in machine learning. We propose the supervised Gravitational Dimensionality Reduction (GDR) algorithm, in which the data points of every class are moved toward each other to reduce intra-class variance and better separate the classes. For every data point, the other points are treated as gravitational particles, such as stars, and the point is attracted by gravity to the points of its own class. The data points are first projected onto a spacetime manifold using principal component analysis. We propose two variants of GDR: one with Newtonian gravity and one with Einstein's general relativity. The former applies Newtonian gravity along a straight line between points, whereas the latter moves data points along the geodesics of the spacetime manifold. For GDR with relativistic gravitation, we use both the Schwarzschild and Minkowski metric tensors to cover both general relativity and special relativity. Our simulations show the effectiveness of GDR in discriminating between classes.
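To make the Newtonian variant more concrete, the following is a minimal sketch, not the authors' implementation. It projects the data with PCA and then pulls each point toward the other points of its class, weighting each neighbor by the inverse square of its distance. The function names `pca_project` and `attract_same_class`, the unit masses, the `step` and `eps` parameters, and the normalization of the weights (used here only to keep each move bounded) are all assumptions made for illustration; the abstract does not specify the actual update rule.

```python
import numpy as np


def pca_project(X, n_components):
    """Project centered data onto its top principal directions (via SVD)."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T


def attract_same_class(Z, y, step=0.5, eps=1e-9):
    """Move every point part of the way toward its same-class neighbors.

    Assumption (not from the paper): all points have unit mass, the pull of
    neighbor j on point i is weighted by 1 / (distance^2 + eps), and the
    weights of each point are normalized to sum to one so that a move never
    leaves the convex hull of the class.
    """
    Z = np.asarray(Z, dtype=float)
    y = np.asarray(y)
    Z_new = Z.copy()
    for c in np.unique(y):
        idx = np.flatnonzero(y == c)
        if idx.size < 2:
            continue  # a lone point has nothing to be attracted to
        P = Z[idx]
        diff = P[None, :, :] - P[:, None, :]        # diff[i, j] = P[j] - P[i]
        w = 1.0 / ((diff ** 2).sum(axis=-1) + eps)  # inverse-square weights
        np.fill_diagonal(w, 0.0)                    # no self-attraction
        w /= w.sum(axis=1, keepdims=True)           # normalize per point
        Z_new[idx] = P + step * (w[:, :, None] * diff).sum(axis=1)
    return Z_new
```

In this sketch, calling `attract_same_class` repeatedly on its own output contracts each class further; the number of iterations and the value of `step` would have to be tuned, and the relativistic variant, which moves points along geodesics, is not covered here.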
