GRASPA 1.0: GRASPA is a Robot Arm graSping Performance benchmArk

Feb 12, 2020

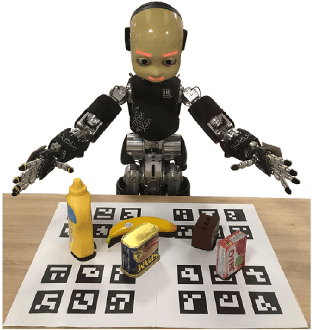

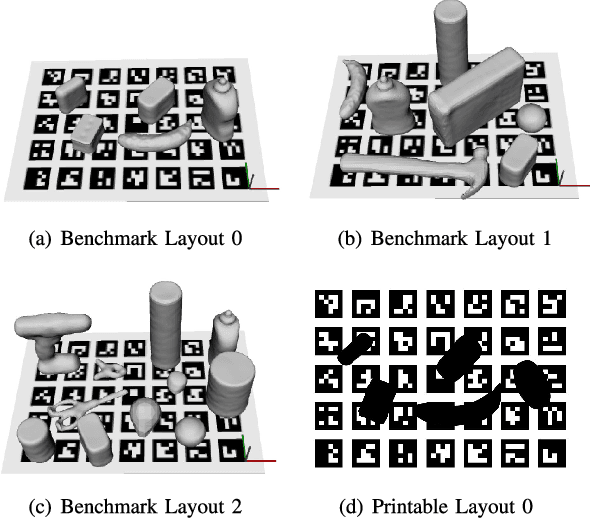

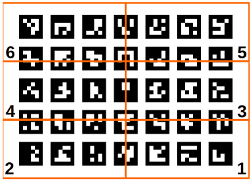

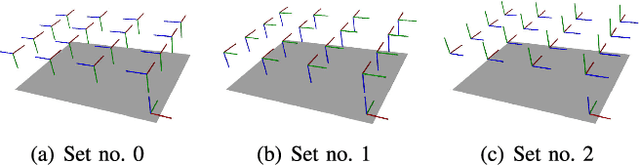

The use of benchmarks is a widespread and scientifically meaningful practice for validating the performance of different approaches to the same task. In robot grasping, common object sets have emerged in recent years, but no dominant protocols or metrics for testing grasping pipelines have yet taken root. In this paper, we present version 1.0 of GRASPA, a benchmark to test the effectiveness of grasping pipelines on physical robot setups. The benchmark tackles the complexity of such pipelines by proposing different metrics that account for the features and limits of the test platform. As an example application, we deploy GRASPA on the iCub humanoid robot and use it to benchmark our grasping pipeline. In closing, we discuss how the resulting GRASPA indicators can provide insight into how different steps of the pipeline affect overall grasping performance.
