Graph2Kernel Grid-LSTM: A Multi-Cued Model for Pedestrian Trajectory Prediction by Learning Adaptive Neighborhoods


Jul 08, 2020

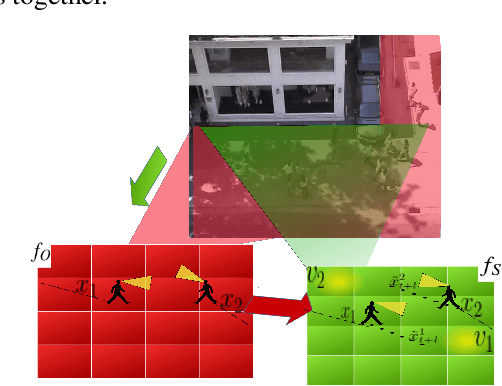

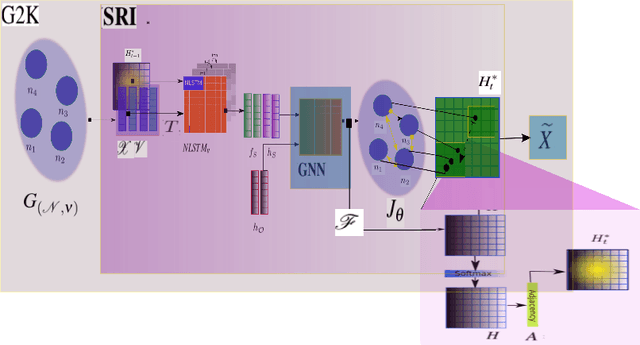

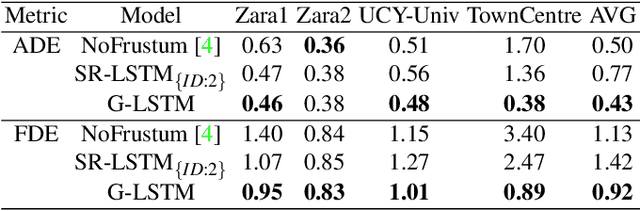

Pedestrian trajectory prediction is a prominent research track that has advanced towards modeling crowd social and contextual interactions, with extensive use of Long Short-Term Memory (LSTM) for the temporal representation of walking trajectories. Existing approaches use virtual neighborhoods in the form of a fixed grid for pooling the social states of pedestrians, with a tuning process that controls how social interactions are captured. This customizes performance to specific scenes but lowers the generalization capability of the approaches. In our work, we deploy *Grid-LSTM*, a recent extension of LSTM that operates over multidimensional feature inputs. We present a new perspective on interaction modeling by proposing that pedestrian neighborhoods can be adaptive in design. We use *Grid-LSTM* as an encoder to learn about potential future neighborhoods and their influence on pedestrian motion, given the visual and spatial boundaries. Our model outperforms state-of-the-art approaches that collate similar features over several publicly tested surveillance videos. The experimental results clearly illustrate the generalization of our approach across datasets that vary in scene features and crowd dynamics.
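For readers unfamiliar with the fixed-grid neighborhoods that the abstract contrasts against, the following is a minimal sketch of that baseline idea, not of the Grid-LSTM model proposed here. It sums the hidden states of nearby pedestrians into a square grid centred on one pedestrian. The function name `fixed_grid_pooling`, the parameters `neighborhood_size` and `grid_size`, and all numeric values are hypothetical and chosen only for illustration; these two parameters are the kind of hand-tuned settings the abstract refers to.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def fixed_grid_pooling(
    ego: Point,
    neighbors: Sequence[Point],
    hidden: Sequence[Sequence[float]],
    neighborhood_size: float = 4.0,  # hypothetical side length of the square neighborhood
    grid_size: int = 2,              # hypothetical number of cells per side
) -> List[List[List[float]]]:
    """Sum neighbors' hidden states into a grid_size x grid_size grid centred on ego."""
    dim = len(hidden[0]) if hidden else 0
    pooled = [[[0.0] * dim for _ in range(grid_size)] for _ in range(grid_size)]
    half = neighborhood_size / 2.0
    cell = neighborhood_size / grid_size
    for (x, y), h in zip(neighbors, hidden):
        dx, dy = x - ego[0], y - ego[1]
        # Neighbors outside the fixed square are ignored entirely.
        if not (-half <= dx < half and -half <= dy < half):
            continue
        col = int((dx + half) // cell)
        row = int((dy + half) // cell)
        for k in range(dim):
            pooled[row][col][k] += h[k]
    return pooled


# Toy example: four neighbors with 2-dimensional hidden states.
pooled = fixed_grid_pooling(
    ego=(0.0, 0.0),
    neighbors=[(1.0, 1.0), (-1.0, -1.0), (5.0, 0.0), (0.5, 1.5)],
    hidden=[[1.0, 0.0], [0.0, 1.0], [9.0, 9.0], [2.0, 2.0]],
)
```

In this toy example the neighbor at (5.0, 0.0) falls outside the fixed square and contributes nothing, regardless of how relevant that pedestrian may be. This is the rigidity that motivates learning adaptive neighborhoods instead.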
