Good and Bad Optimization Models: Insights from Rockafellians


May 13, 2021

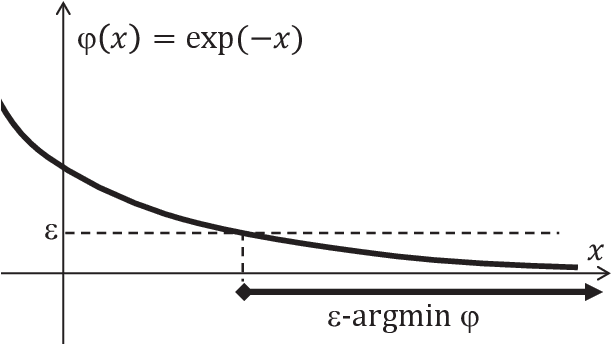

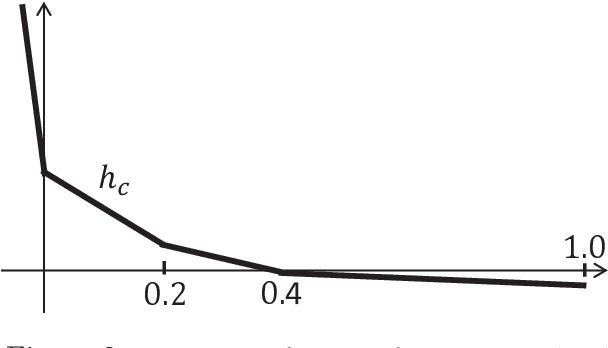

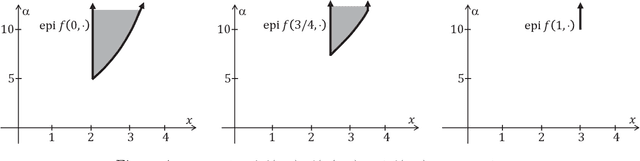

A basic requirement for a mathematical model is often that its solution (output) shouldn't change much if the model's parameters (input) are perturbed. This is important because the exact values of parameters may not be known and one would like to avoid being misled by an output obtained using incorrect values. Thus, it's rarely enough to address an application by formulating a model, solving the resulting optimization problem and presenting the solution as the answer. One would need to confirm that the model is suitable, i.e., "good," and this can, at least in part, be achieved by considering a family of optimization problems constructed by perturbing parameters of concern. The resulting sensitivity analysis uncovers troubling situations with unstable solutions, which we refer to as "bad" models, and indicates better model formulations. Embedding an actual problem of interest within a family of problems is also a primary path to optimality conditions as well as to computationally attractive alternative problems, which, under ideal circumstances and when properly tuned, may even furnish the minimum value of the actual problem. The tuning of these alternative problems turns out to be intimately tied to finding multipliers in optimality conditions and thus emerges as a main component of several optimization algorithms. In fact, the tuning amounts to solving certain dual optimization problems. In this tutorial, we'll discuss the opportunities and insights afforded by this broad perspective.
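As a rough illustration of the perturbation viewpoint, here is a sketch in notation that is assumed for this note rather than taken from the abstract. Let $\varphi$ denote the objective of the actual problem, with constraints folded in through the value $+\infty$. A Rockafellian is a function $f(u,x)$ of a perturbation vector $u$ and a decision $x$ that recovers the actual problem at $u = 0$, and the family of perturbed problems has the min-value function $p$:

$$
f(0,x) = \varphi(x), \qquad p(u) = \inf_x f(u,x).
$$

A model would be considered "good" when $p$ does not change abruptly near $u = 0$. The alternative problems and the dual problem mentioned above can then be written via a Lagrangian with multiplier vector $y$:

$$
l(x,y) = \inf_u \big\{ f(u,x) - \langle y, u \rangle \big\}, \qquad \psi(y) = \inf_x l(x,y), \qquad \sup_y \psi(y) \le p(0).
$$

The inequality follows because taking $u = 0$ in the infimum gives $l(x,y) \le f(0,x) = \varphi(x)$ for every $y$. Tuning corresponds to choosing $y$; when equality holds for some $y$, minimizing $l(\cdot\,,y)$ furnishes the minimum value of the actual problem.

A hypothetical one-dimensional "bad" model, constructed only for illustration: minimize $-x$ over $x \in [0,1]$ subject to $u x \le 0$. At $u = 0$ the constraint is vacuous and $p(0) = -1$, but for every $u > 0$ the only feasible point is $x = 0$, so $p(u) = 0$. An arbitrarily small positive perturbation changes the minimum value by $1$.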
