GFlowNet Fine-tuning for Diverse Correct Solutions in Mathematical Reasoning Tasks

Paper and Code

Oct 26, 2024

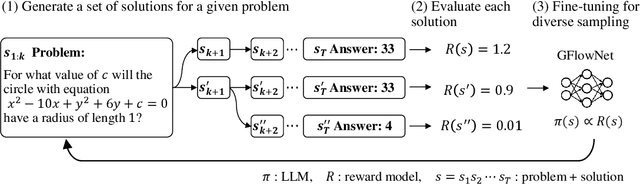

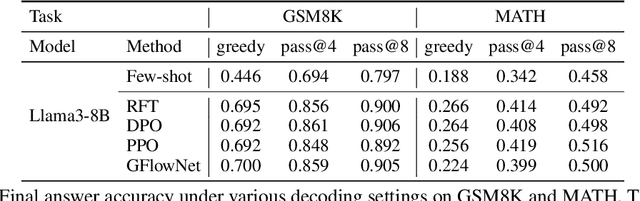

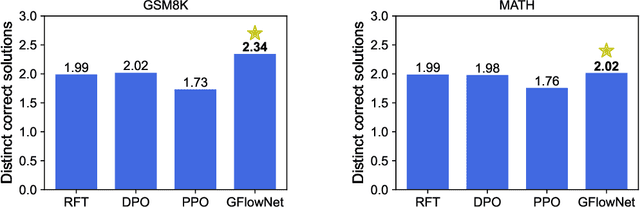

Mathematical reasoning problems are among the most challenging, as they typically require an understanding of fundamental laws to solve. The laws are universal, but the derivation of the final answer changes depending on how a problem is approached. When training large language models (LLMs), learning the capability of generating such multiple solutions is essential to accelerate their use in mathematical education. To this end, we train LLMs using generative flow network (GFlowNet). Different from reward-maximizing reinforcement learning (RL), GFlowNet fine-tuning seeks to find diverse solutions by training the LLM whose distribution is proportional to a reward function. In numerical experiments, we evaluate GFlowNet fine-tuning and reward-maximizing RL in terms of accuracy and diversity. The results show that GFlowNet fine-tuning derives correct final answers from diverse intermediate reasoning steps, indicating the improvement of the capability of alternative solution generation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge