Getting Topology and Point Cloud Generation to Mesh


Dec 08, 2019

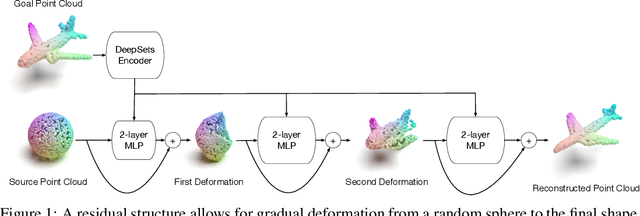

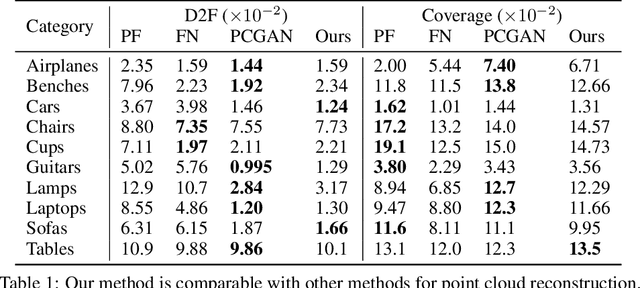

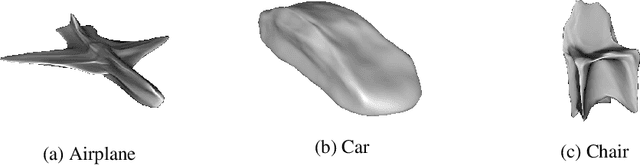

In this work, we explore the idea that effective generative models for point clouds under the autoencoding framework must acknowledge the relationship between a continuous surface, a discretized mesh, and a set of points sampled from the surface. This view motivates a generative model that progressively deforms a uniform sphere until it approximates the target point cloud. We review the concepts from computer graphics and from topology and differential geometry that lead to this conclusion, and model the generation process as a deformation parameterized by a deep neural network. Finally, we show that this view of the problem produces a model that can generate high-quality meshes efficiently.
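The deformation idea in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the Fibonacci-lattice sampling, the two-layer network, the latent code, the number of steps, and every function name (`fibonacci_sphere`, `init_mlp`, `deform`, `chamfer_distance`) are assumptions chosen for illustration, and the weights are random rather than trained. It only shows the shape of the computation: start from points on a sphere, repeatedly displace them with a network conditioned on a latent code, and compare the result with a target point cloud using a Chamfer distance.

```python
import numpy as np

def fibonacci_sphere(n):
    """Return n roughly uniform points on the unit sphere, shape (n, 3)."""
    i = np.arange(n) + 0.5
    phi = np.arccos(1.0 - 2.0 * i / n)        # polar angle
    theta = np.pi * (1.0 + 5.0 ** 0.5) * i    # golden-angle azimuth
    return np.stack([np.cos(theta) * np.sin(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(phi)], axis=1)

def init_mlp(rng, latent_dim, hidden=64):
    """Random (untrained) weights for a hypothetical two-layer network."""
    in_dim = 3 + latent_dim
    return {"W1": rng.normal(0.0, 0.1, (in_dim, hidden)), "b1": np.zeros(hidden),
            "W2": rng.normal(0.0, 0.1, (hidden, 3)), "b2": np.zeros(3)}

def deform(points, latent, params):
    """Displace each point by an offset predicted from (point, latent code)."""
    z = np.broadcast_to(latent, (points.shape[0], latent.shape[0]))
    h = np.tanh(np.concatenate([points, z], axis=1) @ params["W1"] + params["b1"])
    return points + h @ params["W2"] + params["b2"]

def chamfer_distance(a, b):
    """Symmetric Chamfer distance (mean squared nearest-neighbour distance)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()

rng = np.random.default_rng(0)
latent = rng.normal(size=8)                   # stand-in for an encoder output
steps = [init_mlp(rng, latent_dim=8) for _ in range(3)]

points = fibonacci_sphere(256)
for params in steps:                          # progressive deformation
    points = deform(points, latent, params)

target = rng.normal(size=(300, 3))            # placeholder target point cloud
loss = chamfer_distance(points, target)       # would be minimised in training
```

If the starting sphere is triangulated, displacing its vertices leaves the face list unchanged, so the deformed points come with mesh connectivity, which is one way to read the link between surface, mesh, and sampled points that the abstract describes.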

* Sets & Partitions Workshop at NeurIPS 2019
