Generalized Reinforcement Learning: Experience Particles, Action Operator, Reinforcement Field, Memory Association, and Decision Concepts

Aug 29, 2022

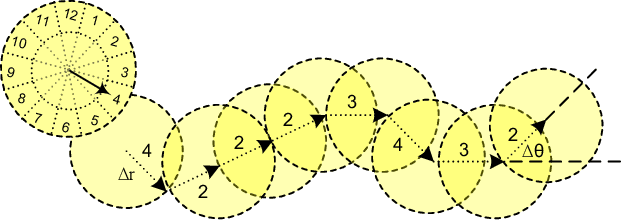

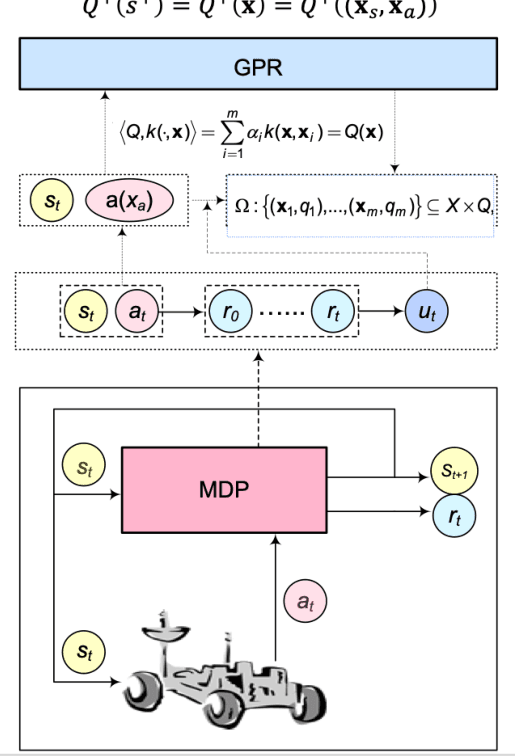

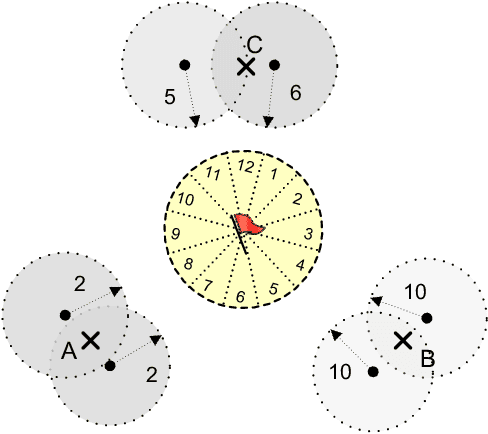

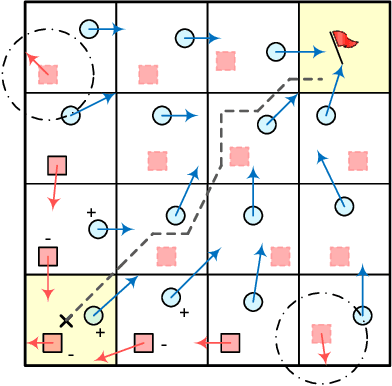

Learning a control policy that adapts to time-varying and potentially evolving system dynamics remains a major challenge for mainstream reinforcement learning (RL). Ever-changing system properties continuously affect how the RL agent interacts with the state space through its actions, which effectively (re-)introduces concept drift into the underlying policy learning process. We postulate that a control policy becomes more adaptable when actions are characterized and represented with extra "degrees of freedom", so that the policy can adjust more flexibly to variations in the actions' "behavioral" outcomes, including how these actions are carried out in real time and shifts in the action set itself. This paper proposes a Bayesian-flavored generalized RL framework. It first establishes the notion of a parametric action model to better cope with uncertainty and fluid action behaviors, and then introduces the reinforcement field, a physics-inspired construct established through "polarized experience particles" maintained in the RL agent's working memory. These particles encode the agent's dynamic learning experience, which evolves over time in a self-organizing way. Using the reinforcement field as a substrate, we further generalize policy search to incorporate high-level decision concepts by viewing past memory as an implicit graph structure in which the memory instances, or particles, are interconnected, with their degrees of associability/similarity defined and quantified so that the "associative memory" principle can be applied consistently to establish and augment the learning agent's evolving world model.
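To make the particle and graph ideas concrete, the following is a minimal sketch only, not the paper's implementation. It assumes each experience particle is a point in a joint state-action-parameter space carrying a signed value (its "polarity"), that associability is measured by a squared-exponential kernel, and that the field at a query point is a similarity-weighted average of particle values. The names `Particle`, `rbf_similarity`, `field_value`, and `association_graph`, as well as the kernel choice and the threshold, are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Particle:
    """Hypothetical experience particle held in working memory."""
    point: Tuple[float, ...]  # assumed encoding: state and action parameters concatenated
    value: float              # signed polarity: > 0 rewarding, < 0 penalizing experience


def rbf_similarity(a: Sequence[float], b: Sequence[float],
                   length_scale: float = 1.0) -> float:
    """Squared-exponential similarity in (0, 1]; an assumed associability measure."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / (2.0 * length_scale ** 2))


def field_value(query: Sequence[float], memory: List[Particle],
                length_scale: float = 1.0) -> float:
    """Similarity-weighted average of particle values at the query point."""
    weights = [rbf_similarity(query, p.point, length_scale) for p in memory]
    total = sum(weights)
    if total == 0.0:
        # Empty memory, or every weight underflowed to zero: no evidence either way.
        return 0.0
    return sum(w * p.value for w, p in zip(weights, memory)) / total


def association_graph(memory: List[Particle], threshold: float = 0.5,
                      length_scale: float = 1.0) -> Dict[Tuple[int, int], float]:
    """Make the implicit memory graph explicit: keep edges whose similarity meets the threshold."""
    edges: Dict[Tuple[int, int], float] = {}
    for i in range(len(memory)):
        for j in range(i + 1, len(memory)):
            s = rbf_similarity(memory[i].point, memory[j].point, length_scale)
            if s >= threshold:
                edges[(i, j)] = s
    return edges
```

Under these assumptions, querying the field near a cluster of positively polarized particles yields a positive value, and only nearby particles end up linked in the graph. The framework described above may define both the field and the associability measure differently.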
