Generalized Label Shift Correction via Minimum Uncertainty Principle: Theory and Algorithm

Feb 26, 2022

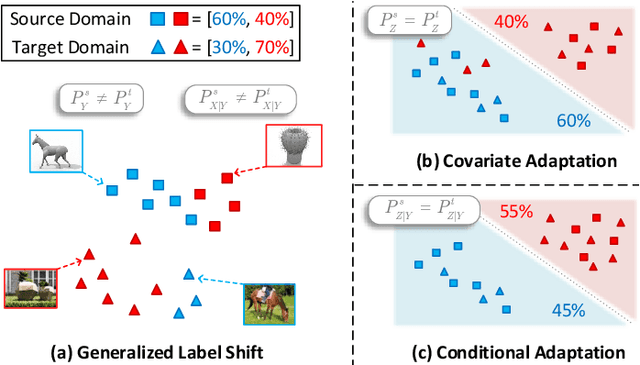

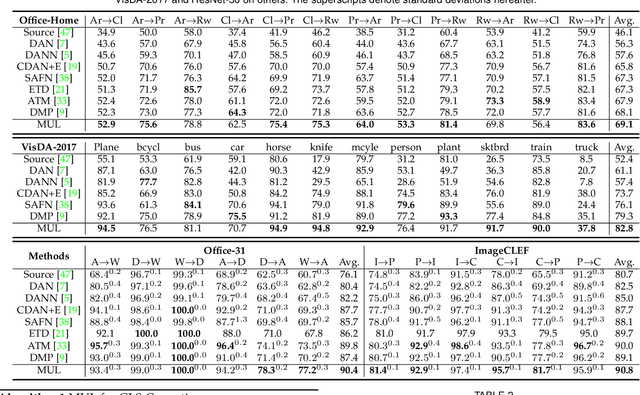

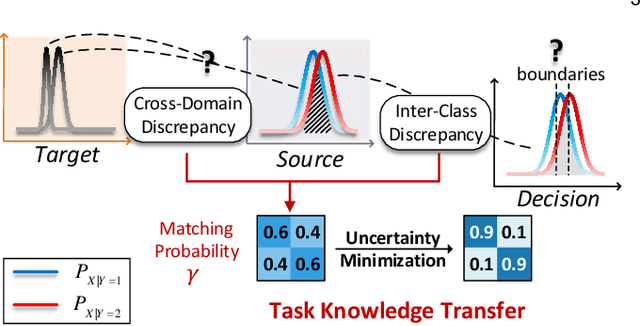

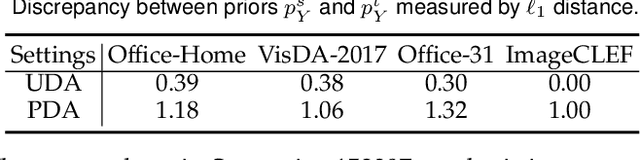

As a fundamental problem in machine learning, dataset shift induces a paradigm for learning and transferring knowledge under changing environments. Previous methods assume that the changes are induced by covariate shift, which is less practical for complex real-world data. We consider Generalized Label Shift (GLS), which provides interpretable insight into the learning and transfer of desirable knowledge. Current GLS methods 1) are not well connected with statistical learning theory, and 2) usually assume that the shifting conditional distributions can be matched by an implicit transformation, while the explicit modeling of that transformation remains unexplored. In this paper, we propose a conditional adaptation framework to address these challenges. From the perspective of learning theory, we prove that the generalization error of conditional adaptation is lower than that of previous covariate adaptation. Following the theoretical results, we propose the minimum uncertainty principle to learn a conditional invariant transformation via discrepancy optimization. Specifically, we propose the *conditional metric operator* on Hilbert space to characterize the distinctness of conditional distributions. For finite observations, we prove that the empirical estimate is always well defined and converges to the underlying truth as the sample size increases. The results of extensive experiments demonstrate that the proposed model achieves competitive performance under different GLS scenarios.
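To make the idea of comparing conditional distributions across domains concrete, the following is a minimal sketch of a class-conditional discrepancy. It is not the paper's conditional metric operator, whose definition the abstract does not give. It substitutes a simpler, well-known quantity: the biased estimate of the squared maximum mean discrepancy (MMD) with a Gaussian kernel, computed separately for each class and then averaged. All function names, the `gamma` bandwidth parameter, and the assumption that target labels (or pseudo-labels) are available are hypothetical choices made for illustration.

```python
import math
from collections import defaultdict


def rbf_kernel(x, y, gamma=1.0):
    """Gaussian RBF kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def mean_kernel(xs, ys, gamma):
    """Average kernel value over all pairs drawn from xs and ys."""
    total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd2(xs, ys, gamma=1.0):
    """Biased (V-statistic) estimate of the squared MMD between two samples."""
    return (mean_kernel(xs, xs, gamma)
            + mean_kernel(ys, ys, gamma)
            - 2.0 * mean_kernel(xs, ys, gamma))


def group_by_label(features, labels):
    """Collect feature vectors into one list per class label."""
    groups = defaultdict(list)
    for x, y in zip(features, labels):
        groups[y].append(x)
    return groups


def conditional_discrepancy(src_x, src_y, tgt_x, tgt_y, gamma=1.0):
    """Average per-class squared MMD over classes present in both domains.

    Assumption: tgt_y holds target labels or pseudo-labels; in an
    unsupervised setting these would have to be estimated.
    """
    src = group_by_label(src_x, src_y)
    tgt = group_by_label(tgt_x, tgt_y)
    shared = sorted(set(src) & set(tgt))
    if not shared:
        raise ValueError("no class appears in both domains")
    return sum(mmd2(src[c], tgt[c], gamma) for c in shared) / len(shared)
```

Because each class is compared separately and the per-class values are averaged with equal weight, the sketch does not penalize a change in class proportions between domains, only a change in the class-conditional feature distributions. A learned transformation would be applied to the features before calling `conditional_discrepancy`, and the returned value could serve as a quantity to minimize.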
