Generalization in the Face of Adaptivity: A Bayesian Perspective


Jun 20, 2021

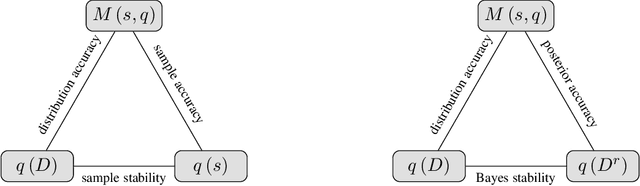

Repeated use of a data sample via adaptively chosen queries can rapidly lead to overfitting, wherein the issued queries yield answers on the sample that differ wildly from the values of those queries on the underlying data distribution. Differential privacy provides a tool to ensure generalization despite adaptively chosen queries, but its worst-case nature means that it cannot, for example, yield improved results for low-variance queries. In this paper, we give a simple new characterization that illuminates the core problem of adaptive data analysis. We show explicitly that the harms of adaptivity come from the covariance between the behavior of future queries and a Bayes factor-based measure of how much information about the data sample was encoded in the responses given to past queries. We leverage this intuition to introduce a new stability notion; we then use it to prove new generalization results for the most basic noise-addition mechanisms (Laplace and Gaussian noise addition), with guarantees that scale with the variance of the queries rather than the square of their range. Our characterization opens the door to new insights and new algorithms for the fundamental problem of achieving generalization in adaptive data analysis.
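To make the setting concrete, the following is a minimal illustrative sketch of adaptive overfitting and of Gaussian noise addition. It is not the paper's algorithm or analysis. The function names (`noisy_answer`, `adaptive_gap`), the synthetic data, and the noise scale `sigma=0.5` are all assumptions chosen for illustration. Features and labels are drawn independently, so every query has true mean 0 and the final query's empirical value is exactly its generalization gap.

```python
import numpy as np

def noisy_answer(sample, query, sigma, rng):
    """Empirical mean of `query` on `sample`, plus Gaussian noise N(0, sigma^2)."""
    empirical = float(np.mean(query(sample)))
    if sigma == 0.0:
        return empirical  # exact (non-private) answer
    return empirical + float(rng.normal(0.0, sigma))

def adaptive_gap(sample, sigma, rng):
    """Ask d first-round queries, then build a final query from the answers.

    Returns the final query's empirical mean on `sample`. Because the true
    mean of every query here is 0, this value is the generalization gap.
    """
    d = sample.shape[1] - 1  # last column holds the label
    # Round 1: correlation of each feature with the label.
    answers = np.array([
        noisy_answer(sample, lambda s, j=j: s[:, j] * s[:, -1], sigma, rng)
        for j in range(d)
    ])
    # Round 2: the final query depends on the signs of the earlier answers.
    signs = np.sign(answers)
    final_query = lambda s: (s[:, :-1] * signs).mean(axis=1) * s[:, -1]
    return float(np.mean(final_query(sample)))

rng = np.random.default_rng(0)
n, d = 101, 200  # odd n, so no empirical correlation can be exactly zero
sample = rng.choice([-1.0, 1.0], size=(n, d + 1))

exact_gap = adaptive_gap(sample, sigma=0.0, rng=rng)
noisy_gap = adaptive_gap(sample, sigma=0.5, rng=rng)
print(f"gap with exact answers: {exact_gap:.3f}")
print(f"gap with noisy answers: {noisy_gap:.3f}")
```

With exact answers, the final query's empirical value is the average absolute correlation, which is strictly positive even though its true value is 0. With noisy answers, the signs carry less information about the sample, so the gap tends to be smaller on average; any single run may deviate from that trend.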
