Generalization Error of GAN from the Discriminator's Perspective

Jul 08, 2021

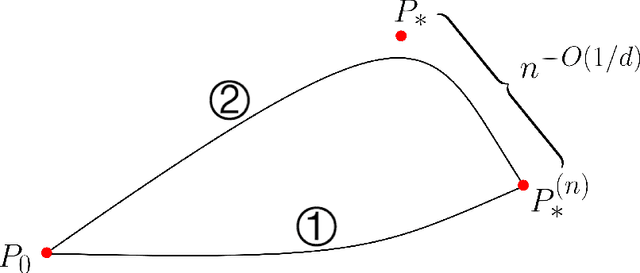

The generative adversarial network (GAN) is a well-known model for learning high-dimensional distributions, but the mechanism behind its generalization ability is not understood. In particular, GAN is vulnerable to the memorization phenomenon, that is, the eventual convergence to the empirical distribution. We consider a simplified GAN model in which the generator is replaced by a density, and analyze how the discriminator contributes to generalization. We show that with early stopping, the generalization error measured by the Wasserstein metric escapes the curse of dimensionality, even though memorization is inevitable in the long term. In addition, we present a hardness-of-learning result for WGAN.
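As a rough illustration of the memorization phenomenon described above, the following sketch compares a "generator" that simply replays its training samples against the training set and against fresh samples from the same target. Everything here is an assumption made for illustration and is not taken from the paper: the one-dimensional Gaussian target, the sample size, the helper name `wasserstein1_1d`, and the use of a held-out sample as a stand-in for the true distribution. It relies only on the standard fact that, in one dimension, the Wasserstein-1 distance between two equal-size empirical distributions is the mean absolute difference of their sorted samples.

```python
import random


def wasserstein1_1d(xs, ys):
    """Wasserstein-1 distance between two equal-size 1-D empirical distributions.

    In one dimension the optimal coupling matches sorted samples, so the
    distance is the mean absolute difference of the order statistics.
    """
    if len(xs) != len(ys):
        raise ValueError("samples must have equal size")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


rng = random.Random(0)
n = 200  # hypothetical sample size

# Hypothetical target: a standard Gaussian in one dimension.
train = [rng.gauss(0.0, 1.0) for _ in range(n)]  # training samples
fresh = [rng.gauss(0.0, 1.0) for _ in range(n)]  # held-out samples, same target

# A "generator" that has memorized the training set exactly.
memorized = list(train)

train_gap = wasserstein1_1d(memorized, train)  # distance to the empirical distribution
test_gap = wasserstein1_1d(memorized, fresh)   # proxy for the generalization error

print("distance to training set:", train_gap)
print("distance to fresh samples:", test_gap)
```

The memorizing model is at distance zero from the empirical distribution but at a strictly positive distance from fresh samples. Because this toy is one-dimensional, it does not exhibit the curse of dimensionality or the effect of early stopping that the abstract refers to.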