Generalization Bounds For Unsupervised and Semi-Supervised Learning With Autoencoders

Feb 04, 2019

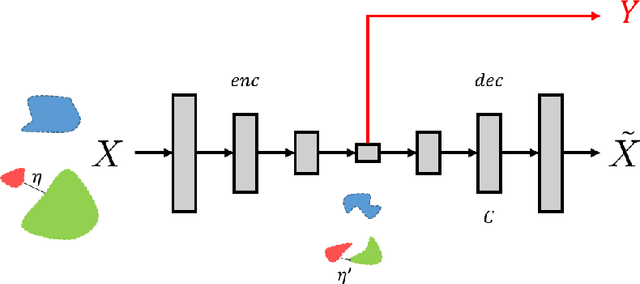

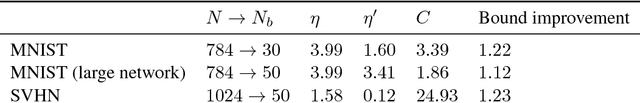

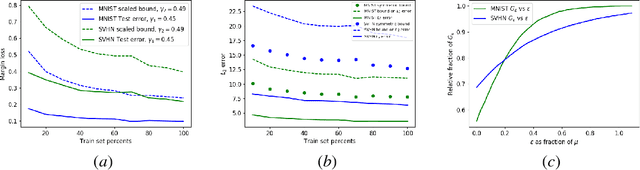

Autoencoders are widely used for unsupervised learning and as a regularization scheme in semi-supervised learning. However, theoretical understanding of their generalization properties and of the manner in which they can assist supervised learning has been lacking. We utilize recent advances in the theory of deep learning generalization, together with a novel reconstruction loss, to provide generalization bounds for autoencoders. To the best of our knowledge, this is the first such bound. We further show that, under appropriate assumptions, an autoencoder with good generalization properties can improve any semi-supervised learning scheme. We support our theoretical results with empirical demonstrations.

* Submitted to COLT 2019
