GADFormer: An Attention-based Model for Group Anomaly Detection on Trajectories

Mar 17, 2023

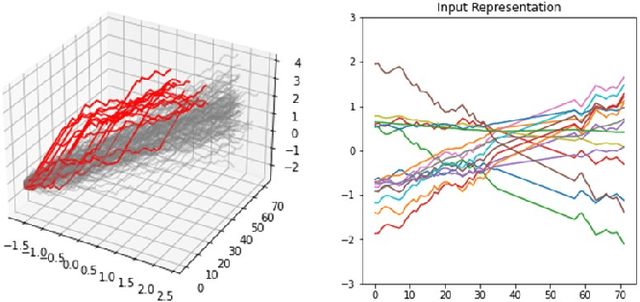

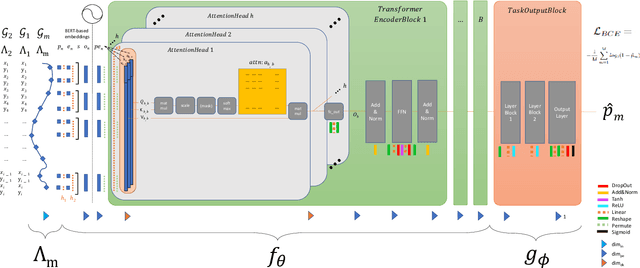

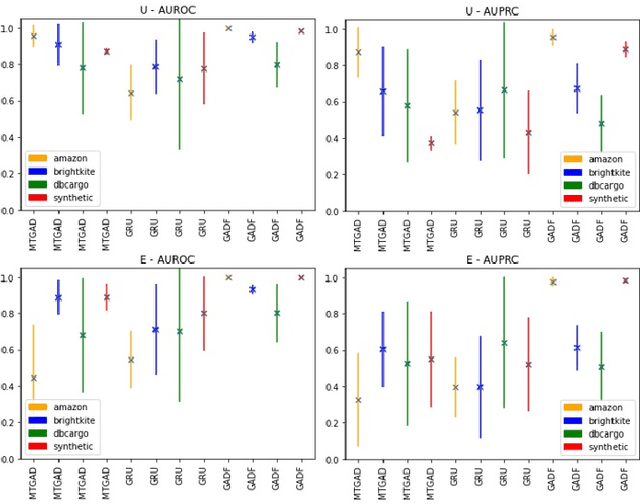

Group Anomaly Detection (GAD) identifies anomalous behavior among groups of multiple member instances that, considered individually, are not necessarily anomalous. This task is of major importance across multiple disciplines, in which sequences such as trajectories can also be considered groups. However, as the number and heterogeneity of group members increase, truly abnormal groups become harder to detect, especially in unsupervised or semi-supervised settings. Recurrent Neural Networks are well-established deep sequence models, but recent work has shown that their performance can degrade as sequence length increases. We therefore introduce GADFormer, a GAD-specific BERT architecture capable of performing attention-based Group Anomaly Detection on trajectories in unsupervised and semi-supervised settings. We show formally and experimentally how trajectory outlier detection can be realized as an attention-based Group Anomaly Detection problem. Furthermore, we introduce a Block Attention-anomaly Score (BAS) to improve the interpretability of transformer encoder blocks for GAD. In addition, synthetic trajectory generation allows us to optimize training for domain-specific GAD. In extensive experiments, we compare our approach with GRU in terms of robustness to trajectory noise and novelties on synthetic and real-world datasets.
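To make the framing of a trajectory as a group concrete, the following is a minimal sketch and not the GADFormer architecture: it has no learned weights, no BERT encoder, and no BAS. It assumes that a trajectory is split into fixed-size segments that act as group members, that each member is described by its mean step vector, and that a group is scored by its distance to a reference center. All function names (`segment`, `embed`, `attention_pool`, `group_score`) and the choice of features are hypothetical and chosen only for illustration.

```python
import math

def segment(trajectory, size):
    """Split a trajectory (list of (x, y) points) into consecutive,
    non-overlapping segments; each segment plays the role of one group member."""
    return [trajectory[i:i + size]
            for i in range(0, len(trajectory) - size + 1, size)]

def embed(seg):
    """Hypothetical member feature: the mean step vector of the segment."""
    steps = [(b[0] - a[0], b[1] - a[1]) for a, b in zip(seg, seg[1:])]
    n = len(steps)
    return (sum(s[0] for s in steps) / n, sum(s[1] for s in steps) / n)

def attention_pool(members):
    """Unlearned scaled dot-product self-attention over the members,
    followed by mean pooling into a single group representation."""
    d = len(members[0])
    attended = []
    for q in members:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in members]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        attended.append(tuple(
            sum(w / total * v[j] for w, v in zip(weights, members))
            for j in range(d)))
    return tuple(sum(a[j] for a in attended) / len(attended) for j in range(d))

def group_score(trajectory, center, size=4):
    """Assumed anomaly score: distance of the group representation to a
    reference center that stands in for 'normal' behavior."""
    members = [embed(s) for s in segment(trajectory, size)]
    return math.dist(attention_pool(members), center)

# A straight trajectory defines the reference center; a zigzag one deviates.
straight = [(i, 0) for i in range(16)]
zigzag = [(i, 3 * (i % 2)) for i in range(16)]
center = attention_pool([embed(s) for s in segment(straight, 4)])
print(group_score(straight, center), group_score(zigzag, center))
```

In this sketch, no single point of the zigzag trajectory is unusual on its own; the deviation only shows up once the members are aggregated into a group representation, which is the intuition behind treating trajectory outlier detection as a group-level problem.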
